Table of Contents

- Introduction

- Editor’s Choice

- Key Market AI Chips Statistics

- Top Market Players in the AI Chips Market Statistics

- Comparison of AI Chips and GPU Performance Statistics

- Memory Technologies in AI Chips Statistics

- Power Efficiency Metrics in AI Chips Statistics

- Notable AI Chips and Their Specifications Key Statistics

- Venture Capital Spending On Semiconductors, AI Chips Statistics

- Quantum Computing’s Impact on AI Chips Statistics

- Recent Developments

- Conclusion

Introduction

AI Chips Statistics: AI chips, also called AI accelerators, are specialized hardware designed to enhance the speed and efficiency of artificial intelligence tasks. Unlike traditional CPUs, they’re optimized for parallel processing and specific AI operations, like matrix calculations in neural networks.

Types include GPUs, TPUs, and ASICs. These chips boost performance metrics like FLOPS, INT8/INT4 ops, and memory bandwidth to balance power efficiency and computation power.

They’re a crucial part of the AI ecosystem, aiding tasks from image recognition to natural language processing and driving AI research and application advancements.

Editor’s Choice

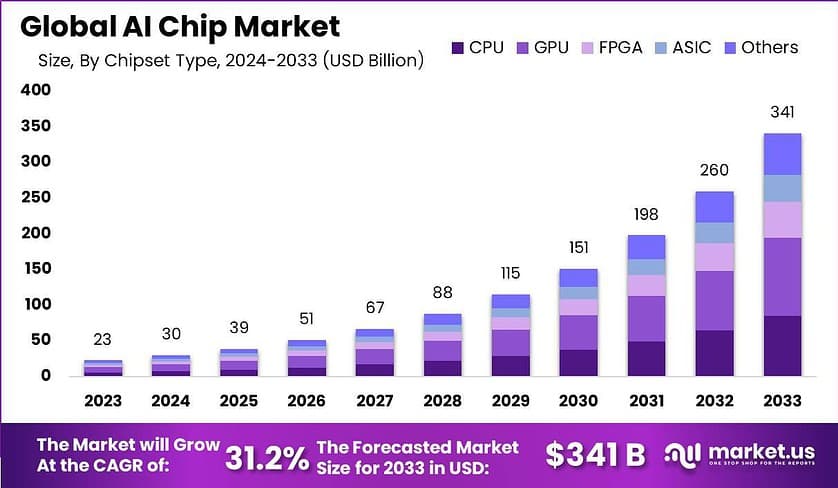

- The Global AI Chip Market size is expected to be worth around USD 341 Billion by 2033, from USD 23.0 Billion in 2023, growing at a CAGR of 31.2% during the forecast period from 2024 to 2033.

- The market is anticipated to reach a valuation of 165 billion USD by 2030.

- In 2017, Intel became the first AI chip manufacturer to exceed $1 billion in sales.

- NVIDIA’s GPUs have consistently pushed the boundaries of FLOPS performance. For instance, the NVIDIA A100 GPU, released in 2020, delivered over 19 teraflops (TFLOPS) of single-precision (FP32) performance and around 9.7 TFLOPS of double-precision (FP64) performance.

- Venture capital spending in the AI sector followed a dynamic trajectory over the years. In the first quarter of 2018, the investment amounted to $282 million, marking the inception of this financial trend.

- Based on a survey conducted in 2022, the global implementation of quantum computing surpassed the adoption rate of artificial intelligence (AI).

Key Market AI Chips Statistics

- The AI chip market has grown rapidly, with its size expanding at a compound annual growth rate (CAGR) of approximately 31.2%.

- In 2023, the market was valued at 23 billion USD, demonstrating the industry’s potential.

- Building on the 2023 base of 23 billion USD, the market is estimated to reach 30 billion USD in 2024.

- As AI technologies advance, the market is projected to grow further, with estimates of 39 billion USD in 2025 and 51 billion USD in 2026.

- The trend is expected to continue upward, with market sizes projected to be 67 billion USD in 2027, 88 billion USD in 2028, and 115 billion USD in 2029.

- Looking ahead, the AI chip market is anticipated to reach a valuation of 341 billion USD by 2033. This growth reflects the increasing integration of AI technologies across diverse industries and underscores the significance of AI chips in driving innovation and transformative applications.
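
The growth figures above can be sanity-checked with a short calculation. The sketch below is illustrative only: the helper names `cagr` and `project` are hypothetical, and it assumes a constant annual growth rate applied to the 2023 base, which is a simplification of however the forecast was actually built.

```python
# Figures taken from the text above (USD billions); helper names are illustrative.
START_YEAR, START_VALUE = 2023, 23.0
END_YEAR, END_VALUE = 2033, 341.0
STATED_CAGR = 0.312


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.31 means 31%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> list:
    """Value at the end of each year, assuming a constant growth rate."""
    return [start_value * (1 + rate) ** n for n in range(1, years + 1)]


# Growth rate implied by the 2023 and 2033 endpoints.
implied_rate = cagr(START_VALUE, END_VALUE, END_YEAR - START_YEAR)

# Year-by-year values if the stated 31.2% were applied every year from 2023.
projection = project(START_VALUE, STATED_CAGR, END_YEAR - START_YEAR)

print(f"Implied CAGR {START_YEAR}-{END_YEAR}: {implied_rate:.1%}")
for offset, value in enumerate(projection, start=1):
    print(START_YEAR + offset, round(value, 1))
```

Under these assumptions the implied rate comes out close to the stated 31.2%, and the yearly values land near the rounded figures in the list. Small differences are expected because the published numbers are rounded.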

Top Market Players in the AI Chips Market Statistics

- NVIDIA has an extensive history in manufacturing graphics processing units (GPUs) for the gaming sector, with a presence dating back to the 1990s. It has supplied GPUs for popular gaming platforms like the PlayStation 3 and Xbox. The company also develops AI chips such as Volta, Xavier, and Tesla. Strong demand driven by generative AI contributed to impressive results in Q2 2023 and a market valuation surpassing one trillion USD.

- Intel, a significant player in the industry, boasts a rich heritage in technology advancement. In 2017, the company became the first AI chip manufacturer to exceed $1 billion in sales.

- Google’s Cloud TPU is designed to accelerate machine learning and powers various Google products, including Translate, Photos, and Search. It can be accessed through the Google Cloud platform. Another offering, Google’s Edge TPU, is tailored for smaller devices such as smartphones, tablets, and IoT devices, delivering efficient edge computing solutions.

- AMD, a chip manufacturer, provides various products encompassing CPUs, GPUs, and AI accelerators. For instance, their Alveo U50 data center accelerator card stands out with an impressive 50 billion transistors. It excels in tasks like efficiently managing embedding datasets and swiftly executing graph algorithms.

- In 2014, IBM unveiled the “neuromorphic chip” TrueNorth AI. This remarkable chip boasts specifications including 5.4 billion transistors, 1 million neurons, and 256 million synapses. It’s engineered for streamlined deep network inference and precise data interpretation.

(Source: AI Multiple)

Comparison of AI Chips and GPU Performance Statistics

- NVIDIA’s GPUs have consistently pushed the boundaries of FLOPS performance. For instance, the NVIDIA A100 GPU, released in 2020, delivered over 19 teraflops (TFLOPS) of single-precision (FP32) performance and around 9.7 TFLOPS of double-precision (FP64) performance.

- Google’s Tensor Processing Units (TPUs) are known for their high AI-related FLOPS. The TPU v3, introduced in 2018, was reported to provide around 420 TFLOPS of AI performance.

- Intel’s Nervana Neural Network Processor for Training (NNP-T) aimed to provide strong AI performance. The Intel NNP-T 1000, released in 2020, targeted 119 TFLOPS of AI performance.

- AMD GPUs, such as the Radeon Instinct MI100, introduced in 2020, promised around 11.5 TFLOPS of double-precision performance.

- Custom chips, like Apple’s M1, introduced in 2020, showcased impressive AI capabilities with around 2.6 TFLOPS of throughput.

(Source: Silicon Angle)

Memory Technologies in AI Chips Statistics

- On-chip memory stands out for its bandwidth and efficiency despite its capacity limitations. A notable example is Cerebras, whose chip pairs 18 GB of on-chip memory and 9 petabytes/sec of memory bandwidth with 400,000 AI-focused cores.

- Prominent offerings like AMD’s Radeon RX Vega 56, NVIDIA’s Tesla V100, Fujitsu’s A64FX processor featuring 4 HBM2 DRAMs, and NEC’s Vector Engine Processor equipped with 6 HBM2 DRAMs, underline the preference for HBM2 in supercomputing scenarios.

- Conversely, GDDR extends to AI system designers the advantages of substantial bandwidth and well-established manufacturing methods akin to conventional DDR memory systems. With a legacy spanning two decades, GDDR memory utilizes tried-and-true chip-on-PCB manufacturing practices.

- In a comparison of HBM2 and GDDR6, HBM2 has the advantage in both power and area.

- GDDR6 exhibits notably higher power consumption (3.5 to 4 times) on the System-on-Chip (SoC) PHY than HBM2.

- Additionally, GDDR6 occupies a larger PHY area (1.5 to 1.75 times) on the SoC.

(Source: Semiconductor Engineering)
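
To put the Cerebras figures in perspective, the aggregate numbers can be divided across its cores. This is a rough sketch only: it assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes) and an even split across cores, neither of which is stated in the source.

```python
# Aggregate figures quoted above for the Cerebras chip.
MEMORY_BANDWIDTH_BYTES_PER_S = 9e15   # 9 PB/s, assuming decimal petabytes
ON_CHIP_MEMORY_BYTES = 18e9           # 18 GB, assuming decimal gigabytes
CORES = 400_000

# Assumption: bandwidth and memory are spread evenly across all cores.
bandwidth_per_core_gb_s = MEMORY_BANDWIDTH_BYTES_PER_S / CORES / 1e9
memory_per_core_kb = ON_CHIP_MEMORY_BYTES / CORES / 1e3

print(f"Bandwidth per core: {bandwidth_per_core_gb_s:.1f} GB/s")
print(f"On-chip memory per core: {memory_per_core_kb:.0f} KB")
```

The result illustrates the trade-off described above: each core sees a very high share of bandwidth but only a small slice of memory.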

Power Efficiency Metrics in AI Chips Statistics

- In April 2023, Qualcomm Inc’s AI chips performed better than Nvidia Corp’s in two out of three measurements related to power efficiency.

- A significant cost factor pertains to power consumption. Qualcomm capitalized on its expertise in chip design for battery-dependent devices, such as smartphones, to create the Cloud AI 100 chip with a strong emphasis on reducing power usage.

- Regarding power efficiency, Qualcomm’s chips achieved 227.4 server queries per watt, surpassing Nvidia’s 108.4 queries per watt.

- Qualcomm also exceeded Nvidia in object detection, achieving a score of 3.8 queries per watt compared to Nvidia’s 2.4 queries per watt.

- However, Nvidia secured the top position in a test of natural language processing, a crucial AI technology widely used in applications like chatbots, leading in both absolute performance and power efficiency.

- Nvidia achieved 10.8 queries per watt, while Qualcomm secured the second place with 8.9 queries per watt.

(Source: Reuters)
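
The relative gaps between the two vendors are easier to compare as ratios. The following is a minimal sketch using only the queries-per-watt figures quoted above; the test labels and the `advantage` helper are illustrative names, not part of the benchmark.

```python
# Queries per watt as quoted in the text above: (Qualcomm, Nvidia).
RESULTS = {
    "server queries": (227.4, 108.4),
    "object detection": (3.8, 2.4),
    "natural language processing": (8.9, 10.8),
}


def advantage(qualcomm: float, nvidia: float):
    """Return the leader and how many times more efficient it is than the other."""
    if qualcomm >= nvidia:
        return "Qualcomm", qualcomm / nvidia
    return "Nvidia", nvidia / qualcomm


summary = {test: advantage(*scores) for test, scores in RESULTS.items()}

for test, (leader, ratio) in summary.items():
    print(f"{test}: {leader} leads by {ratio:.2f}x")
```

Expressed this way, Qualcomm’s lead in the first two tests is proportionally larger than Nvidia’s lead in natural language processing.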

Notable AI Chips and Their Specifications Key Statistics

NVIDIA GPUs

Tesla V100

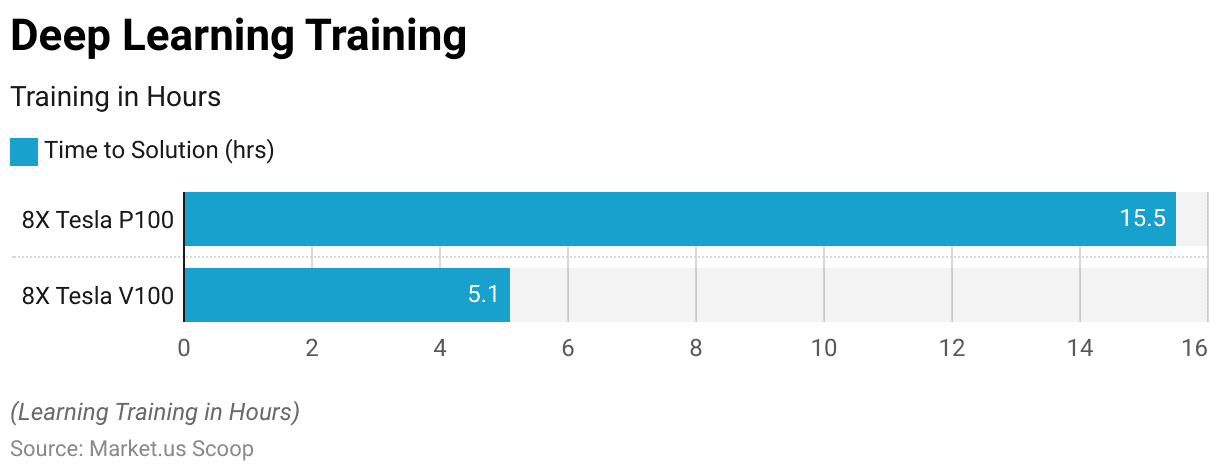

- Having 640 Tensor Cores, the Tesla V100 stands out as the first GPU globally to surpass the 100 teraFLOPS (TFLOPS) mark in deep learning performance.

- The next generation of NVIDIA NVLink connects multiple V100 GPUs at up to 300 GB/s to create what NVIDIA describes as the world’s most powerful computing servers.

- The Tesla V100 is designed for maximum efficiency in existing hyperscale server racks.

- At its core, the Tesla V100 GPU prioritizes AI, resulting in a remarkable 30-fold increase in inference performance compared to a CPU server.

- The NVIDIA Tesla V100 boasts an array of specifications across different variants, such as Tesla V100 for NVLink, Tesla V100 for PCIe, and Tesla V100S for PCIe.

- With NVIDIA GPU Boost, the Tesla V100 for NVLink achieves 7.8 teraFLOPS in double-precision computation, 15.7 teraFLOPS in single-precision processing, and 125 teraFLOPS in deep learning tasks.

- For the Tesla V100 for PCIe variant, the performance figures are 7 teraFLOPS (double-precision), 14 teraFLOPS (single-precision), and 112 teraFLOPS (deep learning).

- Meanwhile, the Tesla V100S for PCIe variant reaches 8.2 teraFLOPS (double-precision), 16.4 teraFLOPS (single-precision), and an impressive 130 teraFLOPS (deep learning).

- The bi-directional NVLink achieves 300 GB/s for interconnect bandwidth, while PCIe offers 32 GB/s.

- Memory-wise, the GPUs are equipped with CoWoS Stacked HBM2, with capacities ranging from 16 GB to 32 GB and bandwidths running from 900 GB/s to 1134 GB/s.

- Maximum power consumption is 300 watts for the Tesla V100 for NVLink, while the Tesla V100 for PCIe operates at 250 watts.

(Source: NVIDIA)
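
The variant figures above lend themselves to two quick derived metrics: how much faster the deep learning (Tensor Core) path is than plain single precision, and deep learning throughput per watt. The sketch below uses only the numbers quoted in the list. The class and function names are illustrative, and it assumes the 300 W figure applies to the NVLink variant; no power figure is quoted for the V100S, so it is left as `None`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class V100Variant:
    name: str
    fp64_tflops: float
    fp32_tflops: float
    deep_learning_tflops: float
    max_power_watts: Optional[float]  # None where the text gives no figure


VARIANTS = [
    V100Variant("Tesla V100 for NVLink", 7.8, 15.7, 125.0, 300.0),
    V100Variant("Tesla V100 for PCIe", 7.0, 14.0, 112.0, 250.0),
    V100Variant("Tesla V100S for PCIe", 8.2, 16.4, 130.0, None),
]


def tensor_speedup(variant: V100Variant) -> float:
    """Deep learning throughput relative to single-precision throughput."""
    return variant.deep_learning_tflops / variant.fp32_tflops


def dl_tflops_per_watt(variant: V100Variant) -> Optional[float]:
    """Deep learning TFLOPS per watt, or None if no power figure is known."""
    if variant.max_power_watts is None:
        return None
    return variant.deep_learning_tflops / variant.max_power_watts


for v in VARIANTS:
    per_watt = dl_tflops_per_watt(v)
    per_watt_text = "n/a" if per_watt is None else f"{per_watt:.3f}"
    print(f"{v.name}: {tensor_speedup(v):.1f}x over FP32, "
          f"{per_watt_text} deep learning TFLOPS/W")
```

Across all three variants the deep learning figure is roughly eight times the single-precision figure, which is consistent with the role of the Tensor Cores described above.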

A100

- The A100 is available as the A100 80GB PCIe and the A100 80GB SXM. In terms of computational performance, these GPUs deliver 9.7 TFLOPS in FP64, 19.5 TFLOPS in FP64 Tensor Core, 19.5 TFLOPS in FP32, 156 TFLOPS (or 312 TFLOPS with sparsity) in Tensor Float 32 (TF32), 312 TFLOPS (or 624 TFLOPS with sparsity) in BFLOAT16 Tensor Core, and 312 TFLOPS (or 624 TFLOPS with sparsity) in FP16 Tensor Core.

- INT8 Tensor Core operation reaches 624 TOPS (or 1248 TOPS with sparsity).

- Both GPUs have 80GB of HBM2e GPU memory, boasting remarkable bandwidths of 1,935 GB/s and 2,039 GB/s, respectively.

- Their thermal design power (TDP) varies with the A100 80GB PCIe at 300W and the A100 80GB SXM at 400W.

- These GPUs support multi-instance GPU configurations with up to 7 MIGs at 10GB each.

- The A100 80GB PCIe comes in a dual-slot air-cooled or single-slot liquid-cooled form factor, while the A100 80GB SXM uses the SXM module form factor. Interconnect options are NVIDIA NVLink at 600 GB/s (via an NVLink Bridge on the PCIe card) and PCIe Gen4 at 64 GB/s.

- Server options encompass partner and NVIDIA-Certified Systems accommodating 1-8 GPUs for the A100 80GB PCIe and NVIDIA HGX A100-Partner and NVIDIA-Certified Systems with 4, 8, or 16 GPUs or NVIDIA DGX A100 with 8 GPUs for the A100 80GB SXM.

(Source: NVIDIA)

Google TPUs

- The series of Tensor Processing Units (TPUs), including TPUv1, TPUv2, TPUv3, TPUv4, and Edge v1, were introduced in 2016, 2017, 2018, 2021, and 2018, respectively.

- These iterations have evolved with different process nodes, starting from 28nm for TPUv1, progressing to 16nm for TPUv2 and TPUv3, and eventually to the advanced 7nm for TPUv4.

- Their die sizes have reduced, with TPUv4 having the smallest at less than 400mm². The on-chip memory also underwent enhancements, ranging from 28 MiB for TPUv1 to 144 MiB for TPUv4.

- The clock speeds of these TPUs have experienced adjustments over time, reaching up to 1050 MHz for TPUv4.

- Memory capacity has also increased, with TPUv4 housing 32 GiB of HBM memory and offering a memory bandwidth of 1200 GB/s.

- In terms of power consumption, TPUv4 holds the lowest TDP at 170W. These TPUs have shown remarkable improvements in processing capabilities, with TPUv4 leading with 275 TOPS (Tera Operations Per Second).

- Efficiency has also increased significantly, with TPUv4 achieving 1.62 TOPS/W, marking a remarkable advancement from TPUv1’s 0.31 TOPS/W.

(Source: Google Cloud, ServeTheHome)
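
The TPUv4 efficiency figure follows directly from the throughput and TDP quoted above. A minimal sketch of the arithmetic, with illustrative variable and function names:

```python
# Figures quoted in the list above.
TPU_V4_TOPS = 275.0
TPU_V4_TDP_WATTS = 170.0
TPU_V1_TOPS_PER_WATT = 0.31


def tops_per_watt(tops: float, watts: float) -> float:
    """Energy efficiency: tera operations per second delivered per watt of TDP."""
    return tops / watts


v4_efficiency = tops_per_watt(TPU_V4_TOPS, TPU_V4_TDP_WATTS)
gain_over_v1 = v4_efficiency / TPU_V1_TOPS_PER_WATT

print(f"TPUv4: {v4_efficiency:.2f} TOPS/W")
print(f"Improvement over TPUv1: {gain_over_v1:.1f}x")
```

Dividing 275 TOPS by 170 W reproduces the 1.62 TOPS/W quoted above, a bit more than five times the TPUv1 figure.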

AMD GPUs

Radeon Instinct MI100

- The Radeon Instinct MI100 has a graphics processor known as Arcturus, under the variant Arcturus XL, which operates on the CDNA 1.0 architecture.

- Produced by TSMC using a 7nm process, it contains 25,600 million transistors, a density of 34.1 million per mm² on a substantial 750 mm² die.

- The graphics card, part of the Radeon Instinct generation, was released on November 16th, 2020, and is listed as being in active production.

- Utilizing a PCIe 4.0 x16 bus interface, it sustains a base clock of 1000 MHz and a boost clock of 1502 MHz, with other clock speeds, including a SOC clock at 1091 MHz and a UVD clock at 1403 MHz.

- Memory attributes include 32 GB of HBM2 memory on a 4096-bit memory bus, providing a substantial bandwidth of 1,229 GB/s.

- The render configuration incorporates 7680 shading units, 480 TMUs, 64 ROPs, and 120 compute units.

- It has a 16 KB L1 cache per compute unit and an 8 MB L2 cache.

- Its theoretical performance is notable, with a pixel rate of 96.13 GPixel/s and a texture rate of 721.0 GTexel/s.

- The graphics card’s board design is dual-slot, 267 mm long and 111 mm wide.

- It operates at a TDP of 300 W, with a recommended PSU of 700 W. Notably, it has no display outputs and requires two 8-pin power connectors.

(Source: TechPowerUp)
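
Several of the MI100 figures above can be derived from one another, which is a useful consistency check. The sketch below relies on assumptions that are not stated in the source: 64 shading units per compute unit, 2 floating-point operations per shading unit per clock (one fused multiply-add), FP64 at half the FP32 rate, and an HBM2 data rate of 2.4 Gbps per pin (back-calculated from the quoted bandwidth). Treat it as an illustration of how such spec-sheet numbers relate, not as AMD’s own method.

```python
# Figures quoted in the list above.
COMPUTE_UNITS = 120
TMUS = 480
ROPS = 64
BOOST_CLOCK_GHZ = 1.502
TRANSISTORS_MILLIONS = 25_600
DIE_AREA_MM2 = 750
MEMORY_BUS_BITS = 4096

# Assumptions (not stated in the source).
SHADERS_PER_CU = 64        # shading units per compute unit
FLOPS_PER_CLOCK = 2        # one fused multiply-add counts as two operations
FP64_TO_FP32_RATIO = 0.5   # double precision at half the single-precision rate
HBM2_GBPS_PER_PIN = 2.4    # back-calculated from the quoted bandwidth

shading_units = COMPUTE_UNITS * SHADERS_PER_CU
pixel_rate_gpixel_s = ROPS * BOOST_CLOCK_GHZ
texture_rate_gtexel_s = TMUS * BOOST_CLOCK_GHZ
density_m_per_mm2 = TRANSISTORS_MILLIONS / DIE_AREA_MM2
fp32_tflops = shading_units * FLOPS_PER_CLOCK * BOOST_CLOCK_GHZ / 1000
fp64_tflops = fp32_tflops * FP64_TO_FP32_RATIO
bandwidth_gb_s = MEMORY_BUS_BITS / 8 * HBM2_GBPS_PER_PIN

print(f"Shading units: {shading_units}")
print(f"Pixel rate: {pixel_rate_gpixel_s:.2f} GPixel/s")
print(f"Texture rate: {texture_rate_gtexel_s:.1f} GTexel/s")
print(f"Transistor density: {density_m_per_mm2:.1f} M/mm^2")
print(f"Peak FP64: {fp64_tflops:.1f} TFLOPS")
print(f"Memory bandwidth: {bandwidth_gb_s:.0f} GB/s")
```

Under these assumptions the derived values line up with the quoted specifications, including the roughly 11.5 TFLOPS of double-precision performance cited earlier in the article.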

Venture Capital Spending On Semiconductors, AI Chips Statistics

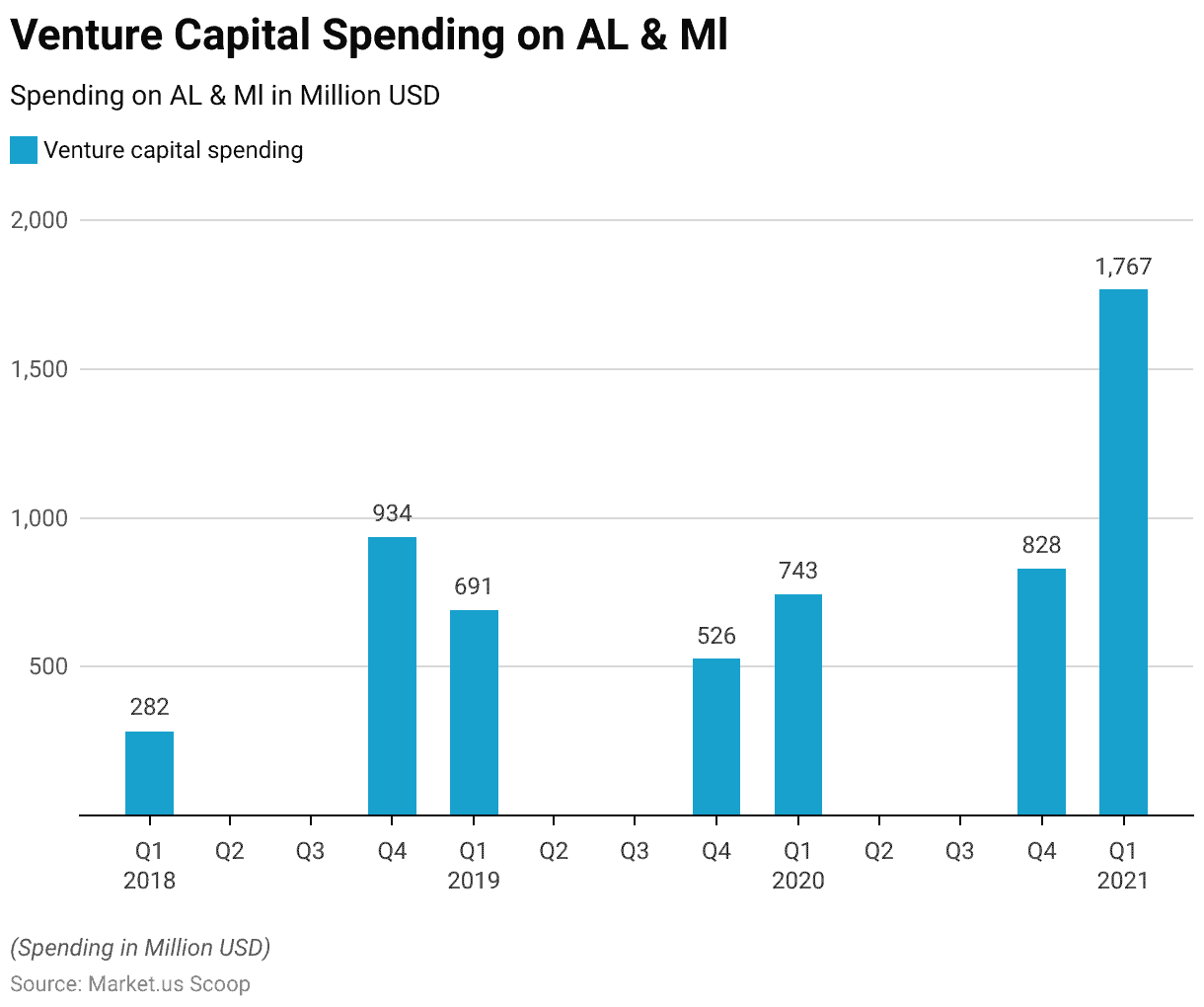

- Venture capital spending in the AI sector followed a dynamic trajectory over the years. In the first quarter of 2018, the investment amounted to $282 million, marking the inception of this financial trend.

- Subsequently, the fourth quarter of 2018 witnessed a substantial leap, reaching $934 million, signifying a significant uptick in investor interest.

- The first quarter of 2019 recorded a considerable investment of $691 million, sustaining the sector’s growth momentum.

- As the year progressed, the fourth quarter of 2019 saw a decline to $526 million, reflecting some fluctuations in the investment landscape.

- The first quarter of 2020 regained momentum with a robust investment of $743 million, suggesting the sector’s resilience in the face of economic challenges.

- Closing the year on a positive note, the fourth quarter of 2020 reported an impressive $828 million investment, showcasing a rebound in investor confidence.

- The first quarter of 2021 marked a peak, with venture capital spending soaring to $1,767 million, underscoring the industry’s rapid expansion and enduring appeal to investors.

(Source: Statista)
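
The swings between the reported quarters are easier to judge as percentage changes. The sketch below uses only the figures listed above. Note that the data points are not consecutive quarters, so each change spans the gap between two reported quarters; the function name is illustrative.

```python
# Venture capital spending in USD millions, as listed above.
QUARTERLY_VC_USD_MILLIONS = [
    ("Q1 2018", 282),
    ("Q4 2018", 934),
    ("Q1 2019", 691),
    ("Q4 2019", 526),
    ("Q1 2020", 743),
    ("Q4 2020", 828),
    ("Q1 2021", 1767),
]


def percent_changes(series):
    """Percentage change from each reported quarter to the next reported one."""
    changes = []
    for (_, previous), (label, current) in zip(series, series[1:]):
        changes.append((label, (current - previous) / previous * 100))
    return changes


changes = percent_changes(QUARTERLY_VC_USD_MILLIONS)
peak_quarter, peak_value = max(QUARTERLY_VC_USD_MILLIONS, key=lambda item: item[1])
overall_multiple = QUARTERLY_VC_USD_MILLIONS[-1][1] / QUARTERLY_VC_USD_MILLIONS[0][1]

for label, change in changes:
    print(f"{label}: {change:+.1f}% vs previous reported quarter")
print(f"Peak: {peak_quarter} at ${peak_value:,} million")
print(f"Q1 2021 vs Q1 2018: {overall_multiple:.2f}x")
```

On these figures, spending in the first quarter of 2021 was more than six times the level of the first quarter of 2018, with two declines along the way in 2019.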

Quantum Computing’s Impact on AI Chips Statistics

- Based on a survey conducted in 2022, the global implementation of quantum computing surpassed the adoption rate of artificial intelligence (AI).

- Almost half of the participants (49%) said that their adoption of quantum computing was more rapid than their adoption of AI, while only 17% reported a slower progression.

- This trend was especially pronounced in North America, where 62% of those surveyed indicated a quicker pace of adopting quantum computing than AI.

(Source: Statista)

Recent Developments

Acquisitions and Mergers:

Nvidia and Arm Holdings: Nvidia announced an agreement to acquire Arm for $40 billion in September 2020, aiming to enhance its AI capabilities by leveraging Arm’s processor designs, which are widely used in various AI applications. The deal was terminated in February 2022 following regulatory challenges.

AMD acquires Xilinx: In February 2022, AMD completed its acquisition of Xilinx, a leader in adaptive and intelligent computing, in an all-stock deal valued at $35 billion when it was announced. The acquisition strengthens AMD’s position in the AI chip market by integrating Xilinx’s FPGA technology with AMD’s processors.

New Product Launches:

Intel’s Ponte Vecchio: Intel launched its Ponte Vecchio AI chip in early 2024. This high-performance chip is designed for supercomputing and AI workloads, offering unprecedented speed and efficiency.

Google’s TPU v5: Google unveiled its Tensor Processing Unit (TPU) v5 in mid-2023, boasting significant improvements in processing power and energy efficiency, specifically designed for large-scale AI and machine learning applications.

Funding:

Graphcore secures $250 million: In 2023, AI chip startup Graphcore raised $250 million in a Series E funding round to expand its AI chip production and enhance its IPU (Intelligence Processing Unit) technology.

SambaNova Systems raises $200 million: SambaNova Systems, an AI hardware and software startup, secured $200 million in funding in early 2024 to accelerate the development and deployment of its AI chip solutions.

Technological Advancements:

Quantum AI Chips: Research and development in quantum AI chips have advanced, with companies like IBM and Google exploring quantum computing to achieve breakthroughs in AI processing capabilities.

Neuromorphic Chips: Intel and IBM are leading the development of neuromorphic chips, which mimic the human brain’s neural networks to achieve more efficient AI processing and lower power consumption.

Market Dynamics:

Growing Demand: The global AI chip market is expected to grow at a CAGR of 25% from 2023 to 2028, driven by increasing adoption in data centers, autonomous vehicles, and consumer electronics.

Competitive Landscape: Nvidia, Intel, AMD, and Google are leading the AI chip market, with significant R&D investments to maintain their competitive edge.

Regulatory and Strategic Developments:

US-China Trade Policies: Ongoing trade tensions between the US and China have led to regulatory changes impacting the AI chip market. Export restrictions on advanced AI chips to China are influencing global supply chains and market dynamics.

EU’s AI Regulation Framework: The European Union is developing a comprehensive regulatory framework for AI technologies, including AI chips, to ensure ethical AI development and deployment across member states.

Research and Development:

AI Chip Architectures: Ongoing research into new AI chip architectures, such as tensor processing units (TPUs) and graphics processing units (GPUs), aims to enhance performance and efficiency in AI applications.

Collaborative AI Projects: Leading tech companies and academic institutions are collaborating on AI chip research projects to explore innovative applications and improve existing technologies.

Conclusion

AI Chips Statistics – The field of AI chips has witnessed remarkable growth and evolution. In the dynamic landscape of AI chips, market growth has been evident, led by key players like Nvidia, Intel, Google, AMD, and IBM.

Nvidia’s GPU legacy, Intel’s technological strides, Google’s TPU offerings, AMD’s diverse products, and IBM’s neuromorphic chip define the industry. Power efficiency and memory types have crucial roles in shaping AI systems.

The competition between architectures, like Nvidia’s Tensor Cores and AMD’s CDNA, drives performance enhancements.

Venture capital trends and quantum computing adoption underscore industry dynamism. As AI advances, these chips remain pivotal, fueling innovation across sectors.

FAQs

AI chips, also known as AI accelerators or AI processors, are specialized hardware components designed to perform tasks related to artificial intelligence, machine learning, and deep learning more efficiently and quickly than general-purpose processors. They are optimized for the specific computational demands of AI workloads.

The AI chip market has experienced substantial growth in recent years. Market size data indicates a consistent upward trend, with significant increases projected. Leading companies like Nvidia, Intel, and AMD have pivotal roles in driving this expansion through innovative chip designs.

Prominent companies in the AI chip market include Nvidia, known for its GPUs and AI-specific designs; Intel, a major player with a history of technology development; Google, which offers products like TPUs for machine learning acceleration; AMD, which provides CPUs, GPUs, and AI accelerators; and IBM, with its TrueNorth AI neuromorphic chip.

Memory types like on-chip memory, high-bandwidth memory (HBM), and graphics DDR SDRAM (GDDR SDRAM) are crucial for AI systems. On-chip memory provides high bandwidth and efficiency, while HBM offers substantial power and area advantages over GDDR, contributing to improved performance in AI applications.

Different AI chips exhibit varying power efficiency and performance characteristics. Performance is often measured in floating-point operations per second (FLOPS) or tera operations per second (TOPS). The performance-per-watt ratio (for example, TOPS/W) is an important metric that reflects the energy efficiency of AI chips, where higher values indicate better performance per watt.