Table of Contents

- Introduction

- Editor’s Choice

- Volume of Data/Information Created, Captured, Copied, and Consumed Worldwide

- Data Processing and BI Primary Environment for Workload Worldwide

- Unique and Replicated Data in The Global Datasphere By Data Lake Statistics

- Data Lake Statistics By Main Network Data Sources

- Global Big Data Market Size By Data Lake Statistics

- Data Lake Market Statistics

- Data Lake Statistics By Data Rates

- Usage of Data Lake Building Tools Globally Statistics

- Data Lake Statistics By Data Storage Supply and Demand Worldwide

- Leading Countries by Number of Data Centers By Data Lake Statistics

- Data Center Storage Capacity Worldwide

- Secondary Data Storage Locations at Organizations

- Cloud Storage of Corporate Data in Organizations Worldwide

- Enterprise Data Storage and Management

- Location of Sensitive Data in Organizations

- Data Storage Devices Shipments Statistics

- Data Processing and Hosting Businesses

- Cost Metrics

- Key Spending Statistics

- Issues and Challenges

- Regulations for Data Lake Statistics

- Innovations in Data Lake Statistics

- Recent Developments

- Conclusion

- FAQs

Introduction

Data Lake Statistics: A data lake is a centralized repository designed to store vast amounts of structured, semi-structured, and unstructured data in its raw form.

Unlike traditional data warehouses, which require a predefined schema before data is loaded, data lakes use a schema-on-read approach: structure is applied only when the data is queried, which allows flexible querying and analysis.

They leverage scalable storage solutions, often cloud-based, to handle large volumes of data cost-effectively.

Further, data lakes support diverse processing frameworks and analytics tools, enabling comprehensive data analysis.

However, they require effective data management and governance to ensure data quality, security, and performance, addressing challenges related to data variety and volume.
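To make schema-on-read concrete, here is a minimal Python sketch. The records, field names, and the `read_with_schema` helper are hypothetical illustrations, not part of any particular data lake product. Raw JSON lines are stored untouched, and a schema is applied only at the moment they are read.

```python
import json

# Raw records land in the lake exactly as produced; no schema is enforced on write.
RAW_RECORDS = [
    '{"user": "a1", "amount": "19.99", "ts": "2023-05-01"}',
    '{"user": "b2", "amount": 5, "country": "DE"}',
    '{"user": "c3"}',
]

# A schema chosen by the reader at query time: field name -> converter.
ORDER_SCHEMA = {"user": str, "amount": float}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project them onto `schema` at read time.

    Missing fields become None; fields not in the schema are ignored.
    """
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for name, convert in schema.items():
            value = record.get(name)
            row[name] = convert(value) if value is not None else None
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in read_with_schema(RAW_RECORDS, ORDER_SCHEMA):
        print(row)
```

A different consumer could apply another schema (for example, one that includes `country`) to the same raw records without rewriting them, which is the flexibility the schema-on-read approach is meant to provide.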

Editor’s Choice

- The volume of data created, captured, copied, and consumed worldwide is projected to rise to 181 zettabytes by 2025.

- In 2021, security device logs were the most important network data source worldwide, identified by 30% of respondents as a critical source of network data.

- The global data lake market revenue reached USD 16.6 billion in 2023.

- By 2032, the global data lake market is expected to reach USD 90.0 billion, with solutions accounting for USD 55.17 billion and services for USD 34.83 billion.

- The global data lake market is segmented by deployment mode into cloud-based and on-premise solutions. According to the latest available data, cloud-based deployment holds the majority of the market, at 58.6%.

- In 2023, the global usage of tools for building data lakes reflects a varied landscape. Traditional relational databases remain the most widely used, with 22% of respondents indicating their preference for this tool.

- In 2018, the majority of enterprise data storage and management was conducted on on-premise systems, accounting for 71% of the total.

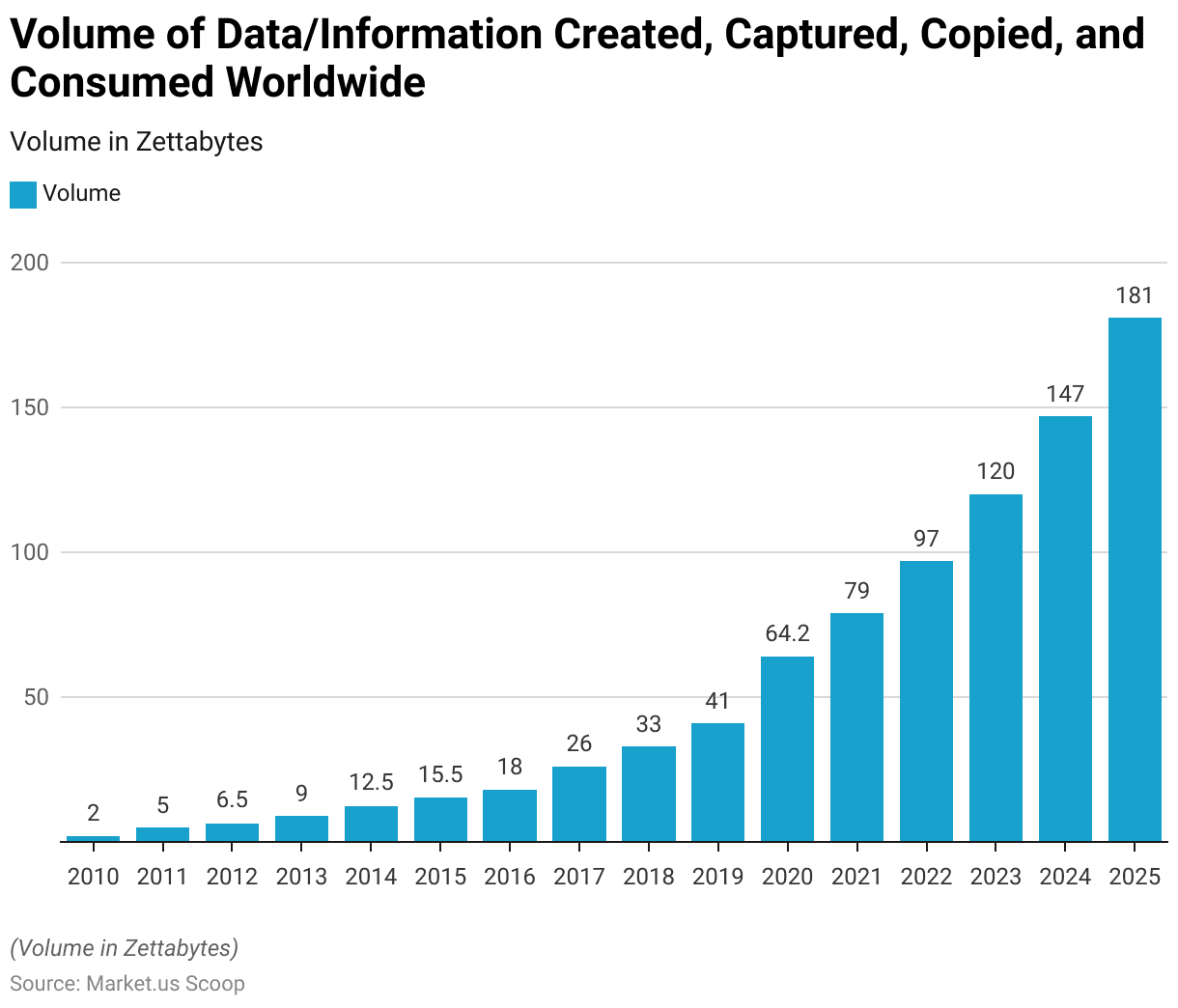

Volume of Data/Information Created, Captured, Copied, and Consumed Worldwide

- The volume of data created, captured, copied, and consumed worldwide grew significantly from 2010 to 2020, with further increases projected through 2025.

- In 2010, the global data volume was 2 zettabytes; by 2011, it had more than doubled to 5 zettabytes.

- This upward trajectory continued, reaching 6.5 zettabytes in 2012, 9 zettabytes in 2013, and 12.5 zettabytes in 2014.

- By 2015, the volume had risen to 15.5 zettabytes, followed by 18 zettabytes in 2016 and 26 zettabytes in 2017.

- The expansion persisted, with data volumes reaching 33 zettabytes in 2018 and 41 zettabytes in 2019.

- A notable surge occurred in 2020, with global data volumes spiking to 64.2 zettabytes.

- Projections indicate further rapid growth, with volumes expected to reach 79 zettabytes in 2021, 97 zettabytes in 2022, and 120 zettabytes by 2023.

- This trend is forecast to continue, with data volumes projected to rise to 147 zettabytes in 2024 and 181 zettabytes by 2025, reflecting the accelerating pace of data generation and consumption globally.

(Source: Statista)
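The year-over-year growth behind these figures can be checked with a short Python sketch. It divides each year's volume by the previous year's, using the values listed above (2021 onward are projections); the helper name `yoy_growth` is illustrative only.

```python
# Global data volume in zettabytes, as listed above (2021-2025 are projections).
DATA_VOLUME_ZB = {
    2010: 2, 2011: 5, 2012: 6.5, 2013: 9, 2014: 12.5, 2015: 15.5,
    2016: 18, 2017: 26, 2018: 33, 2019: 41, 2020: 64.2,
    2021: 79, 2022: 97, 2023: 120, 2024: 147, 2025: 181,
}


def yoy_growth(series):
    """Return {year: fractional growth versus the previous year}."""
    years = sorted(series)
    return {
        year: series[year] / series[prev] - 1
        for prev, year in zip(years, years[1:])
    }


growth = yoy_growth(DATA_VOLUME_ZB)
for year, rate in growth.items():
    print(f"{year}: {rate:+.1%}")
```

Apart from the jump from 2010 to 2011, the 2020 increase of roughly 57% is the largest in the series, while the projected years each grow by about 22% to 24%.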

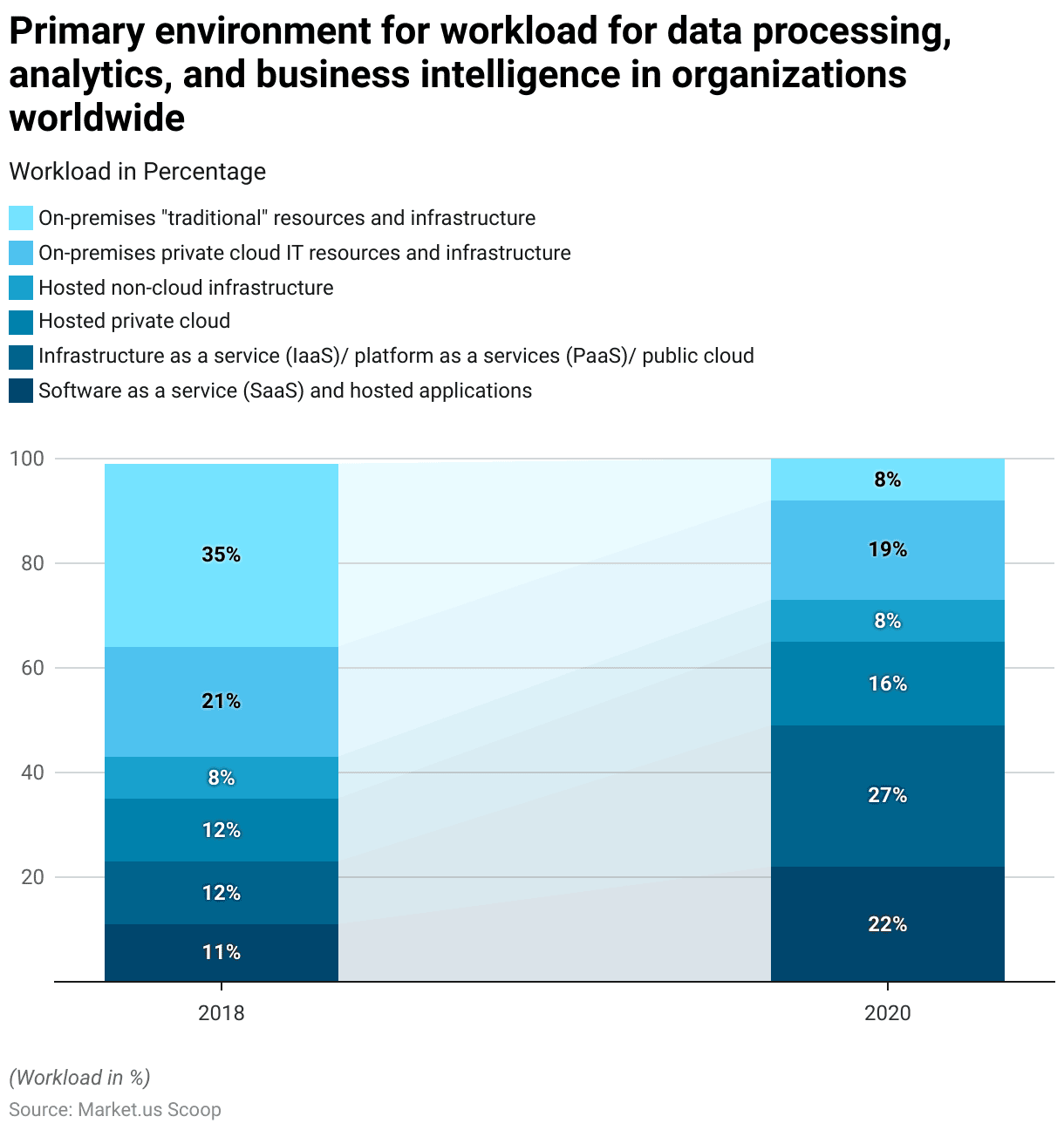

Data Processing and BI Primary Environment for Workload Worldwide

- Between 2018 and 2020, the primary environment for data processing, analytics, and business intelligence workloads in organizations worldwide shifted significantly.

- In 2018, on-premises “traditional” resources and infrastructure were the most common environment, used by 35% of organizations.

- However, by 2020, this figure dropped sharply to just 8%, reflecting a major move away from traditional on-premises solutions.

- On-premises private cloud IT resources and infrastructure also declined slightly, from 21% in 2018 to 19% in 2020. In contrast, adoption of cloud-based solutions increased significantly.

- The use of software as a service (SaaS) and hosted applications doubled, rising from 11% in 2018 to 22% in 2020.

- Similarly, the use of infrastructure as a service (IaaS), platform as a service (PaaS), and public cloud solutions grew from 12% in 2018 to 27% in 2020, reflecting the increasing reliance on cloud platforms for data workloads.

- Hosted private cloud usage also rose, from 12% in 2018 to 16% in 2020, while hosted non-cloud infrastructure remained constant at 8% over the two years.

- These trends demonstrate the ongoing transition from traditional on-premises infrastructure to cloud-based environments as organizations seek more scalable, flexible, and cost-effective solutions for their data processing needs.

(Source: Statista)
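Because these figures are shares of organizations, the shifts are best read as percentage-point changes rather than percent changes. The Python sketch below computes them from the values listed above; the shortened environment labels and the `point_changes` helper are illustrative only.

```python
# Share of organizations (%) by primary workload environment: (2018, 2020).
SHARES = {
    "On-premises traditional": (35, 8),
    "On-premises private cloud": (21, 19),
    "SaaS / hosted applications": (11, 22),
    "IaaS / PaaS / public cloud": (12, 27),
    "Hosted private cloud": (12, 16),
    "Hosted non-cloud": (8, 8),
}


def point_changes(shares):
    """Return {environment: 2020 share minus 2018 share} in percentage points."""
    return {env: after - before for env, (before, after) in shares.items()}


changes = point_changes(SHARES)
for env, delta in sorted(changes.items(), key=lambda kv: kv[1]):
    print(f"{env:<28} {delta:+d} pp")
```

The traditional on-premises share falls by 27 percentage points, while IaaS/PaaS/public cloud gains 15 and SaaS gains 11. The 2018 shares add up to 99% rather than 100%, presumably because of rounding in the source.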

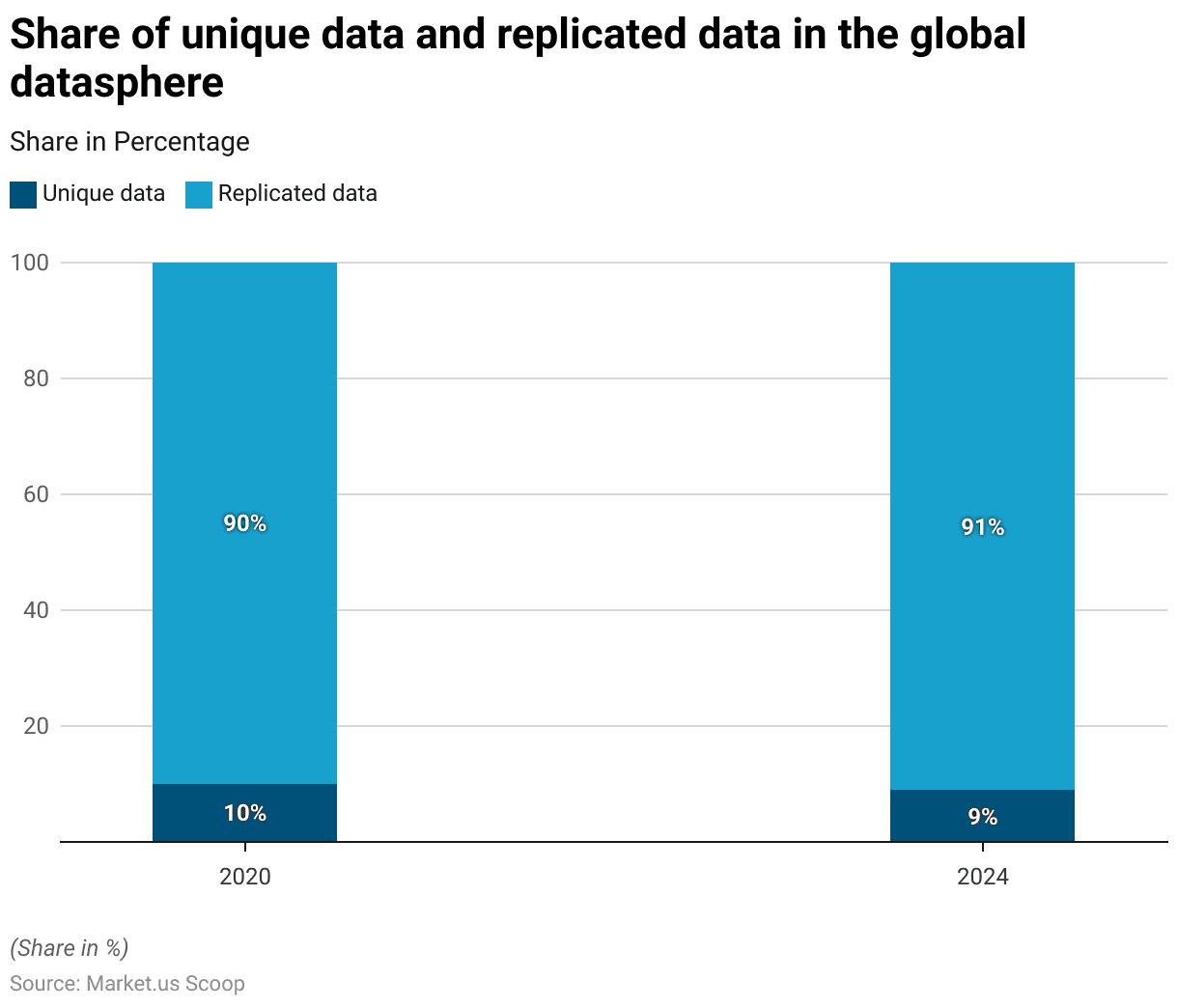

Unique and Replicated Data in The Global Datasphere By Data Lake Statistics

- In the global datasphere, the share of unique data compared to replicated data is projected to shift slightly between 2020 and 2024.

- In 2020, unique data accounted for 10% of the total data, while replicated data made up 90%.

- By 2024, the share of unique data is expected to decrease to 9%, with replicated data increasing to 91%.

- This trend reflects the growing importance of data replication for backups, redundancy, and distributed computing as organizations increasingly prioritize data availability and reliability.

(Source: Statista)

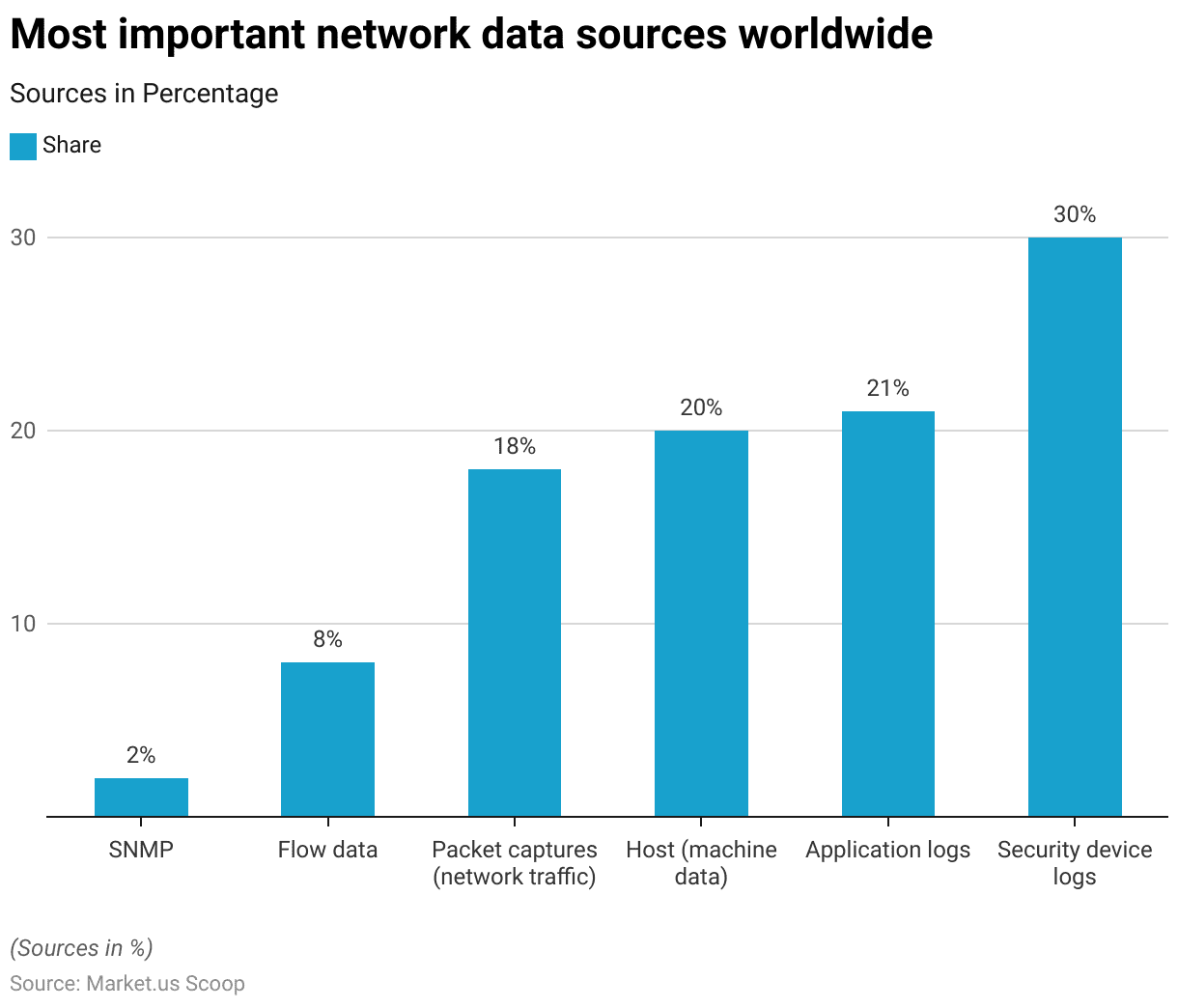

Data Lake Statistics By Main Network Data Sources

- In 2021, security device logs were the most important network data source worldwide, identified by 30% of respondents as a critical source of network data.

- Application logs followed, being cited by 21% of respondents, while host or machine data was important for 20%.

- Packet captures, representing network traffic, accounted for 18% of responses.

- Flow data was considered a key source by 8% of respondents, and Simple Network Management Protocol (SNMP) by only 2%, reflecting its relatively low importance compared with other data sources in network monitoring and management.

(Source: Statista)

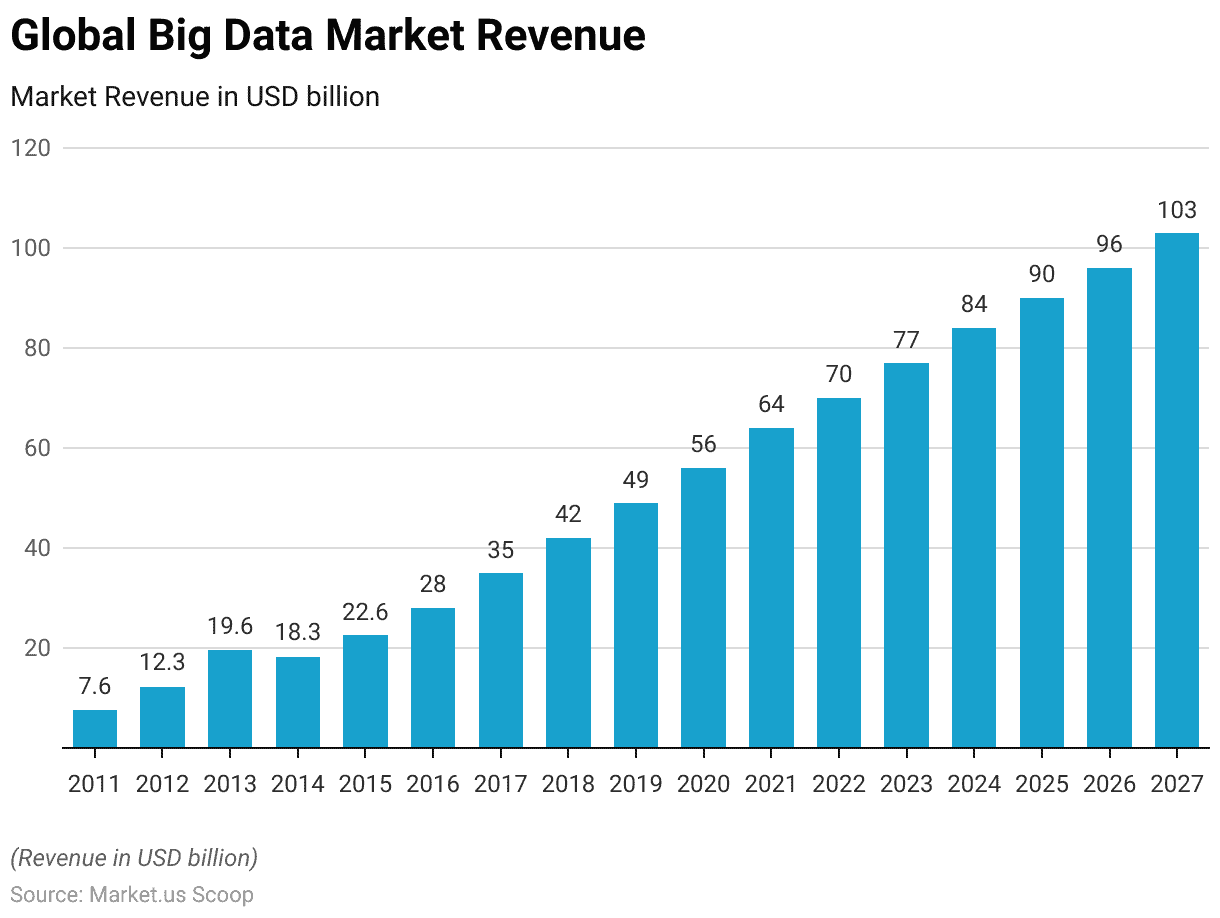

Global Big Data Market Size By Data Lake Statistics

- The global big data market grew substantially from 2011 to 2023 and is forecast to keep growing through 2027.

- In 2011, market revenue stood at USD 7.6 billion, which increased to USD 12.25 billion in 2012 and surged to USD 19.6 billion in 2013.

- After a slight decline to USD 18.3 billion in 2014, the market recovered and reached USD 22.6 billion in 2015.

- The upward trajectory continued in subsequent years, with the market expanding to USD 28 billion in 2016, USD 35 billion in 2017, and USD 42 billion in 2018.

- By 2019, the global big data market revenue had risen to USD 49 billion, and it continued to grow steadily, reaching USD 56 billion in 2020 and USD 64 billion in 2021.

- The market further expanded to USD 70 billion in 2022 and USD 77 billion in 2023.

- Projections indicate that this growth will continue, with the market expected to reach USD 84 billion in 2024, USD 90 billion in 2025, and USD 96 billion in 2026.

- By 2027, the global big data market is forecast to reach USD 103 billion, reflecting the increasing demand for data-driven solutions across industries.

(Source: Statista)

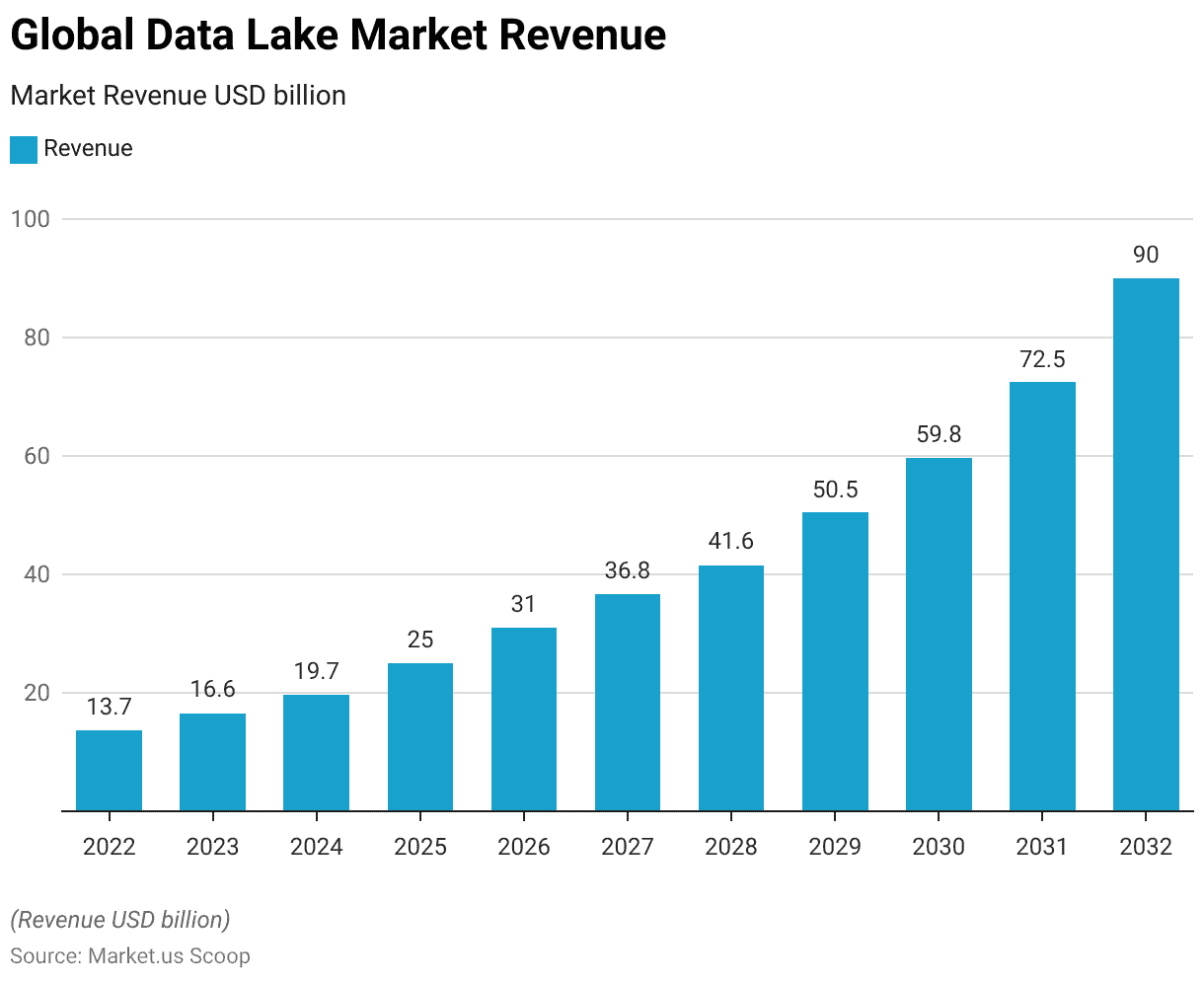

Data Lake Market Statistics

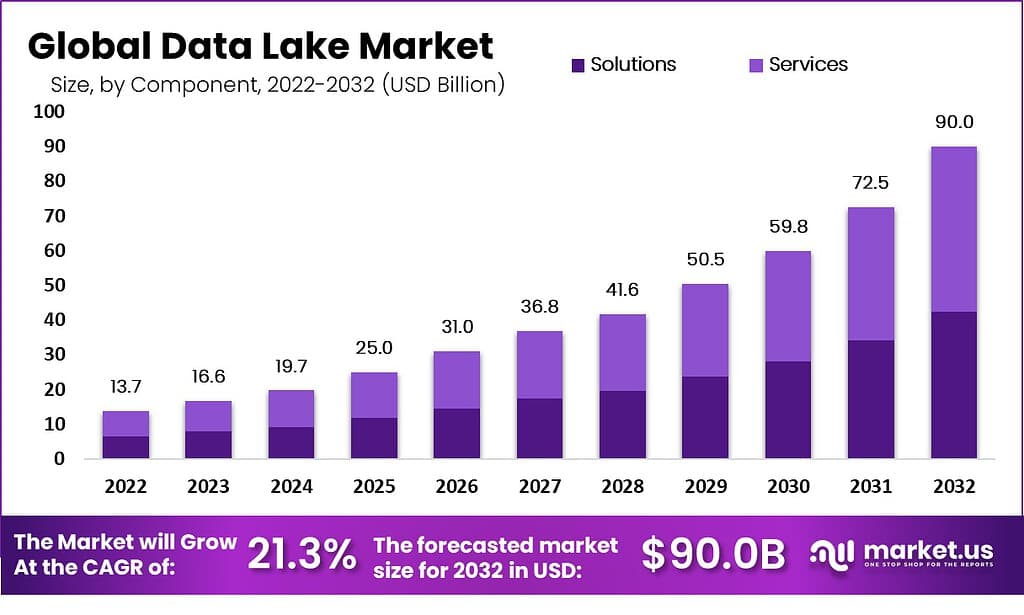

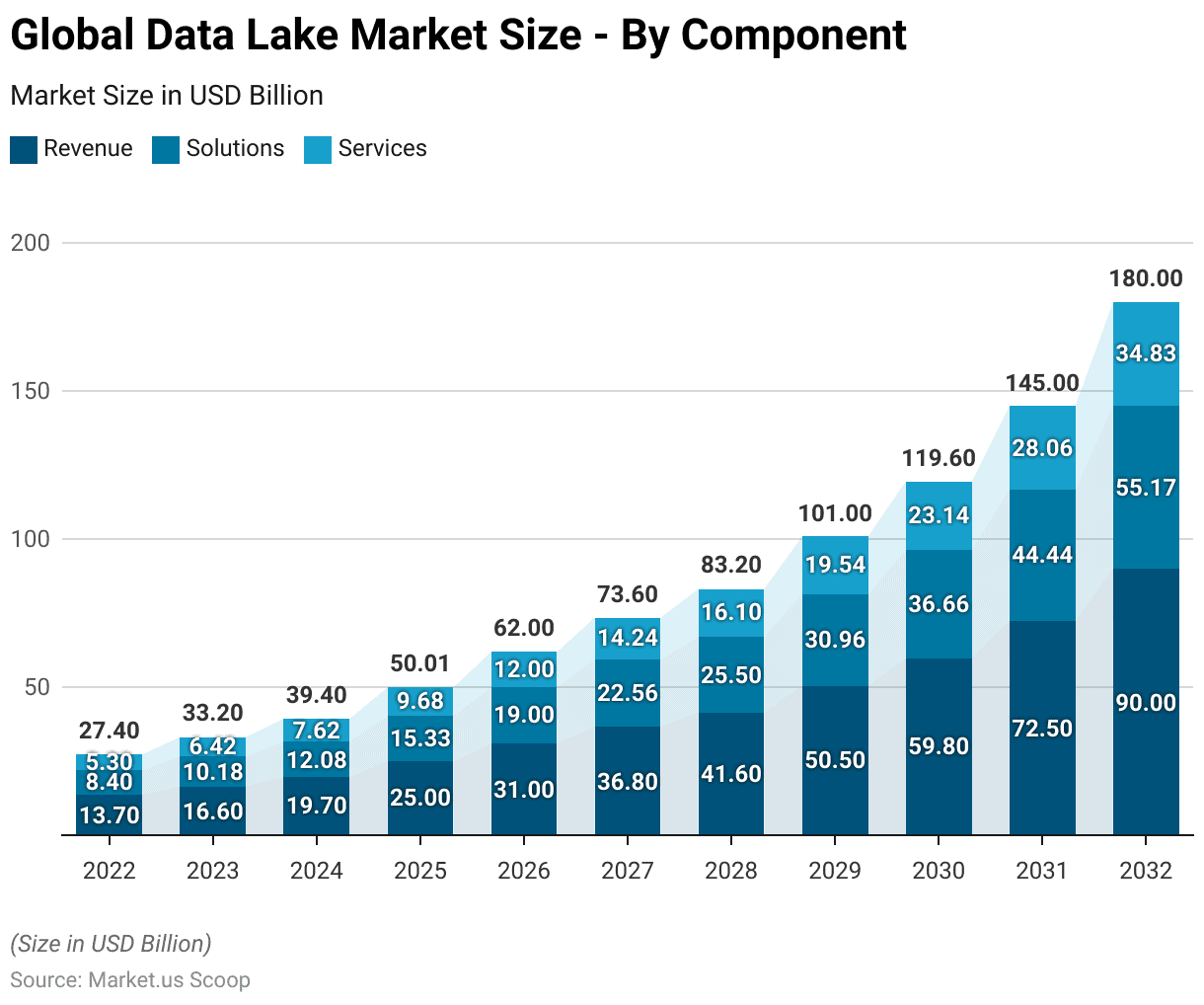

Global Data Lake Market Size Statistics

- The global data lake market is projected to grow significantly over the next decade, at a compound annual growth rate (CAGR) of 21.3%.

- In 2022, the market revenue was valued at USD 13.7 billion and is expected to rise to USD 16.6 billion in 2023.

- This upward trend continues, with the market estimated to reach USD 19.7 billion in 2024 and USD 25.0 billion in 2025.

- By 2026, the market is forecast to generate USD 31.0 billion, further increasing to USD 36.8 billion in 2027 and USD 41.6 billion in 2028.

- Substantial growth is expected after that, with revenues reaching USD 50.5 billion in 2029 and USD 59.8 billion in 2030.

- The market is anticipated to reach USD 72.5 billion in 2031 and USD 90.0 billion in 2032.

- This robust growth highlights the increasing demand for and adoption of data lakes globally, driven by factors such as the expansion of big data and the growing need for advanced data analytics.

(Source: market.us)
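The 21.3% figure is the CAGR reported by the source. As a rough cross-check, a CAGR between two years can be computed as (end value / start value)^(1 / number of years) - 1. The Python sketch below applies that formula to the rounded market sizes listed above; it is only an illustration, and the source may use a different base year or unrounded figures.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in USD billion, taken from the yearly figures above.
MARKET_USD_BN = {2022: 13.7, 2023: 16.6, 2032: 90.0}

rate_2023_2032 = cagr(MARKET_USD_BN[2023], MARKET_USD_BN[2032], 2032 - 2023)
rate_2022_2032 = cagr(MARKET_USD_BN[2022], MARKET_USD_BN[2032], 2032 - 2022)

print(f"Implied CAGR 2023-2032: {rate_2023_2032:.1%}")
print(f"Implied CAGR 2022-2032: {rate_2022_2032:.1%}")
```

Both periods give an implied CAGR of roughly 20.7%, slightly below the quoted 21.3%; the difference may come from rounding or from the way the source defines its forecast period.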

Global Data Lake Market Size – By Component Statistics

2022-2027

- The global data lake market is set to grow significantly from 2022 to 2027, driven by both solutions and services components.

- In 2022, total market revenue stood at USD 13.7 billion, with solutions accounting for USD 8.40 billion and services contributing USD 5.30 billion.

- By 2023, the market is projected to reach USD 16.6 billion, with solutions and services generating USD 10.18 billion and USD 6.42 billion, respectively.

- This trend is expected to continue, with the market growing to USD 19.7 billion in 2024 (USD 12.08 billion from solutions and USD 7.62 billion from services).

- By 2025, the market is forecast to rise to USD 25.0 billion, with solutions at USD 15.33 billion and services at USD 9.68 billion.

- Further growth is projected, with the market expected to reach USD 31.0 billion in 2026: USD 19.0 billion from solutions and USD 12.0 billion from services.

- By 2027, total revenue is anticipated to reach USD 36.8 billion, with USD 22.56 billion from solutions and USD 14.24 billion from services.

2028-2032

- In 2028, the market is projected to reach USD 41.6 billion, driven by USD 25.50 billion in solutions and USD 16.10 billion in services.

- The data lake market is expected to grow further, reaching USD 50.5 billion in 2029 (USD 30.96 billion from solutions and USD 19.54 billion from services) and USD 59.8 billion in 2030 (USD 36.66 billion from solutions and USD 23.14 billion from services).

- By 2031, the market is forecast to reach USD 72.5 billion, with solutions contributing USD 44.44 billion and services USD 28.06 billion.

- Ultimately, by 2032, the global data lake market is expected to reach USD 90.0 billion, with solutions accounting for USD 55.17 billion and services for USD 34.83 billion.

- This strong growth highlights the increasing demand for data lake solutions and services, driven by the need for advanced data management and analytics capabilities across industries.

(Source: market.us)
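The component figures can be sanity-checked in a few lines: solutions plus services should equal each year's total, and the solutions share shows how the split evolves. This Python sketch uses only the values listed above; the `check_breakdown` helper and its USD 0.05 billion rounding tolerance are illustrative assumptions.

```python
# (total, solutions, services) in USD billion, from the component breakdown above.
BREAKDOWN = {
    2022: (13.7, 8.40, 5.30), 2023: (16.6, 10.18, 6.42),
    2024: (19.7, 12.08, 7.62), 2025: (25.0, 15.33, 9.68),
    2026: (31.0, 19.0, 12.0), 2027: (36.8, 22.56, 14.24),
    2028: (41.6, 25.50, 16.10), 2029: (50.5, 30.96, 19.54),
    2030: (59.8, 36.66, 23.14), 2031: (72.5, 44.44, 28.06),
    2032: (90.0, 55.17, 34.83),
}


def check_breakdown(rows, tolerance=0.05):
    """Yield (year, solutions share of total, components add up to total?)."""
    for year, (total, solutions, services) in sorted(rows.items()):
        adds_up = abs((solutions + services) - total) <= tolerance
        yield year, solutions / total, adds_up


results = list(check_breakdown(BREAKDOWN))
for year, share, ok in results:
    print(f"{year}: solutions {share:.1%} of total, components add up: {ok}")
```

In every year the two components match the stated total (2025 is off by USD 0.01 billion, consistent with rounding), and solutions hold a nearly constant share of about 61.3%. This suggests the forecast assumes a roughly fixed split between solutions and services.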

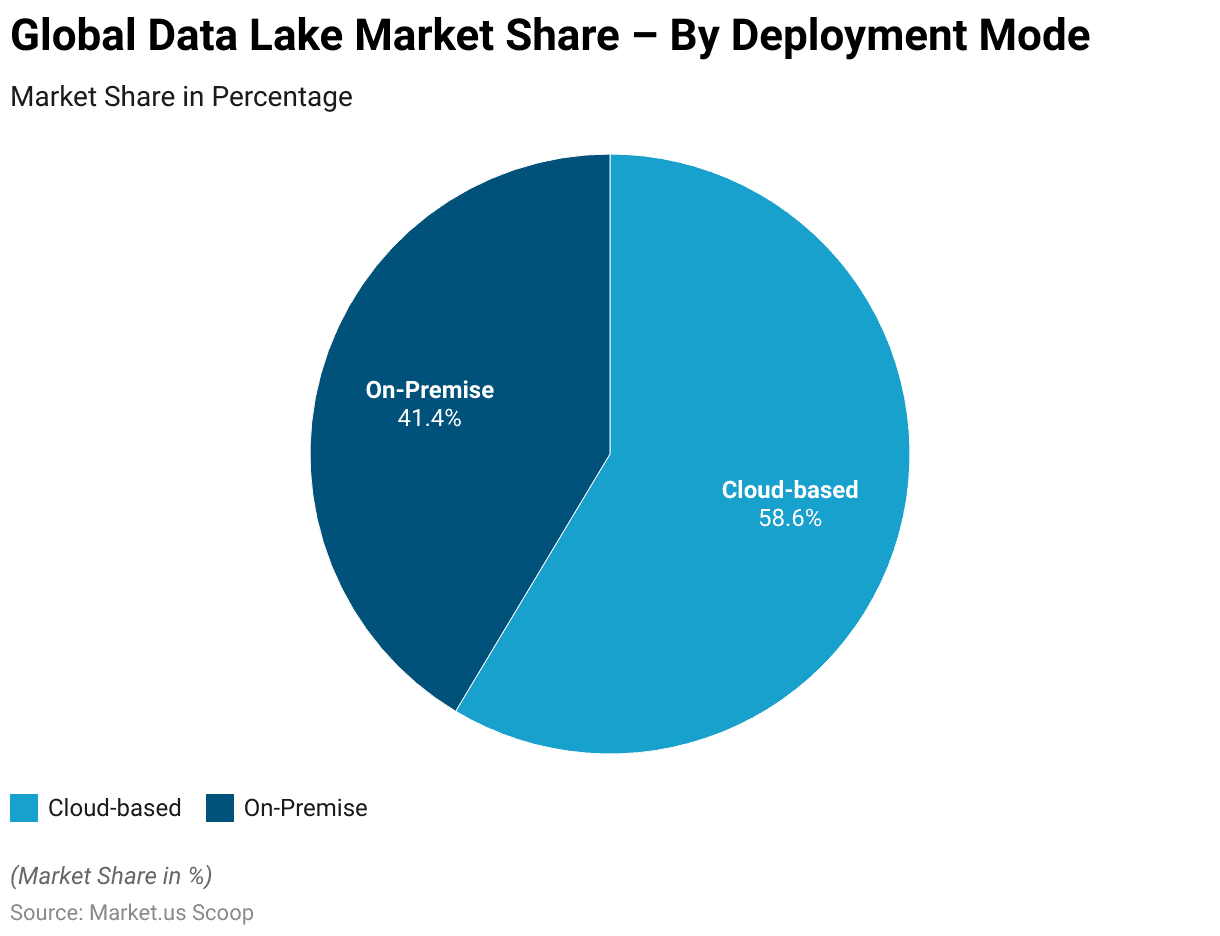

Global Data Lake Market Share – By Deployment Mode Statistics

- The global data lake market is segmented by deployment mode into cloud-based and on-premise solutions.

- According to the latest available data, cloud-based deployment holds the majority of the market, at 58.6%.

- In contrast, on-premise deployment constitutes 41.4% of the market share.

- This indicates a growing preference for cloud-based solutions due to their scalability, flexibility, and lower infrastructure costs.

- Nevertheless, on-premise solutions still hold a significant share, particularly in industries requiring enhanced control over data security and compliance.

(Source: market.us)

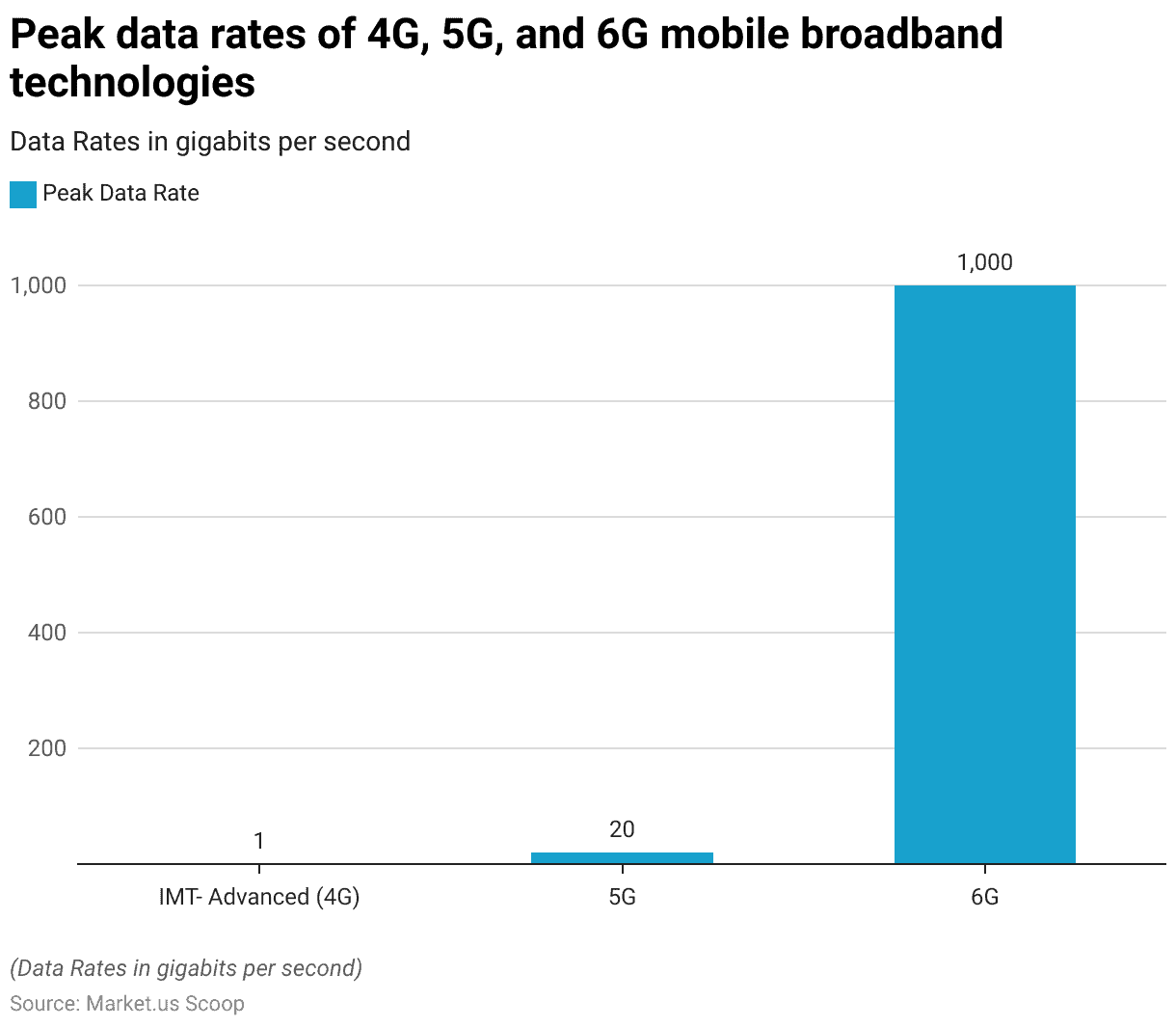

Data Lake Statistics By Data Rates

- The peak data rates of mobile broadband technologies have increased significantly with each generation.

- IMT-Advanced (4G) technology offers a peak data rate of 1 gigabit per second.

- With the introduction of 5G, the peak rate increased substantially to 20 gigabits per second, enabling faster and more efficient data transmission.

- Looking ahead, 6G technology is expected to deliver a peak data rate of 1,000 gigabits per second (1 terabit per second), a major leap in mobile broadband capabilities that could enable advanced applications in various sectors.

(Source: Statista)
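To put these peak rates in perspective, the Python sketch below computes how long a hypothetical 1 TB transfer would take at each rate. It assumes decimal units (1 TB = 8,000 gigabits) and an ideal link running at its full peak rate with no protocol overhead; real-world throughput is typically far lower.

```python
# Peak data rates in gigabits per second, from the figures above.
PEAK_RATES_GBPS = {"4G (IMT-Advanced)": 1, "5G": 20, "6G (expected)": 1_000}


def transfer_seconds(size_terabytes: float, rate_gbps: float) -> float:
    """Ideal transfer time: convert decimal terabytes to gigabits, divide by rate."""
    size_gigabits = size_terabytes * 1_000 * 8  # 1 TB = 1,000 GB = 8,000 Gb
    return size_gigabits / rate_gbps


times = {name: transfer_seconds(1, rate) for name, rate in PEAK_RATES_GBPS.items()}
for name, seconds in times.items():
    print(f"{name}: {seconds:,.0f} s for 1 TB")
```

Under these idealized assumptions, 1 TB takes 8,000 seconds (over two hours) at 4G's peak rate, 400 seconds at 5G's, and 8 seconds at the expected 6G rate.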

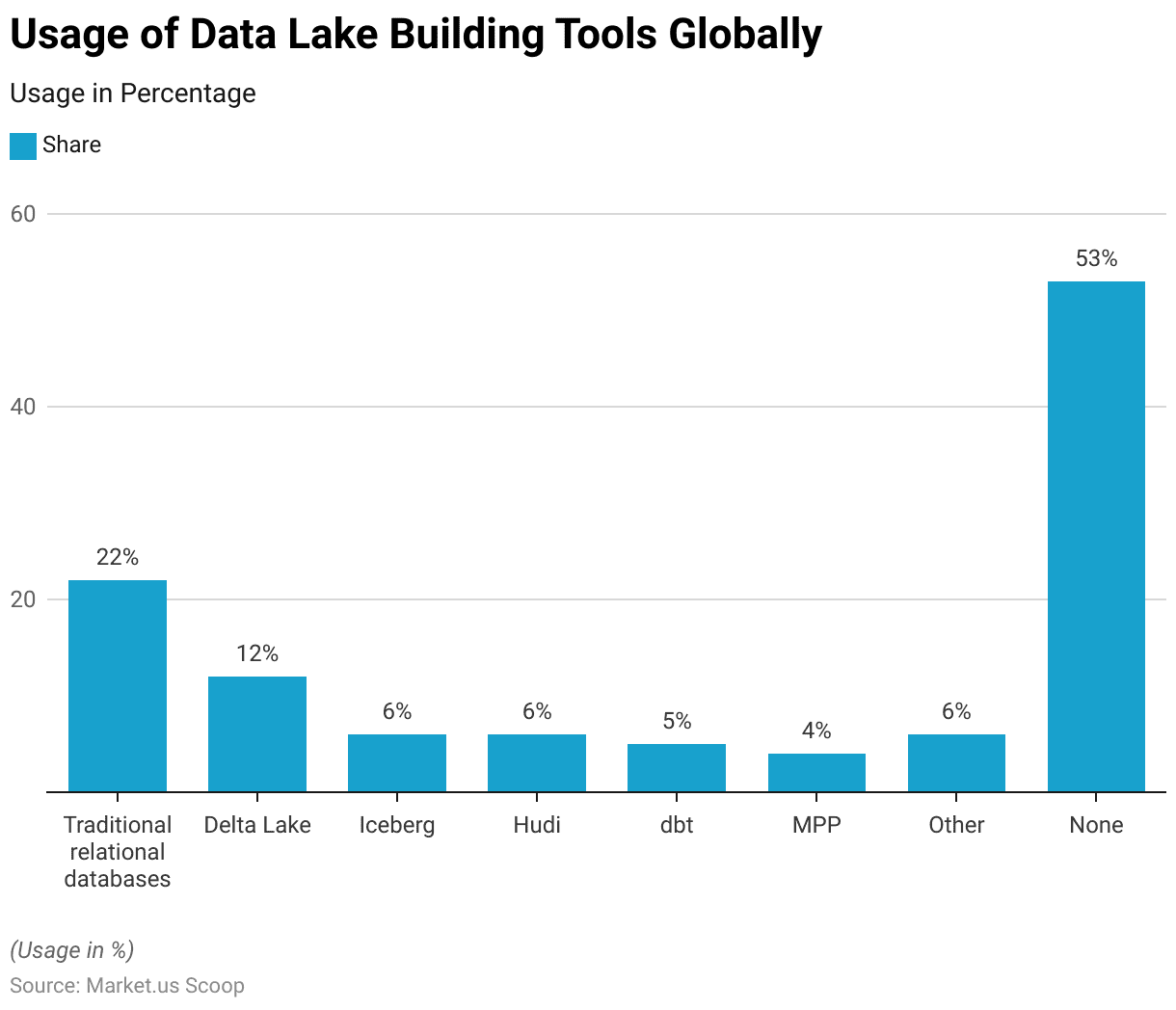

Usage of Data Lake Building Tools Globally Statistics

- In 2023, the global usage of tools for building data lakes reflects a varied landscape.

- Traditional relational databases remain the most widely used, with 22% of respondents indicating their preference for this tool.

- Delta Lake follows with 12%, while Apache Iceberg and Apache Hudi each account for 6% of usage.

- Other tools, such as dbt and MPP (massively parallel processing) databases, are used by 5% and 4% of respondents, respectively.

- Additionally, 6% of respondents use other unspecified tools. Notably, 53% of respondents report using no specific tool for building data lakes, which may indicate reliance on custom or proprietary solutions, or a lack of formalized tooling in certain sectors. Because the shares add up to more than 100%, respondents were presumably able to select more than one option.

(Source: Statista)

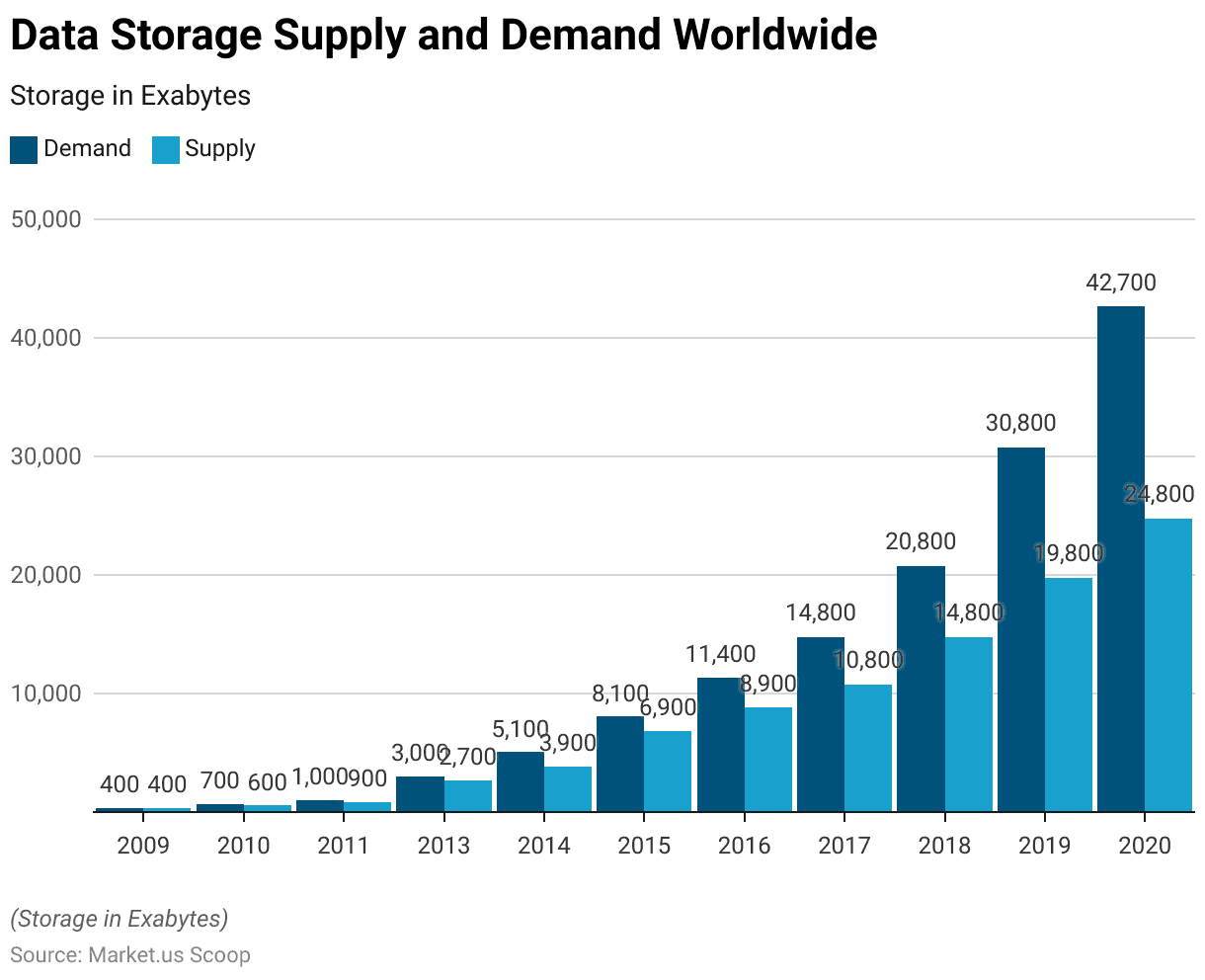

Data Lake Statistics By Data Storage Supply and Demand Worldwide

- From 2009 to 2020, the global demand for data storage experienced a dramatic increase, with supply struggling to keep pace.

- In 2009, both demand and supply were balanced at 400 exabytes.

- By 2010, demand rose to 700 exabytes, while supply lagged slightly at 600 exabytes.

- This gap widened over the years, as demand reached 1,000 exabytes in 2011, with supply at 900 exabytes.

- In 2012, demand surged to 2,000 exabytes, while supply increased to 1,700 exabytes.

- The trend continued, with demand growing to 3,000 exabytes in 2013 and supply reaching 2,700 exabytes.

- In 2014, demand jumped to 5,100 exabytes, compared to a supply of 3,900 exabytes.

- By 2015, demand had further increased to 8,100 exabytes, with supply at 6,900 exabytes.

- This pattern persisted into 2016, with demand at 11,400 exabytes and supply at 8,900 exabytes.

- The gap widened further in 2017, when demand reached 14,800 exabytes and supply was 10,800 exabytes.

- In 2018, demand grew to 20,800 exabytes, while supply increased to 14,800 exabytes.

- By 2019, demand reached 30,800 exabytes, while supply was 19,800 exabytes.

- Finally, in 2020, demand reached 42,700 exabytes, with supply rising to 24,800 exabytes.

- This significant gap between storage demand and supply highlights the accelerating need for data storage solutions worldwide.

(Source: Statista)
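The widening gap can be quantified with a short Python sketch that computes, for each year, the absolute shortfall and the share of demand that supply covers. The values are copied from the list above; the `storage_gap` helper is illustrative only.

```python
# Storage demand and supply in exabytes, from the figures above.
DEMAND = {2009: 400, 2010: 700, 2011: 1_000, 2012: 2_000, 2013: 3_000,
          2014: 5_100, 2015: 8_100, 2016: 11_400, 2017: 14_800,
          2018: 20_800, 2019: 30_800, 2020: 42_700}
SUPPLY = {2009: 400, 2010: 600, 2011: 900, 2012: 1_700, 2013: 2_700,
          2014: 3_900, 2015: 6_900, 2016: 8_900, 2017: 10_800,
          2018: 14_800, 2019: 19_800, 2020: 24_800}


def storage_gap(demand, supply):
    """Return {year: (shortfall in EB, fraction of demand covered by supply)}."""
    return {
        year: (demand[year] - supply[year], supply[year] / demand[year])
        for year in sorted(demand)
    }


gap = storage_gap(DEMAND, SUPPLY)
for year, (shortfall, coverage) in gap.items():
    print(f"{year}: shortfall {shortfall:,} EB, supply covers {coverage:.0%} of demand")
```

By 2020, supply covers only about 58% of demand, a shortfall of 17,900 exabytes, compared with full coverage in 2009.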

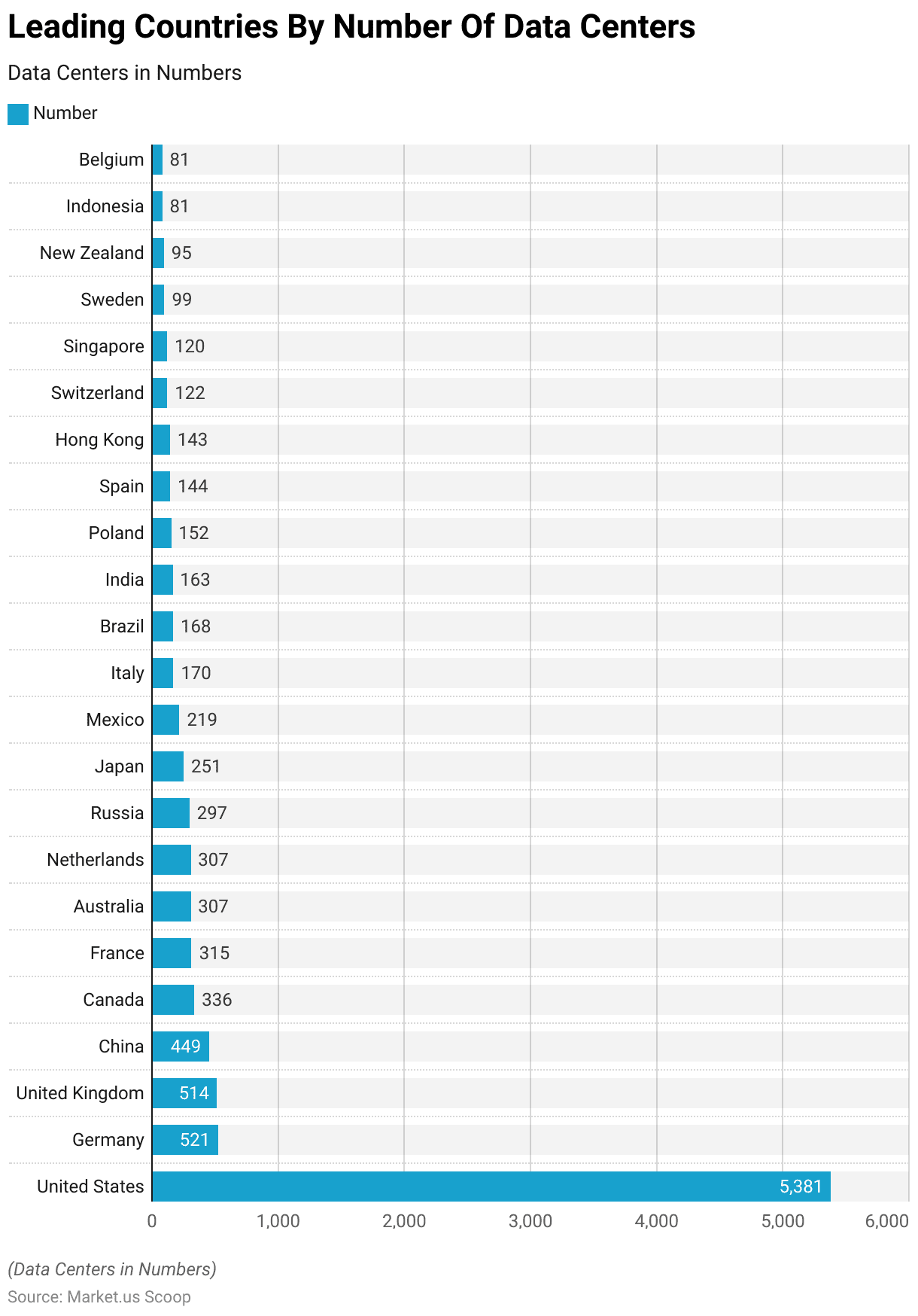

Leading Countries by Number of Data Centers By Data Lake Statistics

- As of March 2024, the United States leads the world in the number of data centers, with a total of 5,381 facilities.

- Germany follows with 521 data centers, while the United Kingdom comes in third with 514.

- China ranks fourth with 449 data centers, and Canada completes the top five with 336.

- France has 315 data centers, followed by Australia and the Netherlands, each with 307.

- Russia hosts 297 data centers, while Japan has 251, and Mexico has 219.

- Further down the list, Italy has 170 data centers, Brazil 168, and India 163.

- Poland has 152 data centers, Spain 144, and Hong Kong 143.

- Switzerland hosts 122 data centers, while Singapore has 120.

- Sweden has 99 data centers, and New Zealand has 95.

- Indonesia and Belgium both have 81 data centers.

- This distribution reflects the global demand for data infrastructure, particularly in developed nations and rapidly growing economies.

(Source: Statista)

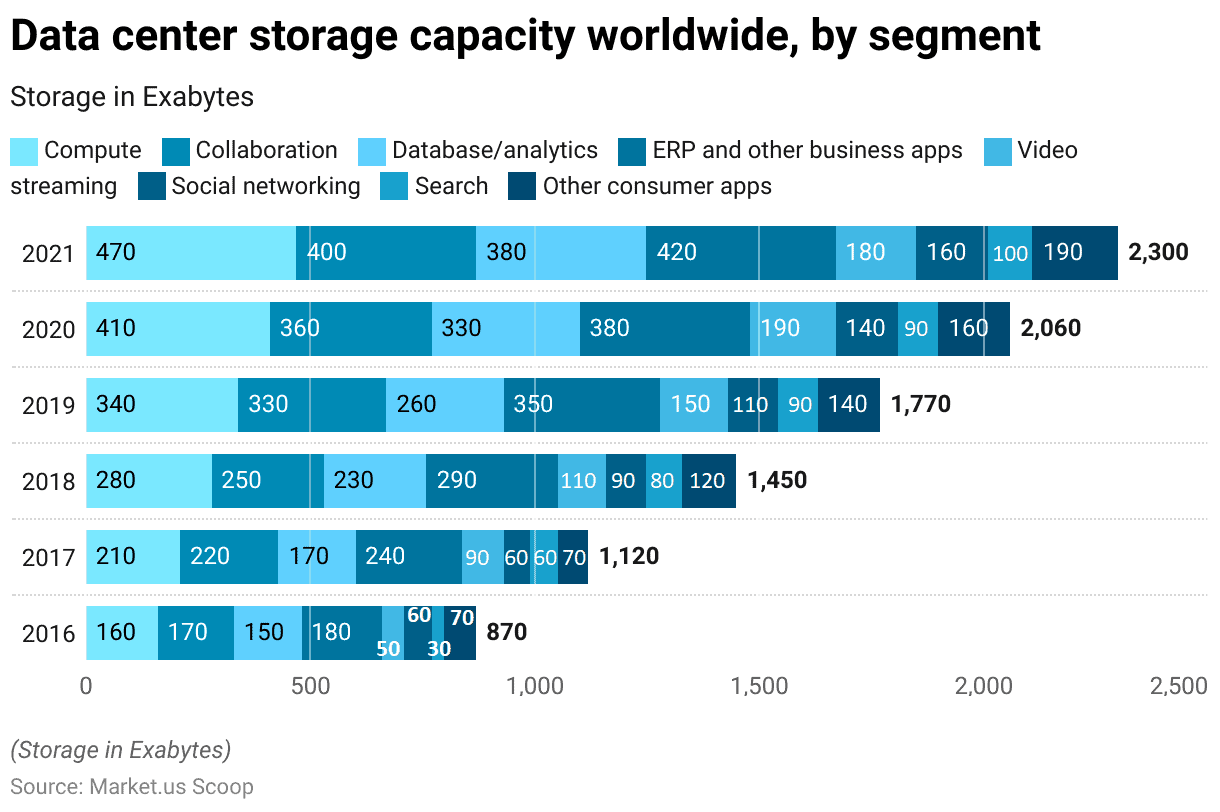

Data Center Storage Capacity Worldwide

2016-2018

- From 2016 to 2021, global data center storage capacity expanded significantly across various segments.

- In 2016, compute capacity reached 160 exabytes, collaboration tools utilized 170 exabytes, and database/analytics held 150 exabytes.

- ERP and other business applications accounted for 180 exabytes, while video streaming, social networking, search, and other consumer apps held 50, 60, 30, and 70 exabytes, respectively.

- By 2017, storage capacity for compute increased to 210 exabytes, with collaboration reaching 220 exabytes, database/analytics 170 exabytes, and ERP/business applications 240 exabytes.

- Video streaming grew to 90 exabytes, social networking held steady at 60 exabytes, search doubled to 60 exabytes, and other consumer apps remained at 70 exabytes.

- In 2018, compute capacity expanded to 280 exabytes, collaboration to 250 exabytes, and database/analytics to 230 exabytes.

- ERP and other business applications grew to 290 exabytes, while video streaming increased to 110 exabytes. Social networking rose to 90 exabytes, search to 80 exabytes, and other consumer apps to 120 exabytes.

2019-2021

- This growth continued in 2019, with compute storage reaching 340 exabytes, collaboration at 330 exabytes, and database/analytics at 260 exabytes.

- ERP/business applications expanded to 350 exabytes, while video streaming, social networking, and search grew to 150, 110, and 90 exabytes, respectively, and other consumer apps increased to 140 exabytes.

- In 2020, compute storage capacity reached 410 exabytes, collaboration tools expanded to 360 exabytes, and database/analytics grew to 330 exabytes.

- ERP/business applications rose to 380 exabytes, video streaming to 190 exabytes, and social networking to 140 exabytes. Search remained stable at 90 exabytes, while other consumer apps increased to 160 exabytes.

- Finally, in 2021, compute storage reached 470 exabytes, collaboration tools grew to 400 exabytes, and database/analytics rose to 380 exabytes. ERP and other business applications expanded to 420 exabytes, while video streaming slightly decreased to 180 exabytes.

- Social networking continued to grow to 160 exabytes, search reached 100 exabytes, and other consumer apps expanded to 190 exabytes.

- This consistent growth highlights the increasing demand for data storage across various sectors, driven by the rise of cloud computing, collaboration platforms, and digital content consumption.

(Source: Statista)

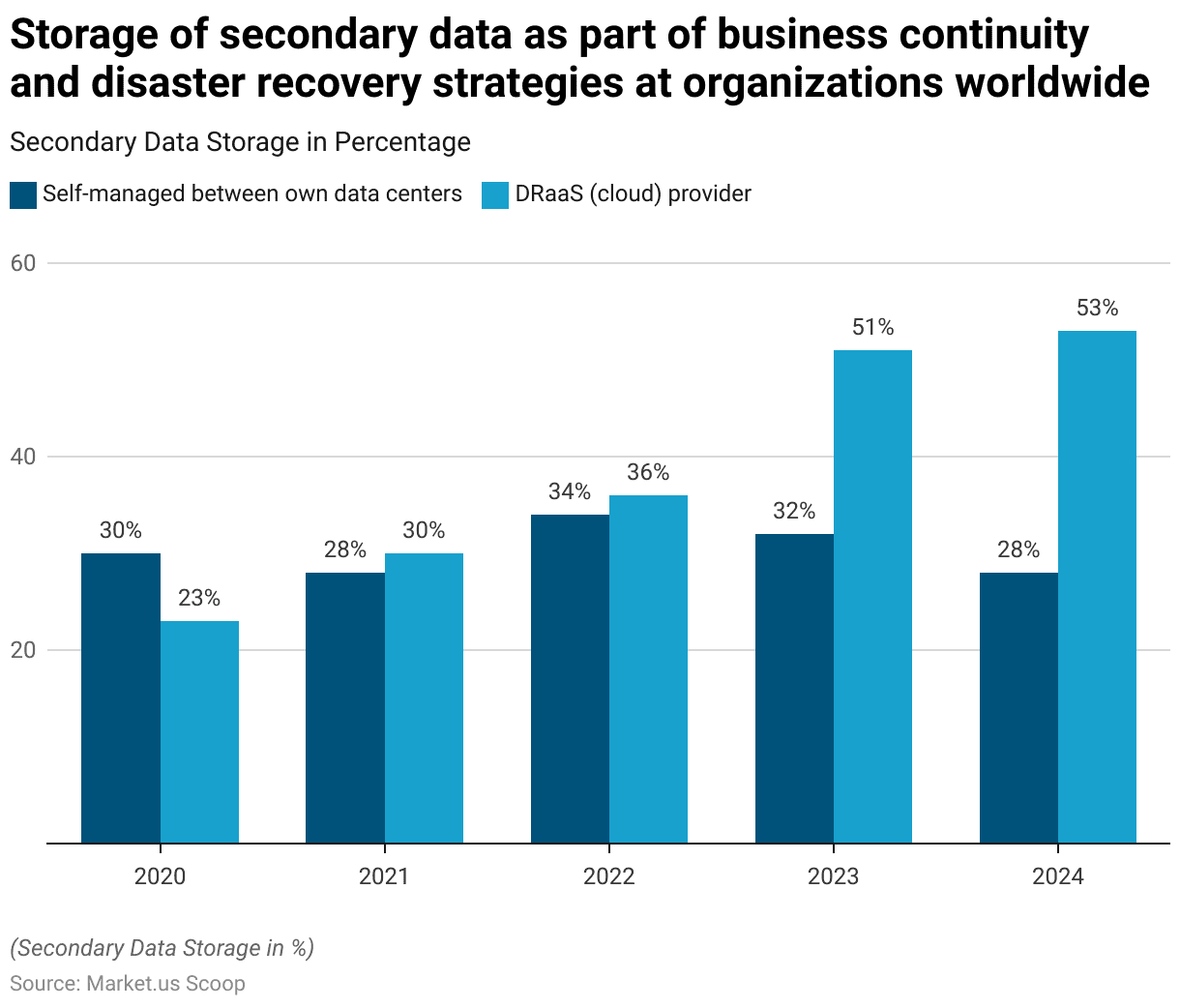

Secondary Data Storage Locations at Organizations

- From 2020 to 2024, the approach to storing secondary data as part of business continuity and disaster recovery strategies has shifted significantly among organizations worldwide.

- In 2020, 30% of organizations managed their secondary data between their own data centers, while 23% relied on Disaster Recovery as a Service (DRaaS) from cloud providers.

- By 2021, the balance had shifted, with DRaaS adoption rising to 30% and overtaking self-managed solutions, which fell to 28%.

- In 2022, DRaaS extended its lead, with 36% of organizations using cloud providers for disaster recovery, compared with 34% that continued to manage their own secondary data.

- This trend accelerated in 2023, as DRaaS adoption surged to 51%, while self-managed solutions dropped slightly to 32%.

- The forecast for 2024 indicates that DRaaS will continue to grow, with 53% of organizations expected to use cloud providers for disaster recovery.

- In contrast, self-managed approaches are projected to decline further to 28%.

- This shift reflects the growing preference for cloud-based solutions due to their scalability, cost-efficiency, and enhanced recovery capabilities.

(Source: Statista)

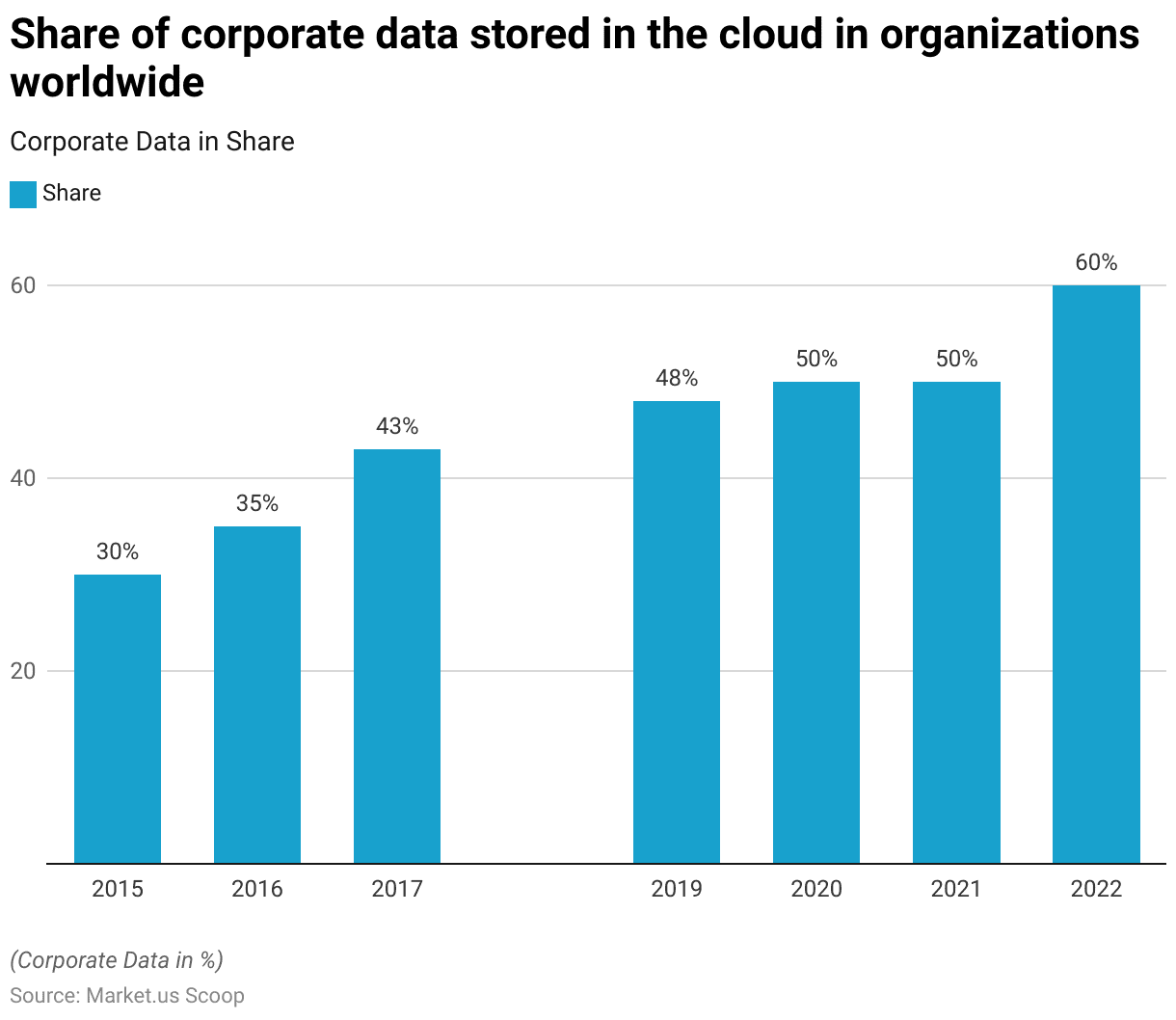

Cloud Storage of Corporate Data in Organizations Worldwide

- The share of corporate data stored in the cloud by organizations worldwide has increased significantly from 2015 to 2022.

- In 2015, 30% of corporate data was stored in the cloud, a figure that grew to 35% by 2016.

- This upward trend continued, with 43% of corporate data stored in the cloud in 2017.

- By 2019, nearly half of all corporate data, 48%, was cloud-based.

- In 2020 and 2021, the share of corporate data stored in the cloud stabilized at 50%, meaning half of all corporate data was managed in the cloud.

- In 2022, this share rose to 60%, reflecting a continued shift toward cloud solutions for corporate data storage, driven by factors such as enhanced scalability, cost-efficiency, and the growing reliance on cloud infrastructure for business operations.

(Source: Statista)

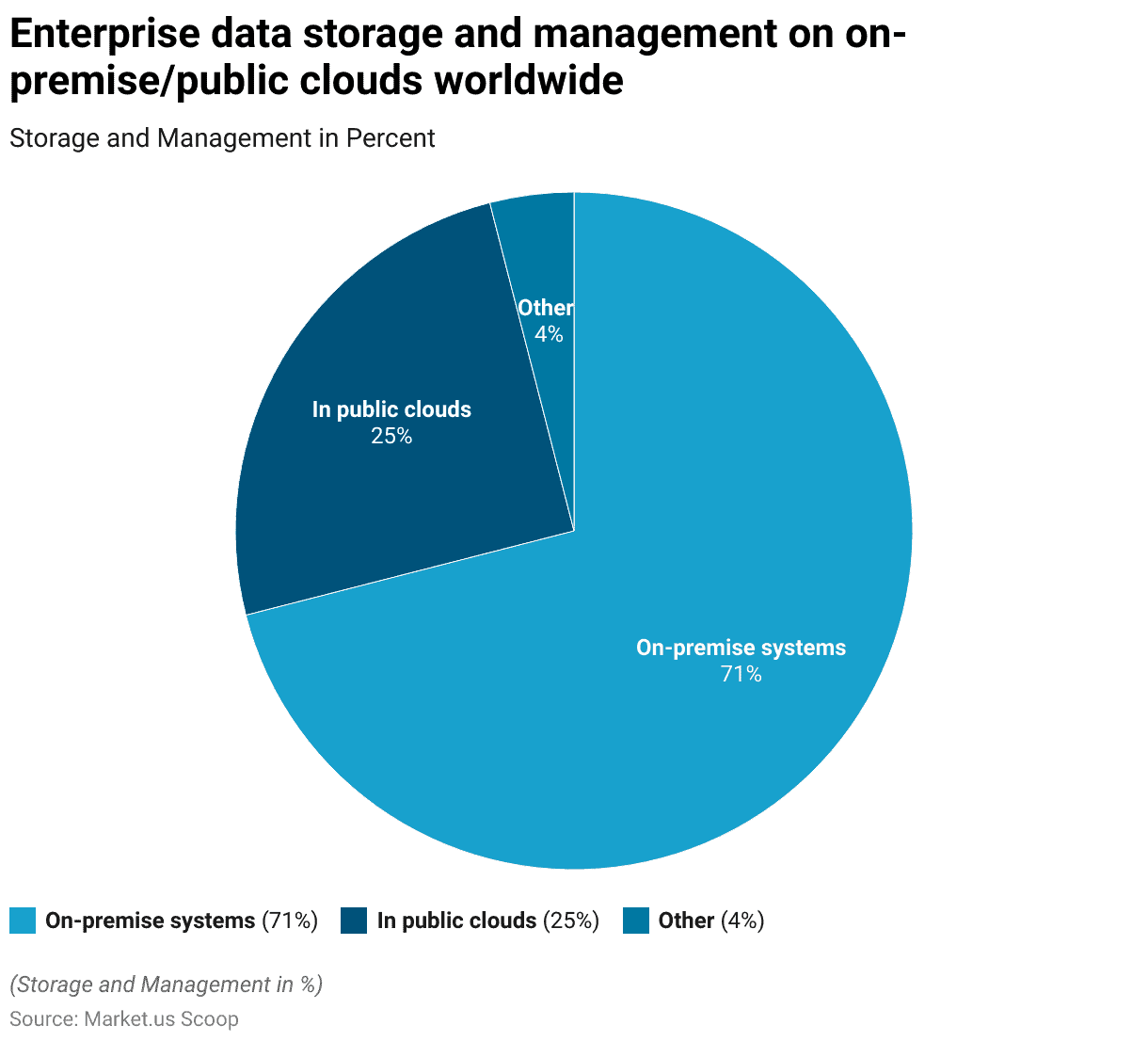

Enterprise Data Storage and Management

- In 2018, the majority of enterprise data storage and management was conducted on on-premise systems, accounting for 71% of the total.

- Public cloud infrastructure was used for 25% of enterprise data storage, reflecting the growing but still secondary role of cloud solutions.

- The remaining 4% of data storage and management fell into the “Other” category, which likely includes hybrid solutions and specialized data management systems.

- This distribution indicates that, while cloud adoption was increasing, on-premise systems still dominated enterprise data management at that time.

(Source: Statista)

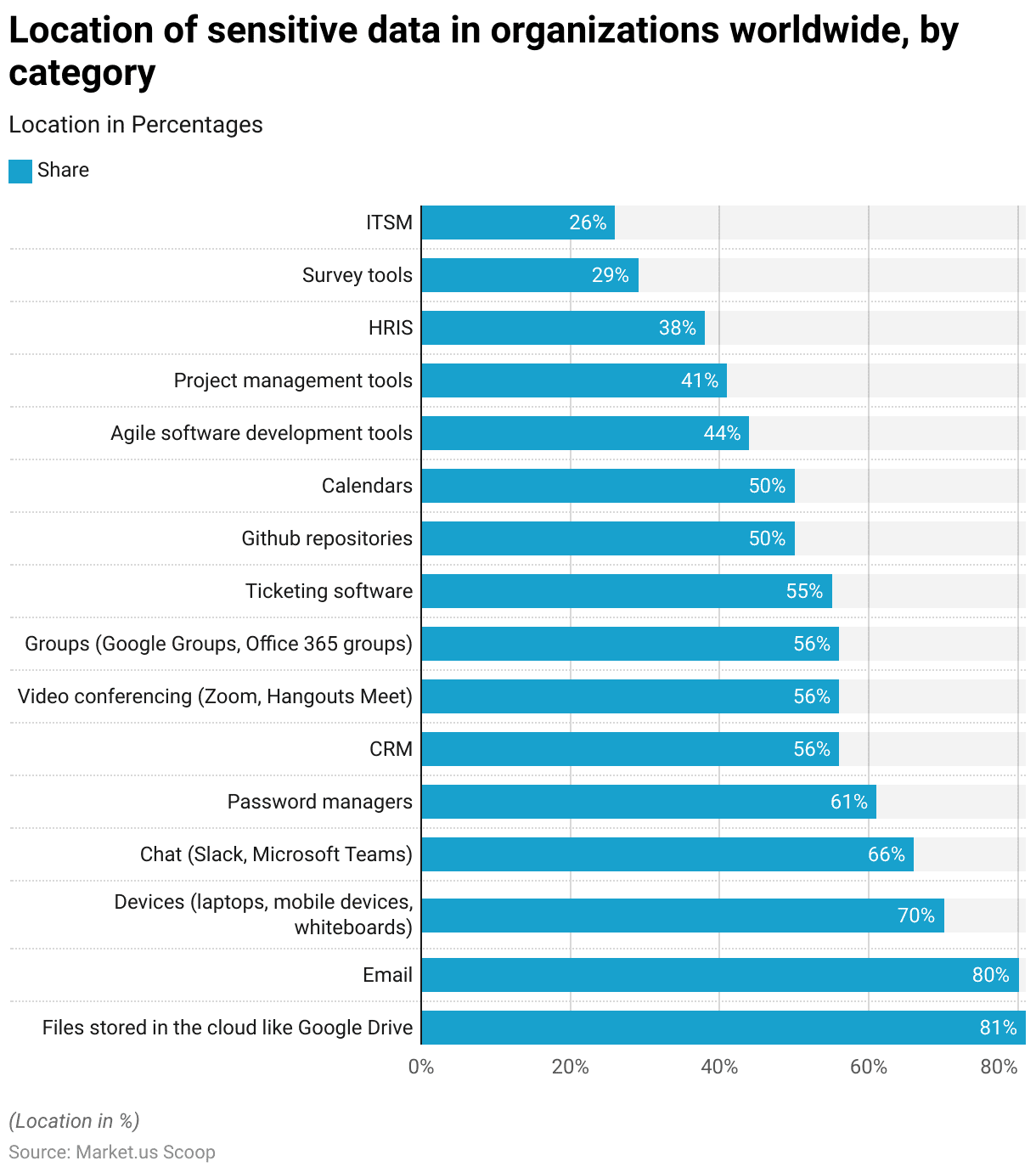

Location of Sensitive Data in Organizations

- In 2020, organizations worldwide stored sensitive data across various locations, with cloud storage solutions and email being the most common.

- Files stored in cloud platforms such as Google Drive were reported by 81% of respondents, while 80% indicated that sensitive data was located in email systems.

- Devices such as laptops, mobile devices, and whiteboards were identified as holding sensitive information by 70% of respondents.

- Additionally, chat applications like Slack and Microsoft Teams were reported by 66%, and password managers by 61%.

- Other key locations for sensitive data included CRM systems (56%), video conferencing platforms (56%), and groups like Google Groups and Office 365 Groups (56%).

- Ticketing software was identified by 55%, while GitHub repositories and calendars each accounted for 50% of responses.

- Agile software development tools were highlighted by 44%, project management tools by 41%, and HRIS (Human Resource Information Systems) by 38%.

- Survey tools and ITSM (IT Service Management) solutions were reported by 29% and 26% of respondents, respectively.

- These findings underscore the diverse range of platforms and systems where sensitive organizational data is stored, necessitating robust security measures across all these channels.

(Source: Statista)

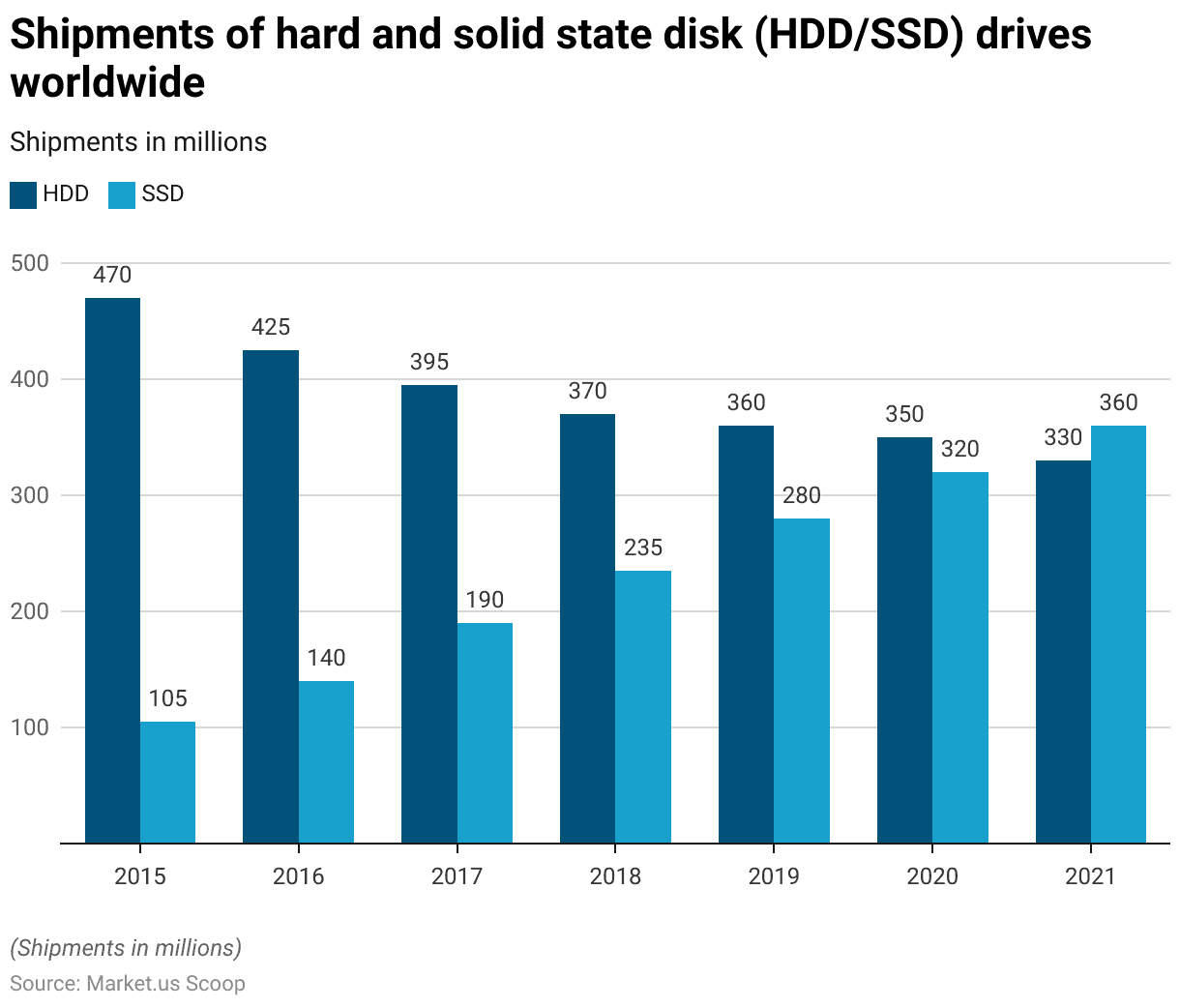

Data Storage Devices Shipments Statistics

HDD and SSD Shipments

- From 2015 to 2021, the global shipment of hard disk drives (HDD) steadily declined, while shipments of solid-state drives (SSD) experienced significant growth.

- In 2015, HDD shipments were at 470 million units, compared to just 105 million for SSDs.

- By 2016, HDD shipments had decreased to 425 million, while SSD shipments increased to 140 million.

- This trend continued, with HDD shipments dropping to 395 million in 2017 and 370 million in 2018, while SSD shipments rose to 190 million and 235 million, respectively.

- In 2019, HDD shipments fell further to 360 million, while SSDs surged to 280 million units.

- By 2020, HDD shipments had decreased to 350 million, whereas SSD shipments reached 320 million.

- In 2021, the shift became even more pronounced, with HDD shipments dropping to 330 million, while SSD shipments surpassed HDDs for the first time, reaching 360 million units.

- This reflects the increasing preference for SSDs due to their faster performance, lower power consumption, and enhanced durability, driving their adoption over traditional HDDs.

(Source: Statista)
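The crossover described above can be located programmatically. This Python sketch finds the first year in which SSD shipments exceed HDD shipments and computes the SSD share of combined units, using the figures listed above. Note that it counts units only, not storage capacity, and that the `first_crossover` helper is illustrative.

```python
# Annual shipments in millions of units, from the figures above.
HDD = {2015: 470, 2016: 425, 2017: 395, 2018: 370, 2019: 360, 2020: 350, 2021: 330}
SSD = {2015: 105, 2016: 140, 2017: 190, 2018: 235, 2019: 280, 2020: 320, 2021: 360}


def first_crossover(a, b):
    """Return the first year in which series `b` exceeds series `a`, or None."""
    for year in sorted(a):
        if b[year] > a[year]:
            return year
    return None


ssd_share = {year: SSD[year] / (SSD[year] + HDD[year]) for year in sorted(HDD)}
crossover = first_crossover(HDD, SSD)

print("SSDs first outship HDDs in", crossover)
for year, share in ssd_share.items():
    print(f"{year}: SSD share of combined units {share:.0%}")
```

By unit count, SSDs rise from about 18% of combined HDD and SSD shipments in 2015 to about 52% in 2021, the first year they outship HDDs.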

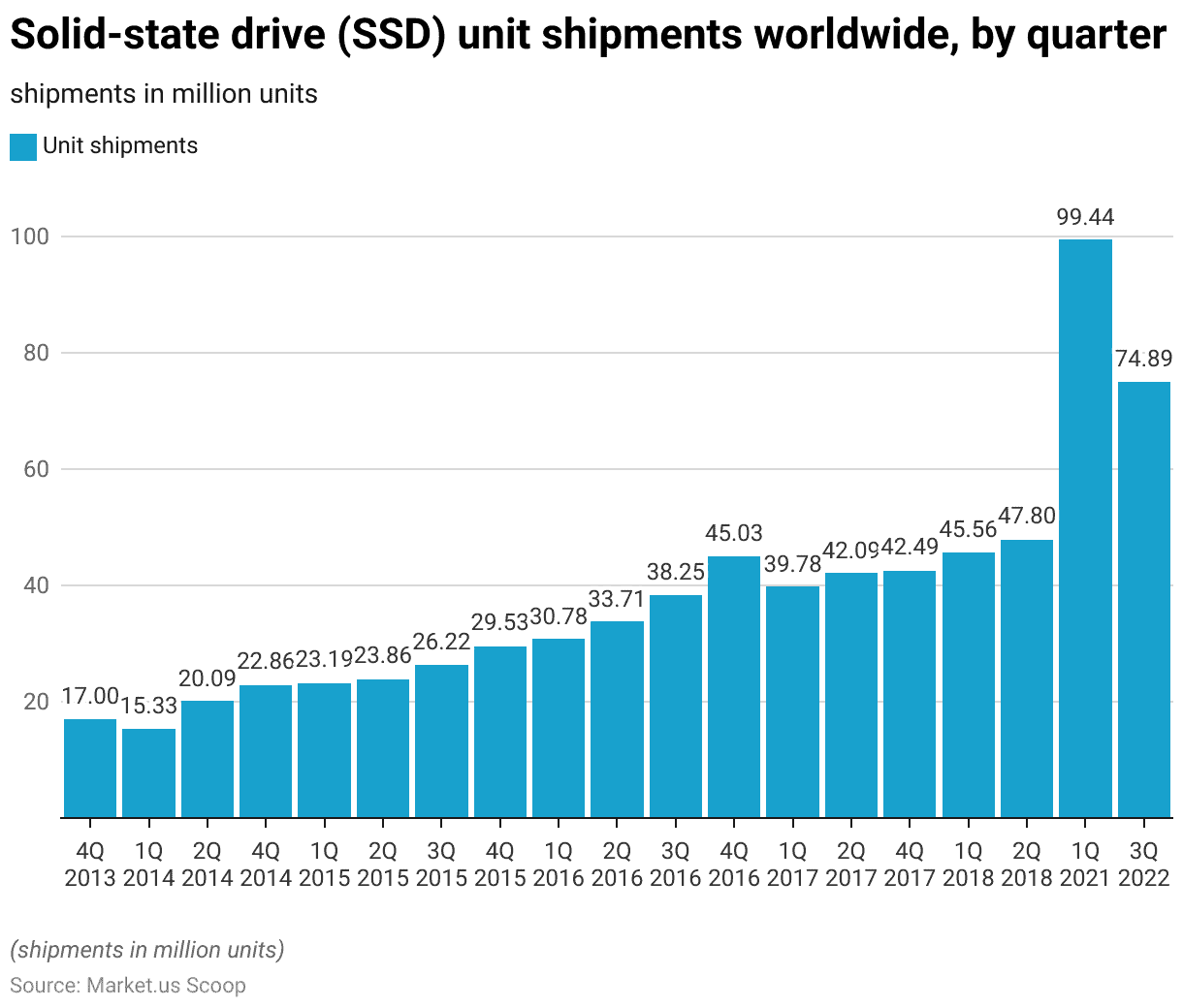

SSD Shipments

- From 2013 to 2022, the global shipment of solid-state drives (SSD) saw a substantial increase, reflecting the growing demand for faster and more efficient storage solutions.

- In the fourth quarter of 2013, SSD shipments were recorded at 17 million units.

- This figure fluctuated but generally trended upward over the following years. Shipments dipped to 15.33 million units in the first quarter of 2014, then rose to 20.09 million in the second quarter and 22.86 million by the fourth quarter of that year.

- In 2015, SSD shipments continued to grow, starting at 23.19 million units in the first quarter and increasing steadily to 26.22 million in the third quarter and 29.53 million in the fourth quarter.

- This upward trajectory persisted into 2016, with shipments reaching 30.78 million in the first quarter, 33.71 million in the second quarter, 38.25 million in the third quarter, and peaking at 45.03 million in the fourth quarter.

- Shipments eased in early 2017, falling to 39.78 million in the first quarter before recovering to 42.09 million in the second quarter.

- By the end of 2017, shipments stabilized at 42.49 million units. The growth trend persisted in 2018, with shipments increasing to 45.56 million in the first quarter and 47.8 million in the second quarter.

- By the first quarter of 2021, SSD shipments saw a dramatic rise, reaching an impressive 99.44 million units, reflecting the explosion in demand for storage due to increasing digitalization.

- In the third quarter of 2022, shipments stood at 74.89 million units, below the early-2021 figure but still well above the 2018 levels, underscoring the continuing importance of SSDs in the global market.

- This overall growth highlights the widespread adoption of SSDs as the preferred storage medium over traditional hard drives.

(Source: Statista)

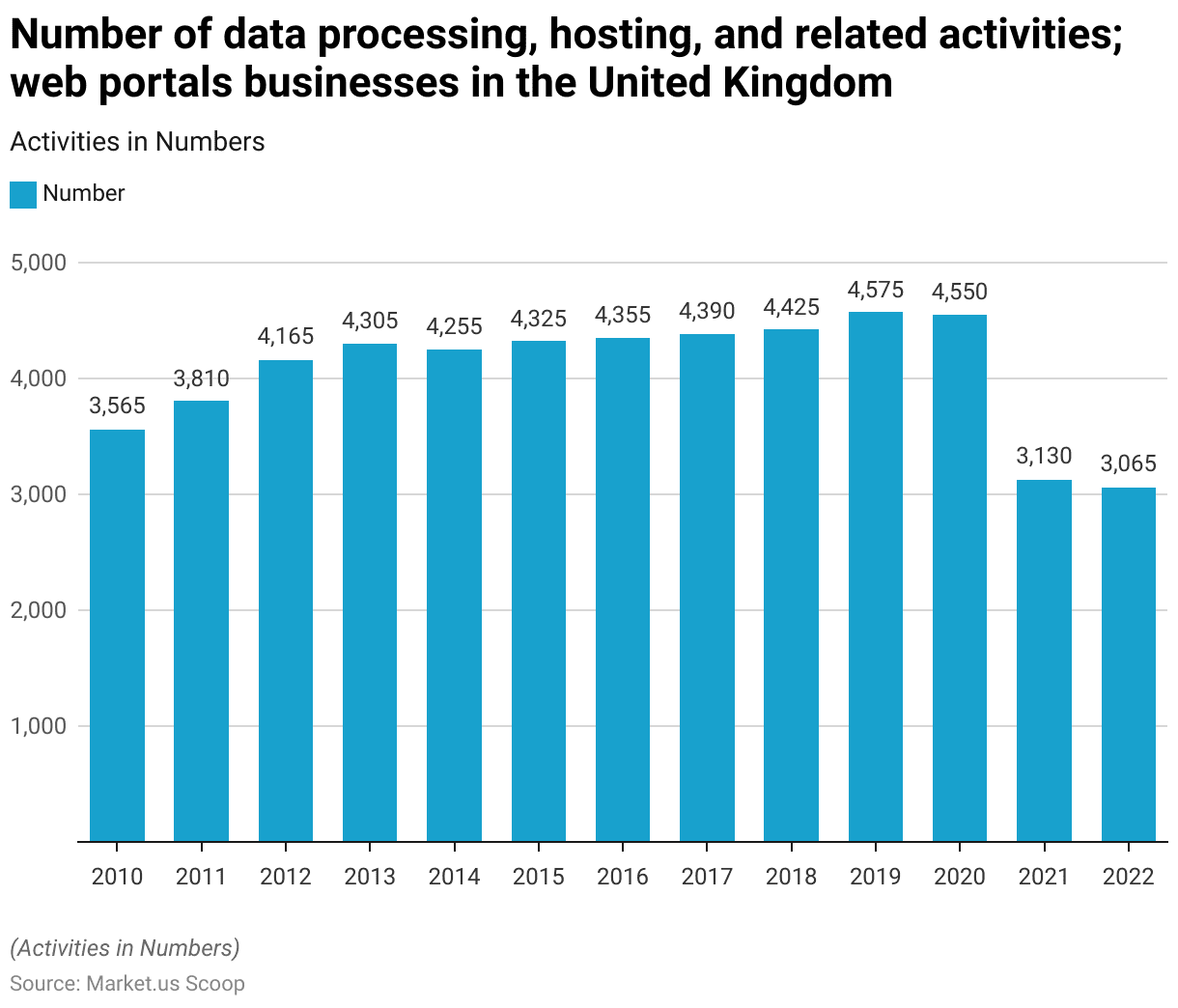

Data Processing and Hosting Businesses

- The number of businesses engaged in data processing, hosting, and related activities, as well as web portals in the United Kingdom, exhibited steady growth from 2010 to 2019, followed by a decline in the subsequent years.

- In 2010, there were 3,565 such businesses, a figure that increased to 3,810 in 2011 and continued to rise to 4,165 in 2012.

- By 2013, the number had grown to 4,305 businesses, although a slight decline occurred in 2014, with 4,255 businesses reported.

- The industry rebounded in 2015, reaching 4,325 businesses, and this upward trend persisted over the next few years, peaking at 4,575 businesses in 2019.

- However, by 2020, the number of businesses slightly decreased to 4,550, followed by a more significant decline in 2021, with only 3,130 businesses reported.

- In 2022, the downward trend continued, with 3,065 businesses in the sector.

- This decline may reflect changes in the industry, including consolidation, technological advancements, or market saturation.

(Source: Statista)

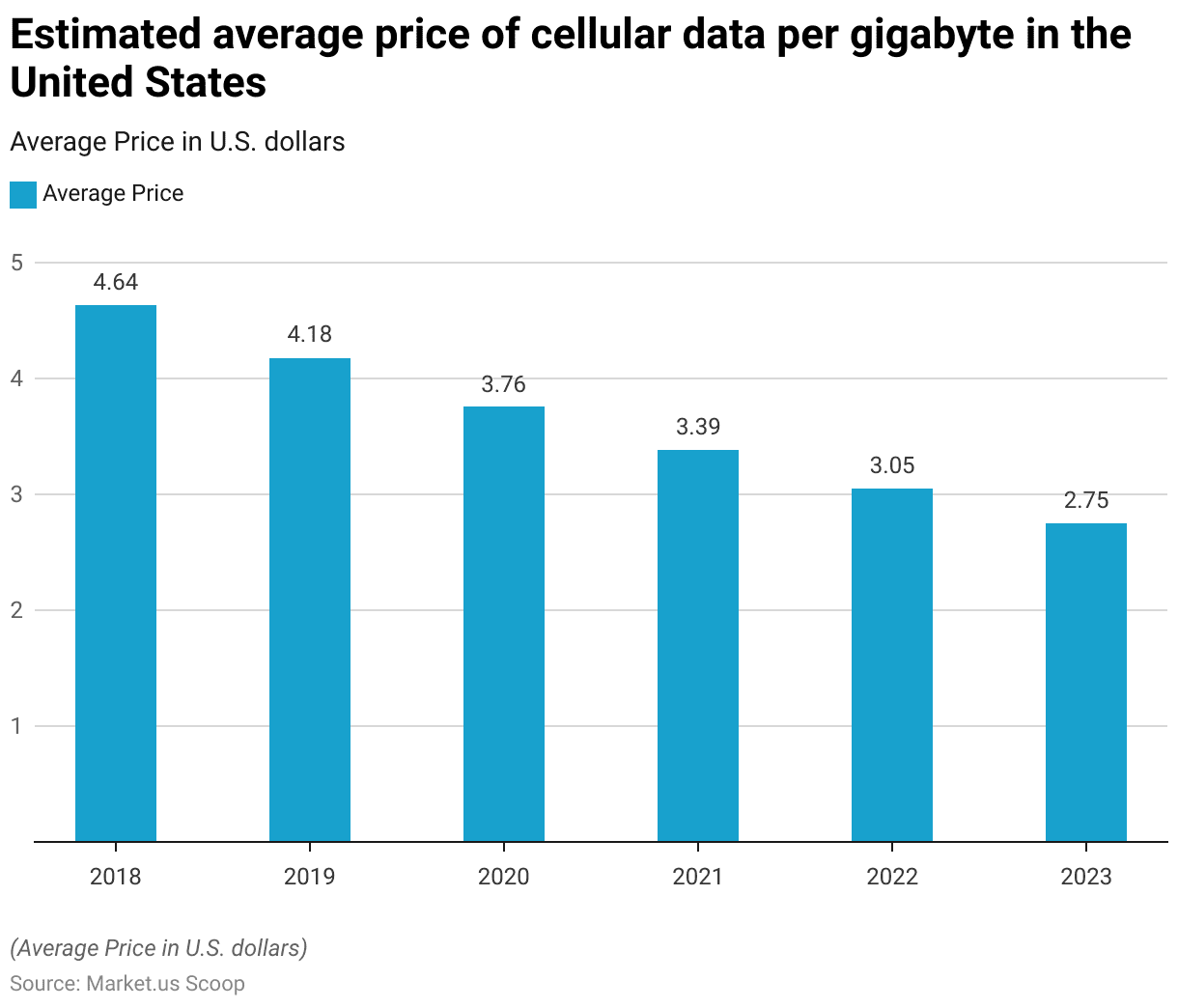

Cost Metrics

Price of Cellular Data

- The estimated average price of cellular data per gigabyte in the United States has steadily decreased from 2018 to 2023.

- In 2018, the price was USD 4.64 per gigabyte, which dropped to USD 4.18 in 2019.

- The decline continued in 2020, with the average price reaching USD 3.76 per gigabyte.

- By 2021, the cost was further reduced to USD 3.39, and in 2022, it dropped to USD 3.05.

- In 2023, the trend of decreasing prices persisted, with the average price per gigabyte of cellular data falling to USD 2.75.

- This consistent decline likely reflects increased competition, technological advances, and improved infrastructure in the mobile data industry.

(Source: Statista)
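How steady is this decline? The Python sketch below computes the year-over-year change and the compound annual rate of change over 2018 to 2023, using only the prices listed above.

```python
# Average US price of cellular data in USD per gigabyte, from the figures above.
PRICE_PER_GB = {2018: 4.64, 2019: 4.18, 2020: 3.76, 2021: 3.39, 2022: 3.05, 2023: 2.75}

years = sorted(PRICE_PER_GB)

# Year-over-year change (negative values are declines).
annual_change = {
    year: PRICE_PER_GB[year] / PRICE_PER_GB[prev] - 1
    for prev, year in zip(years, years[1:])
}

# Compound annual rate of change across the whole period.
span = years[-1] - years[0]
overall = (PRICE_PER_GB[years[-1]] / PRICE_PER_GB[years[0]]) ** (1 / span) - 1

for year, change in annual_change.items():
    print(f"{year}: {change:+.1%}")
print(f"2018-2023 compound rate: {overall:+.1%}")
```

Every year's price is roughly 90% of the previous year's, so the series amounts to a near-constant decline of about 10% per year (a compound rate of roughly -9.9%).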

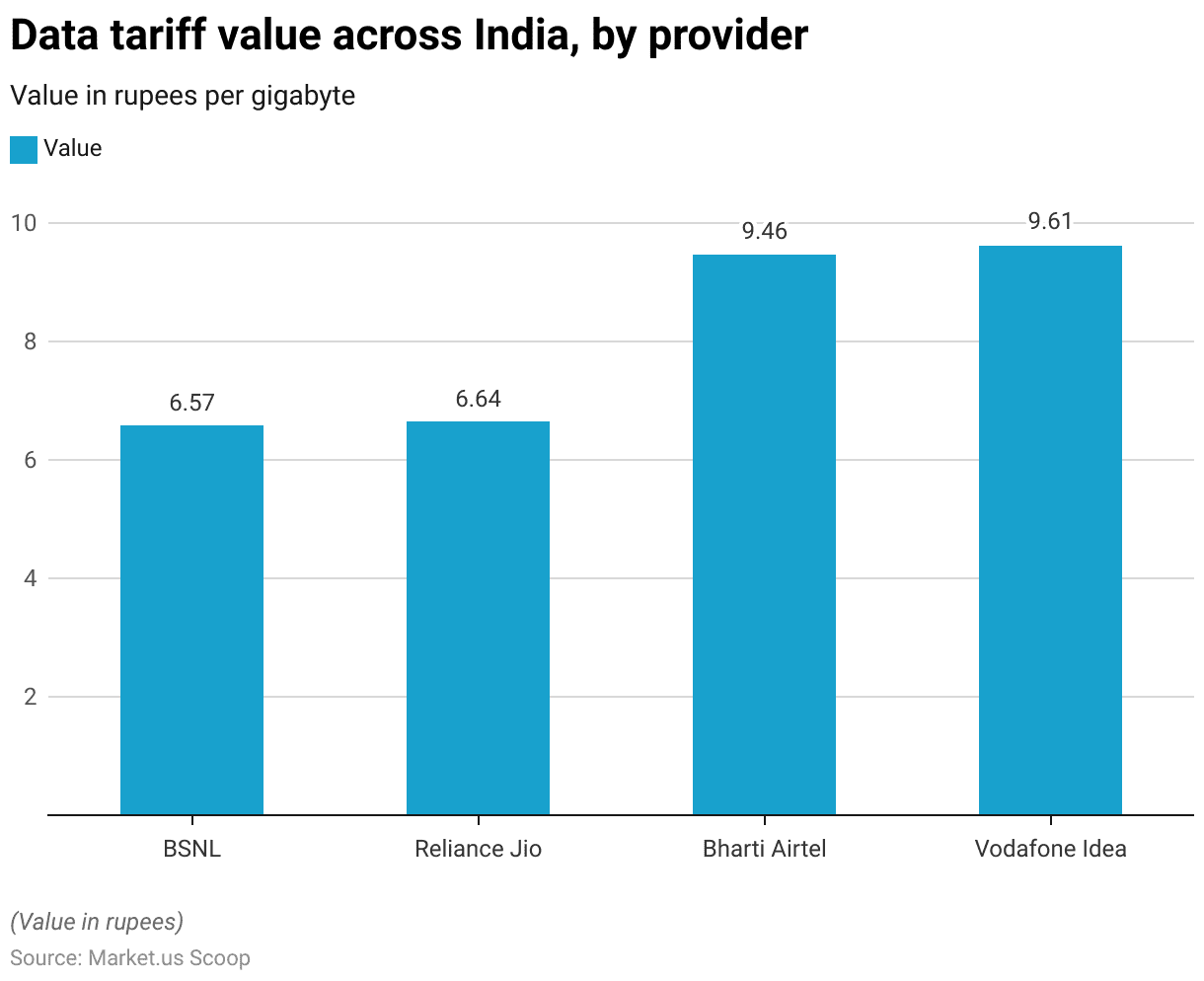

Data Tariff Value

- As of August 2023, data tariff values across India varied by provider, with BSNL offering the lowest rate at INR 6.57 per gigabyte.

- Reliance Jio followed closely with a tariff of INR 6.64 per gigabyte.

- Bharti Airtel charged a higher rate at INR 9.46 per gigabyte, while Vodafone Idea had the highest data tariff among the major providers at INR 9.61 per gigabyte.

- These variations reflect differing pricing strategies and market positions within the Indian telecommunications industry.

(Source: Statista)

Key Spending Statistics

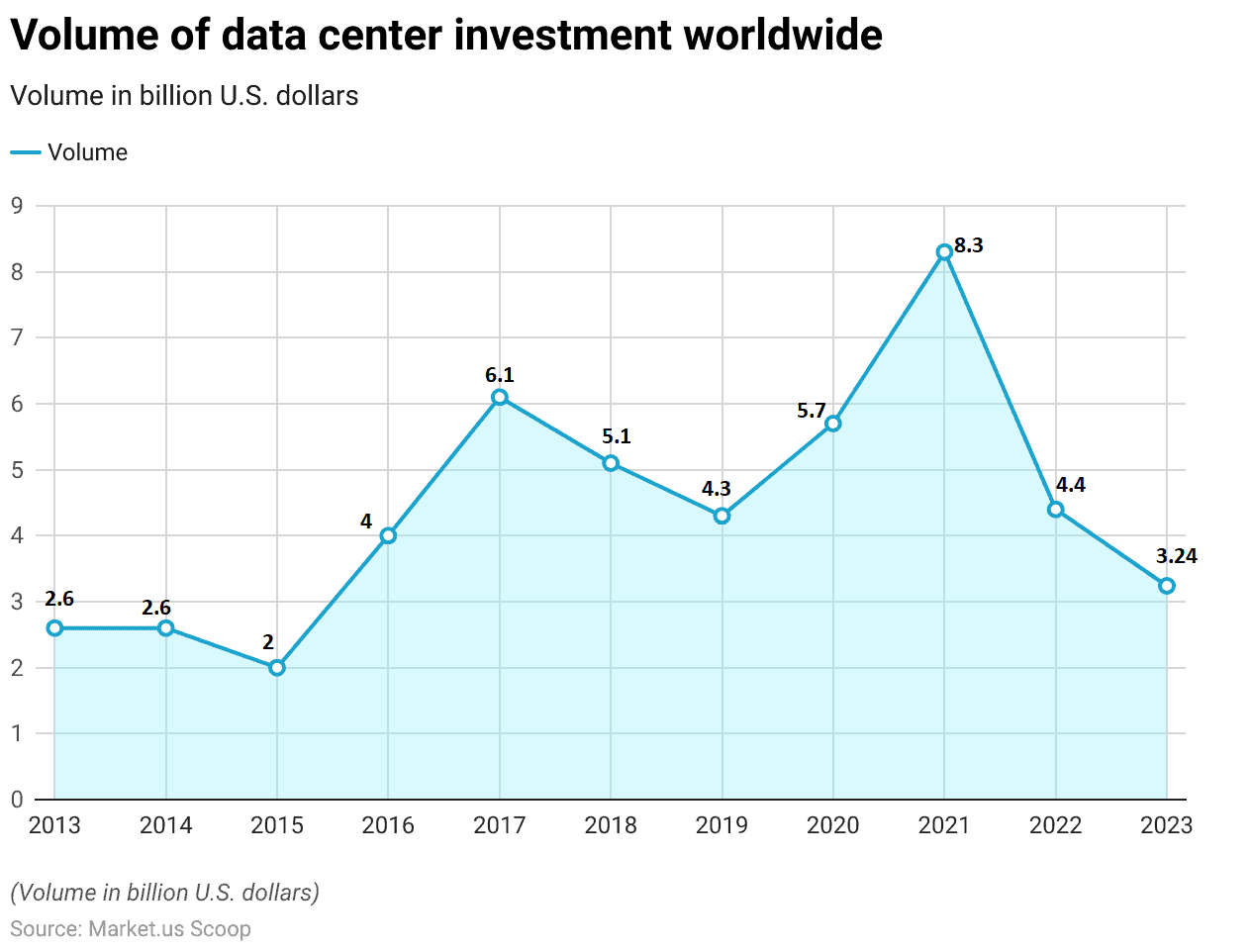

The Volume of Data Center Investment Worldwide

- The volume of global data center investment has fluctuated significantly from 2013 to 2023.

- In both 2013 and 2014, the transaction volume stood at USD 2.6 billion before dropping slightly to USD 2 billion in 2015.

- In 2016, investment doubled to USD 4 billion, and by 2017, it surged to USD 6.1 billion.

- However, a slight decline followed, with investment falling to USD 5.1 billion in 2018 and further to USD 4.3 billion in 2019.

- In 2020, data center investment recovered, reaching USD 5.7 billion, followed by a significant jump in 2021 to USD 8.3 billion, the highest in this period.

- In 2022, investment dropped to USD 4.4 billion.

- As of 2023 year-to-date (YTD), the transaction volume stands at USD 3.24 billion, reflecting ongoing activity in the data center sector but with a potential decline compared to previous years.

- These fluctuations in investment volumes highlight the dynamic nature of the data center market, influenced by evolving technology needs and economic factors.

(Source: Statista)

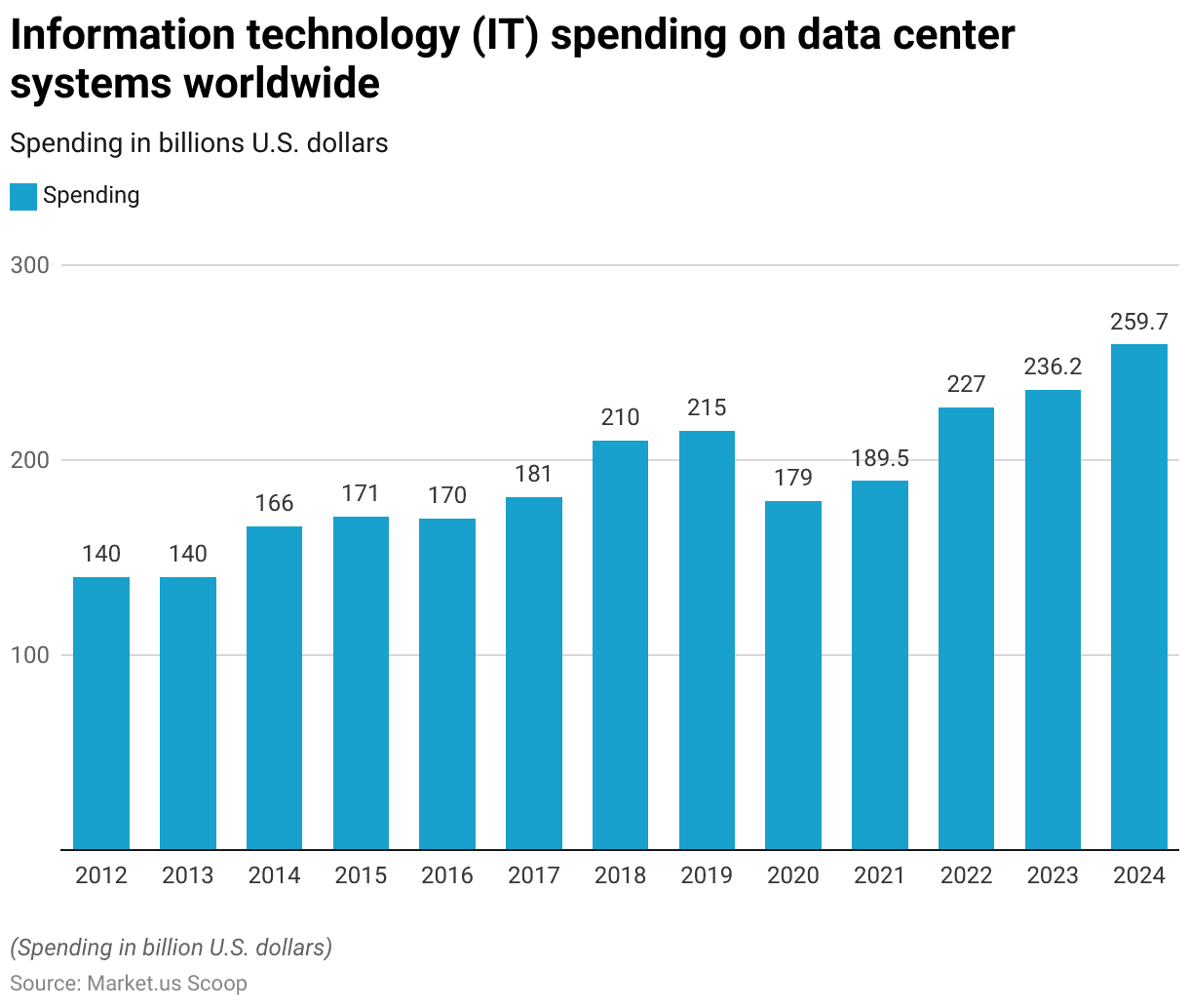

Information Technology (IT) Spending on Data Center Systems

- Global information technology (IT) spending on data center systems has experienced fluctuations from 2012 to 2024.

- In 2012 and 2013, spending remained stable at USD 140 billion.

- By 2014, it increased to USD 166 billion and continued to rise, reaching USD 171 billion in 2015.

- In 2016, spending slightly decreased to USD 170 billion, but growth resumed in 2017, when spending rose to USD 181 billion.

- A significant jump occurred in 2018, with IT spending on data center systems reaching USD 210 billion, followed by a modest increase to USD 215 billion in 2019.

- However, in 2020, spending dropped to USD 179 billion, likely reflecting market adjustments or shifts in investment.

- By 2021, the trend reversed, with spending reaching USD 189.51 billion, and continued growth was observed in 2022, with spending increasing to USD 227.02 billion.

- The upward trend is projected to persist, with global IT spending on data center systems expected to reach USD 236.18 billion in 2023 and further rise to USD 259.68 billion by 2024, driven by the ongoing demand for data infrastructure and cloud services.

(Source: Statista)

Issues and Challenges

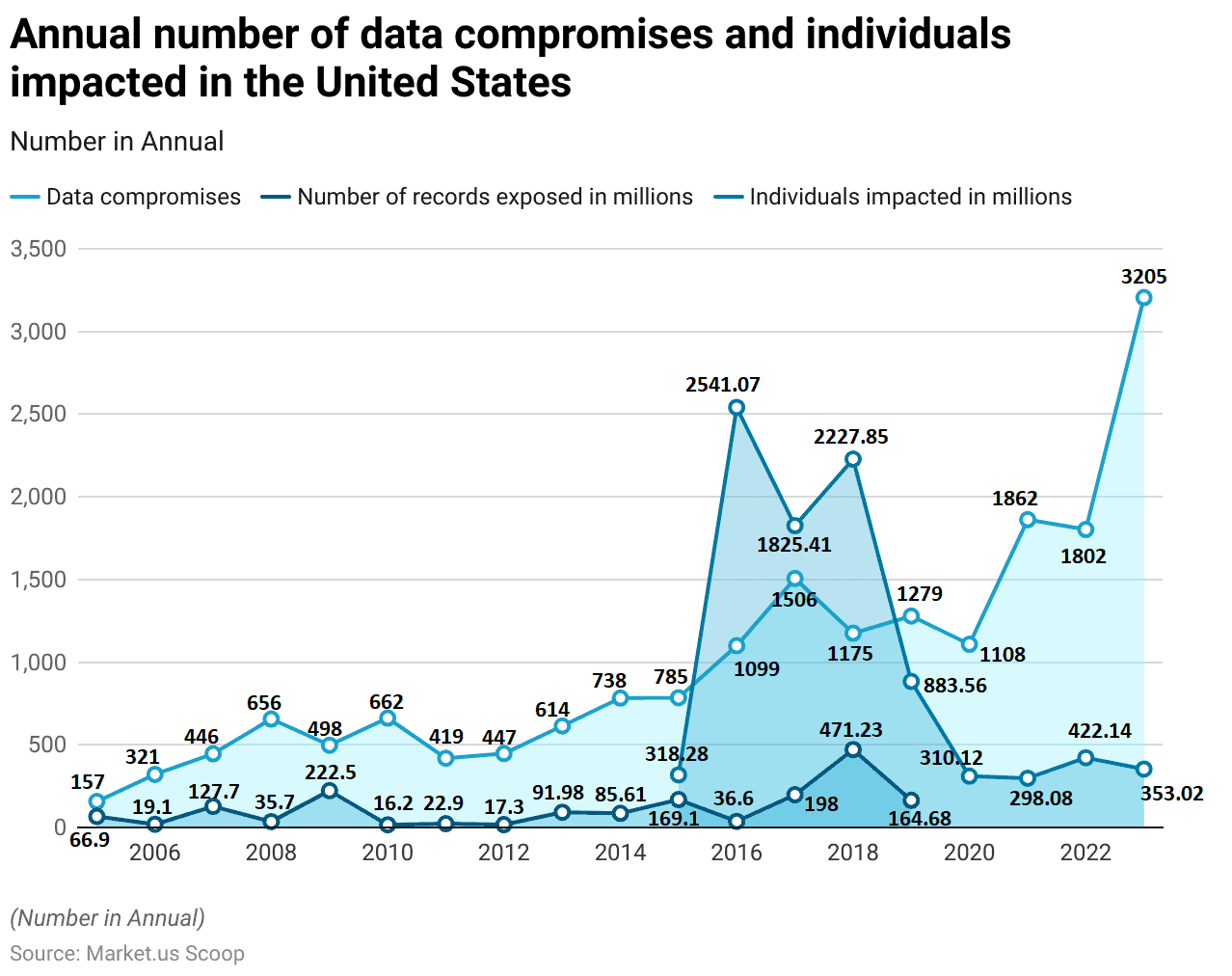

2005-2013

- The annual number of data compromises and the number of individuals impacted in the United States have shown a significant upward trend from 2005 to 2023.

- In 2005, there were 157 data compromises, exposing 66.9 million records.

- By 2006, this number had more than doubled to 321 compromises, though the number of records exposed dropped to 19.1 million.

- In 2007, data breaches increased to 446, with 127.7 million records exposed, followed by a jump to 656 breaches in 2008, exposing 35.7 million records.

- In 2009, the number of data compromises fell to 498, but the number of records exposed rose to 222.5 million.

- In 2010, the number of breaches grew to 662, but only 16.2 million records were exposed.

- Over the next few years, the number of breaches fluctuated, with 419 in 2011 and 447 in 2012, but record exposures remained relatively low compared to previous years.

- By 2013, the number of breaches increased to 614, exposing 91.98 million records.

2014-2023

- The years 2014 and 2015 saw an increase in breaches to 783 and 785, respectively, with 85.61 million records exposed in 2014 and 169.1 million in 2015, impacting 318.28 million individuals.

- The number of compromises surged further in 2016 to 1,099, with 36.6 million records exposed and 2.54 billion individuals affected.

- Data breaches reached a then-record 1,506 compromises in 2017, exposing 198 million records and impacting 1.83 billion individuals.

- In 2018, the number of breaches dropped to 1,175, but 471.23 million records were exposed, affecting 2.23 billion people.

- The trend stabilized somewhat in 2019, with 1,279 breaches and 164.68 million records exposed, impacting 883.56 million individuals.

- In 2020, there were 1,108 breaches, and 310.12 million people were affected.

- The number of data breaches rose sharply to 1,862 in 2021 and stayed near that level in 2022, at 1,802, with millions of individuals affected each year.

- In 2023, data compromises reached an all-time high of 3,205, with 353.02 million individuals impacted.

- These figures reflect the increasing frequency and scale of data breaches over time, highlighting the growing risks associated with data security.

(Source: Statista)

Regulations for Data Lake Statistics

- Regulations for data lakes vary by region, but they generally focus on data privacy, security, and governance to ensure compliance with national and international laws.

- For instance, in the European Union, the General Data Protection Regulation (GDPR) imposes strict controls on how personal data is stored and accessed, including within data lakes. It calls for safeguards such as encryption and for the ability to honor data deletion (“right to erasure”) requests.

- Similarly, in the U.S., laws like the California Consumer Privacy Act (CCPA) and state-specific regulations such as the Texas Data Privacy and Security Act (TDPSA) require organizations that hold personal data in data lakes to safeguard it, restrict unauthorized access, and allow individuals to request the deletion of their information.

- Countries like Brazil, with its General Data Protection Law (LGPD), also enforce stringent data governance rules.

- In practice, complying with these laws typically means maintaining detailed access logs, using encryption, and implementing role-based access controls to protect sensitive data within data lakes.

(Sources: InCountry, World Population Review, Qubole)
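The Python sketch below roughly illustrates three of these controls together: role-based access checks, an append-only access log, and a handler for deletion requests. Everything in it is hypothetical. The role names, the `DataLakeZone` and `AccessLog` classes, and the in-memory datasets stand in for what a real platform would provide through its own catalog, identity, and storage services. Encryption is deliberately left out. The sketch is not a statement of what any specific regulation requires.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real platform would use its own
# policy engine (for example, a data catalog or IAM service).
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "data_engineer": {"read", "write"},
    "privacy_officer": {"read", "delete"},
}


@dataclass
class AccessLog:
    """Append-only record of every access attempt, allowed or denied."""
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, action: str, dataset: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "dataset": dataset,
            "allowed": allowed,
        })


@dataclass
class DataLakeZone:
    # Dataset name -> list of records; stands in for files in object storage.
    datasets: dict
    log: AccessLog = field(default_factory=AccessLog)

    def _check(self, user: str, role: str, action: str, dataset: str) -> None:
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.log.record(user, role, action, dataset, allowed)  # log before enforcing
        if not allowed:
            raise PermissionError(f"role {role!r} may not {action} {dataset!r}")

    def read(self, user: str, role: str, dataset: str) -> list:
        self._check(user, role, "read", dataset)
        return list(self.datasets[dataset])  # return a copy, not the stored list

    def erase_subject(self, user: str, role: str, dataset: str, subject_id) -> int:
        """Handle a deletion request: drop every record about one data subject."""
        self._check(user, role, "delete", dataset)
        before = len(self.datasets[dataset])
        self.datasets[dataset] = [
            r for r in self.datasets[dataset] if r.get("subject_id") != subject_id
        ]
        return before - len(self.datasets[dataset])


if __name__ == "__main__":
    zone = DataLakeZone({"customers": [
        {"subject_id": 1, "email": "a@example.com"},
        {"subject_id": 2, "email": "b@example.com"},
    ]})
    print(len(zone.read("ana", "analyst", "customers")))
    try:
        zone.erase_subject("ana", "analyst", "customers", 1)  # denied, but logged
    except PermissionError as err:
        print(err)
    print(zone.erase_subject("pat", "privacy_officer", "customers", 1))
    print([e["allowed"] for e in zone.log.entries])
```

Denied attempts are logged as well as successful ones. That way, the audit trail shows who tried to reach sensitive data, not only who succeeded.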

Innovations in Data Lake Statistics

- Innovations in data lakes are transforming how companies manage, analyze, and leverage massive amounts of data.

- A key development is the rise of data lakehouses, which combine the flexibility of data lakes with the structured processing power of data warehouses, enabling real-time analytics and machine learning in a unified platform.

- Companies like Databricks and Snowflake are at the forefront, while distributed processing engines such as Apache Spark (on which the Databricks platform is built) and Presto enable scalable and efficient analysis of data stored in lakes.

- Qubole has introduced features like automated lifecycle management and real-time cost optimization, which help businesses monitor and control big data expenditures while scaling operations.

- Furthermore, innovations like Amazon S3 Express One Zone, a high-performance storage class, allow faster access to data. This brings object storage closer to operational databases in speed and increases efficiency when processing high-volume datasets.

- As these platforms evolve, enhanced data governance and security features, such as role-based access controls and encryption, help organizations comply with stringent regulations.

(Sources: Qubole, Datanami, DATAVERSITY)

Recent Developments

Acquisitions and Mergers:

- Snowflake acquires Neeva: In 2023, Snowflake, a leader in data cloud solutions, acquired Neeva, a search and analytics company, for $250 million. This acquisition aims to enhance Snowflake’s capabilities in data lakes by improving its search and query functionality across vast data repositories.

- Databricks acquires MosaicML: In 2023, Databricks, a prominent player in the data lakehouse market, acquired MosaicML, a machine learning platform, for $1.3 billion. This acquisition enhances Databricks’ offering by combining advanced AI and machine learning with its data lake capabilities to improve real-time data analysis.

New Product Launches:

- AWS announces Amazon DataZone: In late 2023, Amazon Web Services (AWS) launched Amazon DataZone, a new data management service designed to help organizations discover, manage, and govern data across their data lakes. It simplifies the sharing and cataloging of data while ensuring compliance with privacy standards.

- Google Cloud BigLake: Google Cloud’s BigLake, first announced in 2022 and extended since, is a unified storage engine designed to combine data lakes and warehouses. BigLake enables businesses to manage and analyze data from multiple sources in real time, offering enhanced scalability and cost efficiency.

Funding:

- Dremio raises $160 million in Series E funding: In 2022, Dremio, a company specializing in data lake analytics, raised $160 million to expand its platform’s capabilities. The funding will be used to further develop its data lakehouse infrastructure, which allows businesses to run analytics directly on cloud data lakes without needing traditional data warehouses.

- Starburst secures $250 million in Series D funding: In 2022, Starburst, a company that simplifies data access and analytics across data lakes, raised $250 million to enhance its query engine technology. The funding will help Starburst scale its global presence and expand its platform built on the open-source Trino query engine, making data lake analytics more accessible to enterprises.

Technological Advancements:

- AI-Powered Data Lake Analytics: AI and machine learning are becoming integral to data lake management and analytics. By 2025, over 40% of large enterprises are expected to implement AI-driven data lakes to automate data ingestion, improve query performance, and provide advanced insights.

- Data Lakehouse Architecture: The combination of data lakes and data warehouses, known as data lakehouse architecture, is becoming more popular. By 2026, it is projected that 60% of enterprises will adopt data lakehouse solutions to enable real-time analytics and simplify data management.

Market Dynamics:

- Growth in Data Lake Market: The data lake market continues to grow. This growth is fueled by the increasing volume of unstructured data and the need for scalable, cost-effective solutions to manage and analyze vast data sets.

- Rising Demand for Real-Time Analytics: As more businesses prioritize real-time decision-making, the demand for data lakes that support real-time data analytics is increasing. By 2025, 35% of enterprises are expected to deploy real-time analytics platforms integrated with their data lakes to gain faster insights and improve business outcomes.

Conclusion

Data Lake Statistics – Data lakes have transformed how organizations store and analyze vast amounts of structured and unstructured data by centralizing it in raw form, offering flexibility and scalability.

Their ability to handle diverse data types at a low cost makes them ideal for modern analytics and machine learning.

However, challenges like data governance and the risk of disorganized data (or “data swamps”) must be managed carefully.

As cloud computing and AI evolve, data lakes will become even more scalable and accessible, with lakehouse architectures improving operational effectiveness. When implemented strategically, data lakes enable advanced analytics and drive innovation.

FAQs

A data lake is a centralized repository that stores structured, semi-structured, and unstructured data in its raw form, allowing businesses to store vast amounts of data for later processing and analysis.

A data lake stores raw data in its native format without predefined schemas, offering flexibility and scalability, whereas a data warehouse stores processed and structured data optimized for specific queries and reports.
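The difference between schema-on-write and schema-on-read can be sketched in a few lines of Python. This is a simplified illustration built on assumed sample data: `RAW_EVENTS` and both function names are hypothetical. The warehouse-style loader checks each record against a fixed schema at load time and rejects anything that does not fit. The lake-style query keeps the raw lines untouched and applies a schema, chosen per query, only when the data is read.

```python
import json

# Hypothetical raw events as they might land in a data lake: JSON lines whose
# fields vary from record to record.
RAW_EVENTS = [
    '{"user": "u1", "action": "click", "ms": 120}',
    '{"user": "u2", "action": "view"}',
    '{"user": "u3", "action": "click", "ms": "95", "device": "mobile"}',
]


def load_schema_on_write(lines):
    """Warehouse style: enforce a fixed schema at load time; reject nonconforming rows."""
    accepted, rejected = [], []
    for line in lines:
        rec = json.loads(line)
        if set(rec) == {"user", "action", "ms"} and isinstance(rec["ms"], int):
            accepted.append(rec)
        else:
            rejected.append(line)
    return accepted, rejected


def query_schema_on_read(lines, fields):
    """Lake style: keep raw lines as-is; pick and cast fields only when queried.

    `fields` maps each field name to a casting function; missing fields become None.
    """
    for line in lines:
        rec = json.loads(line)
        yield {
            name: cast(rec[name]) if rec.get(name) is not None else None
            for name, cast in fields.items()
        }


if __name__ == "__main__":
    accepted, rejected = load_schema_on_write(RAW_EVENTS)
    print(len(accepted), "accepted,", len(rejected), "rejected")
    for row in query_schema_on_read(RAW_EVENTS, {"user": str, "ms": int}):
        print(row)
```

The raw lines are never rewritten, so a later query can apply a different schema, such as `{"user": str, "device": str}`, to the same data without reloading it. The schema-on-write loader, by contrast, has already discarded the two records that did not match.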

Data lakes can store all types of data, including structured data (like databases), semi-structured data (like logs and JSON), and unstructured data (like images, videos, and text documents).

The main benefits include the ability to store large volumes of diverse data, scalability, cost-efficiency, and flexibility for advanced analytics, machine learning, and real-time data processing.

The primary challenge is ensuring proper data governance and management to avoid the data lake turning into a “data swamp,” where disorganized and inaccessible data hinders its usefulness.