
Introduction

Graphics Processing Units (GPUs) have become a crucial component of modern computing systems, playing a pivotal role in fields ranging from graphics rendering to scientific simulation and artificial intelligence. Their primary function is to handle the massive computational load involved in rendering high-resolution graphics and complex visual effects.

Originally designed to accelerate visual rendering, GPUs have evolved into highly parallel processors capable of complex mathematical computation. This evolution has opened up applications in domains such as gaming, machine learning, and scientific research.

Editor’s Choice

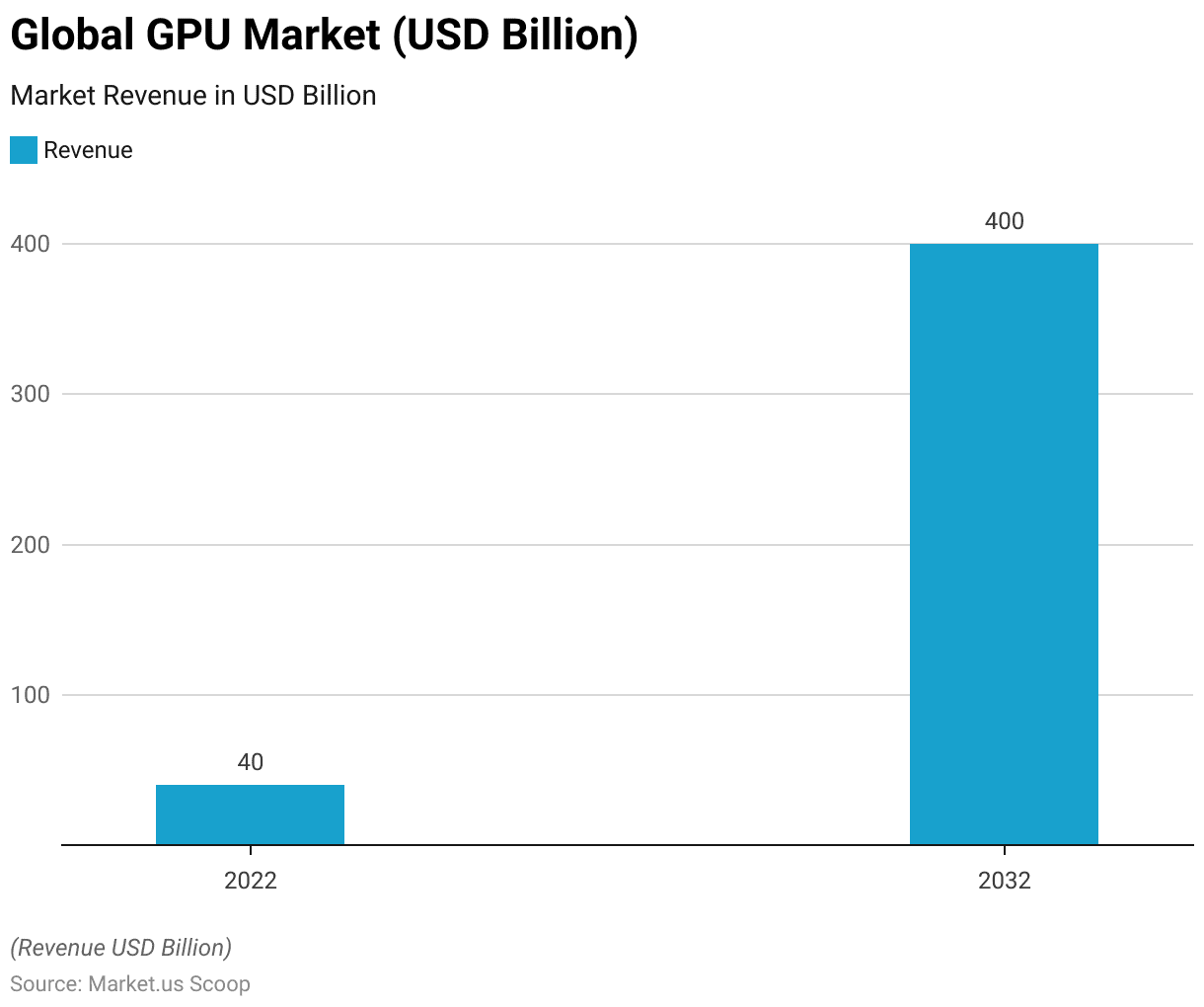

- In 2022, the global GPU market was valued at 40 billion U.S. dollars, and it is projected to reach 400 billion U.S. dollars by 2032, with a compound annual growth rate (CAGR) of 25% from 2023 to 2032.

- In 2019, the leading vendors in the global graphics processing unit (GPU) market generated combined revenue of 18.2 billion U.S. dollars.

- NVIDIA entered the market in 1993, but it did not gain significant recognition until 1997, when it launched the RIVA 128, a graphics processor that combined 3D acceleration with traditional 2D and video acceleration.

- The NVIDIA Titan RTX is a top-tier gaming GPU that is also well suited to demanding deep learning workloads. It has 4,608 CUDA cores and 576 Tensor Cores.

- Sold by PNY, the NVIDIA Quadro RTX 8000 was marketed as the world’s most powerful graphics card and is well suited to the large matrix multiplications used in deep learning.

- Most current GPUs handle gaming well on 1440p Quad HD (QHD) monitors and high-refresh-rate displays, and they support immersive VR experiences.

Global GPU Market Size

- In 2022, the worldwide market for graphics processing units (GPUs) achieved a valuation of 40 billion U.S. dollars.

- Projections indicate it will reach 400 billion U.S. dollars by 2032, a compound annual growth rate (CAGR) of 25% from 2023 to 2032.
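The two market-size figures and the growth rate are linked by the standard compound annual growth rate relationship, CAGR = (end / start)^(1 / years) - 1. The minimal sketch below checks how the rounded 25% rate lines up with the 40 and 400 billion dollar figures, assuming ten annual compounding periods between the 2022 base and 2032; that period count is an assumption, not something the text states.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures from the text, in billions of U.S. dollars.
# Assumption: growth compounds over the ten years from 2022 to 2032.
implied_rate = cagr(40, 400, 10)
projected_2032 = project(40, 0.25, 10)

print(f"Implied CAGR for a 10x rise: {implied_rate:.1%}")
print(f"2032 value at exactly 25% CAGR: ${projected_2032:.1f}B")
```

Under this assumption the implied rate is about 25.9%, and compounding at exactly 25% gives roughly $372.5B by 2032, so the quoted 25% is best read as a rounded rate.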

Key Market Players

- In 2019, the leading vendors in the global graphics processing unit (GPU) market generated combined revenue of 18.2 billion U.S. dollars.

- This figure is projected to reach 35.3 billion U.S. dollars by 2025.

- By comparison, the top three vendors generated 8.1 billion U.S. dollars in revenue in 2015.

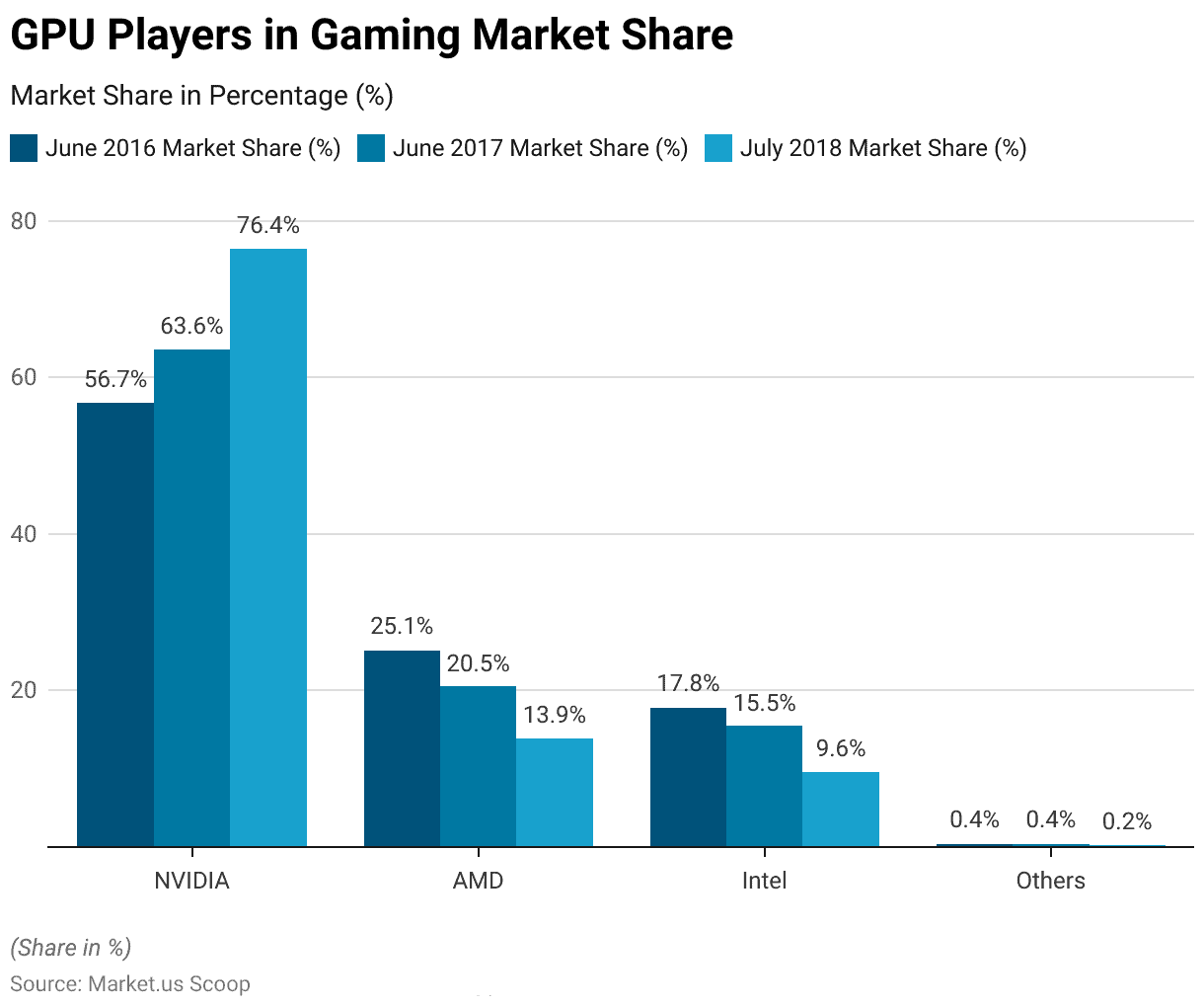

- In the gaming segment, NVIDIA held a dominant 56.7% market share in June 2016, rising to 63.6% in June 2017 and 76.4% in July 2018.

- AMD’s share, by contrast, fell from 25.1% in June 2016 to 20.5% in June 2017 and 13.9% in July 2018.

- Intel’s share likewise slipped from 17.8% in June 2016 to 15.5% in June 2017 and 9.6% in July 2018.
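Because these figures are shares of a single whole, changes between periods are clearest in percentage points rather than percent. The short sketch below uses only the shares quoted above to compute each vendor’s change from June 2016 to July 2018 and the combined share in each period.

```python
# Gaming-segment GPU market shares (%) quoted in the text.
shares = {
    "NVIDIA": {"Jun 2016": 56.7, "Jun 2017": 63.6, "Jul 2018": 76.4},
    "AMD": {"Jun 2016": 25.1, "Jun 2017": 20.5, "Jul 2018": 13.9},
    "Intel": {"Jun 2016": 17.8, "Jun 2017": 15.5, "Jul 2018": 9.6},
}


def point_change(series: dict[str, float], start: str, end: str) -> float:
    """Change in share between two periods, in percentage points (1 decimal)."""
    return round(series[end] - series[start], 1)


for vendor, series in shares.items():
    change = point_change(series, "Jun 2016", "Jul 2018")
    print(f"{vendor}: {change:+.1f} percentage points")

# Combined share of the three vendors in each period.
for period in ("Jun 2016", "Jun 2017", "Jul 2018"):
    total = sum(series[period] for series in shares.values())
    print(f"{period}: {total:.1f}% combined")
```

NVIDIA gains 19.7 points over the period while AMD and Intel lose 11.2 and 8.2 points, and the three shares sum to just under 100% in each period.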

Evolution of the Graphics Processing Unit (GPU)

- Before modern graphics cards, video display cards were the norm. IBM played a significant early role by introducing the Monochrome Display Adapter (MDA) in 1981.

- In 1996, 3dfx Interactive introduced the Voodoo1 graphics chip, which first gained prominence in the arcade industry and deliberately left out 2D graphics capabilities. This bold 3D-only design played a pivotal role in sparking the 3D revolution.

- Roughly a year later, its successor, the Voodoo2, arrived as one of the first video cards that let two cards work in parallel within a single PC, a technique 3dfx called Scan-Line Interleave (SLI).

- NVIDIA entered the market in 1993, but it did not gain significant recognition until 1997, when it launched the RIVA 128, a graphics processor that combined 3D acceleration with traditional 2D and video acceleration.

- NVIDIA popularized the term “GPU” when it unveiled the GeForce 256 in 1999, which it marketed as the world’s first GPU, and it went on to play a pivotal role in shaping contemporary graphics processing.

- The GeForce 256 was a leap beyond its predecessors, the RIVA processors, and marked a substantial advance in 3D gaming performance.

- NVIDIA’s momentum continued with the GeForce 8800 GTX, which offered a texture fill rate of 36.8 billion texels per second.

- In 2009, ATI, which AMD had acquired in 2006, introduced the influential Radeon HD 5970 dual-GPU card.

GPU Architecture

Memory Hierarchy

- At the core of the architecture is a large, unified register file of 32,768 registers per Streaming Multiprocessor (SM).

- The chip contains 16 SMs, each with a 128KB register file and 32 cores.

- Across all 16 SMs, this adds up to 2MB of register storage distributed throughout the chip.

- Each SM can hold up to 48 resident warps, or 1,536 threads. Dividing the register file among that many threads leaves about 21 registers per thread.

- This memory has low latency, within 20 to 30 cycles.

- It also provides high bandwidth, exceeding 1,000 GB/s.

- The architecture also includes specialized caches for textures and constants, including a 64KB read-only constant memory space served through a constant cache.

- A 12KB texture cache is also part of the design.

- The texture cache achieves a memory throughput of 739.63 GB/s, with a texture cache hit rate of 94.21%.

- The architecture delivers up to 177 GB/s of throughput.
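The register-file figures in this list are connected by simple arithmetic: registers times register width gives the per-SM size, that size times the SM count gives the chip total, and the register file divided by the maximum resident threads gives the per-thread budget. The sketch below reproduces them, assuming 32-bit (4-byte) registers and the 32-thread warp size used by CUDA; neither assumption is stated explicitly in the text.

```python
# Register-file figures quoted in the text (per Streaming Multiprocessor).
REGISTERS_PER_SM = 32_768
NUM_SMS = 16
MAX_WARPS_PER_SM = 48
BYTES_PER_REGISTER = 4   # assumption: 32-bit registers
THREADS_PER_WARP = 32    # assumption: standard CUDA warp size


def register_file_kib(registers: int, bytes_per_register: int) -> int:
    """Size of one SM's register file in KiB."""
    return registers * bytes_per_register // 1024


def registers_per_thread(registers: int, warps: int, warp_size: int) -> int:
    """Registers available to each thread when `warps` warps are resident."""
    threads = warps * warp_size
    return registers // threads  # integer division: leftover registers go unused


per_sm_kib = register_file_kib(REGISTERS_PER_SM, BYTES_PER_REGISTER)
chip_mib = per_sm_kib * NUM_SMS / 1024
threads_per_sm = MAX_WARPS_PER_SM * THREADS_PER_WARP
regs_per_thread = registers_per_thread(REGISTERS_PER_SM, MAX_WARPS_PER_SM, THREADS_PER_WARP)

print(f"Register file per SM: {per_sm_kib} KiB")
print(f"Register file per chip: {chip_mib:.0f} MiB")
print(f"Resident threads per SM: {threads_per_sm}")
print(f"Registers per thread at full occupancy: {regs_per_thread}")
```

The per-thread figure is a floor: 32,768 / 1,536 is about 21.3, so a kernel that needs more than 21 registers per thread cannot keep all 48 warps resident at once.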

Comparison Between CPU and GPU

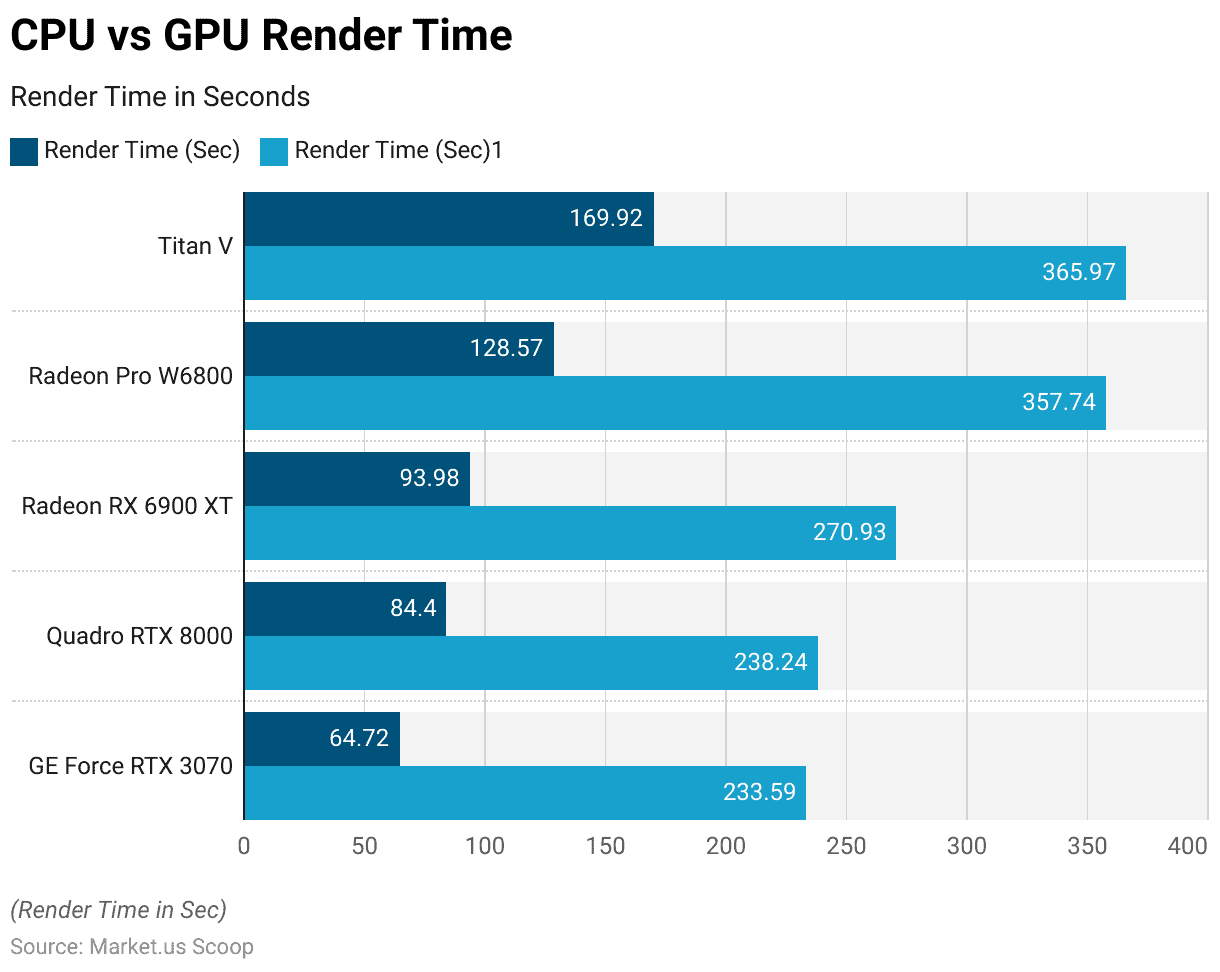

- A comparison of render times across several GPU and CPU pairings shows the GPUs finishing well ahead. The GeForce RTX 3070 completed the render in 64.72 seconds, while the Intel Core i9-12900K took 233.59 seconds.

- Similarly, the Quadro RTX 8000 recorded a render time of 84.4 seconds, compared with 238.24 seconds for the 16-core AMD Ryzen 9 3950X.

- The Radeon RX 6900 XT rendered in 93.98 seconds, compared with 270.93 seconds for the Intel Core i7-12700KF.

- The Radeon Pro W6800 took 128.57 seconds, while the Intel Core i9-11900K @ 3.50GHz took 357.74 seconds.
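Render times are easiest to compare as a speedup ratio, CPU time divided by GPU time. The sketch below applies that ratio to the four pairings above; the pairs are not matched on price or generation, so each ratio describes only that specific pairing.

```python
# Render times in seconds as quoted in the text: (GPU, GPU time, CPU, CPU time).
results = [
    ("GeForce RTX 3070", 64.72, "Intel Core i9-12900K", 233.59),
    ("Quadro RTX 8000", 84.4, "AMD Ryzen 9 3950X", 238.24),
    ("Radeon RX 6900 XT", 93.98, "Intel Core i7-12700KF", 270.93),
    ("Radeon Pro W6800", 128.57, "Intel Core i9-11900K", 357.74),
]


def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """How many times faster the GPU finished the render than the CPU."""
    return cpu_seconds / gpu_seconds


for gpu, gpu_time, cpu, cpu_time in results:
    print(f"{gpu} vs {cpu}: {speedup(cpu_time, gpu_time):.2f}x faster")
```

Across these pairings the GPU finishes roughly 2.8 to 3.6 times faster.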

Applications of GPUs

Gaming

- Modern games often place heavier demands on the GPU than on the CPU. Processing complex 2D and 3D graphics, rendering intricate polygons, and mapping textures efficiently all require a powerful, fast GPU. The faster your graphics card (GPU) processes this data, the more frames it can display per second, which results in smoother gameplay.

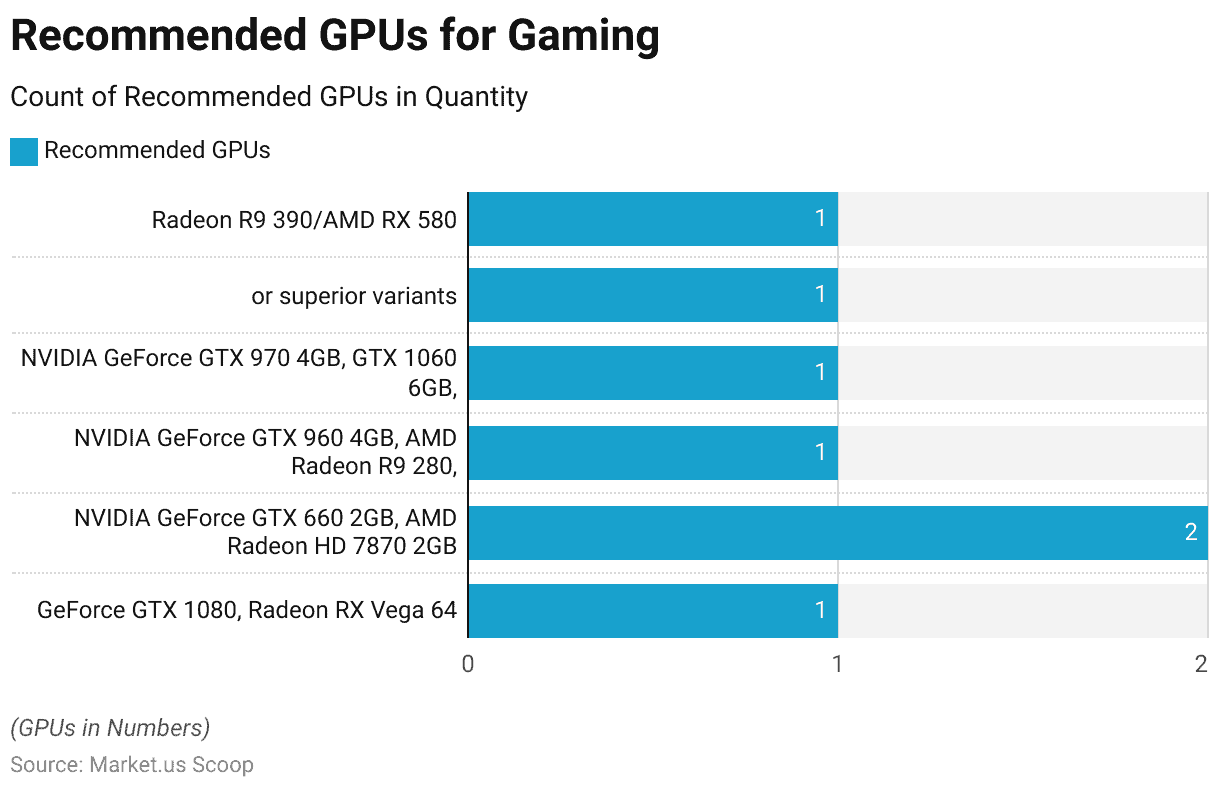

- For instance, the recommended graphics specifications for Call of Duty: Black Ops 4 include mid-range options like the NVIDIA GeForce GTX 970 4GB, GTX 1060 6GB, or Radeon R9 390/AMD RX 580.

- For World of Warcraft, the recommended GPUs are the NVIDIA GeForce GTX 960 4GB or the AMD Radeon R9 280 or better.

- The GTX 960 is a solid choice, delivering dependable 1080p performance with good power efficiency and low temperatures.

- The AMD R9 280 ships with 3GB of video memory, and both GPUs can run demanding games at high settings.

- For the expansive sandbox action-adventure title Grand Theft Auto V and the battle royale phenomenon Fortnite Battle Royale, the recommended GPUs are the NVIDIA GeForce GTX 660 2GB or the AMD Radeon HD 7870 2GB.

- These GPUs are affordably priced and capable of smooth 1080p gaming, suiting players who want performance without breaking the bank.
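The link between GPU speed and smooth gameplay described in this section comes down to a per-frame time budget: to sustain a given frame rate, the GPU must finish each frame within 1000 / fps milliseconds. In the minimal sketch below, the target frame rates are illustrative assumptions, not figures from the text.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time the GPU has to finish each frame to sustain `target_fps`."""
    return 1000.0 / target_fps


def achieved_fps(frame_time_ms: float) -> float:
    """Frame rate that results when every frame takes `frame_time_ms`."""
    return 1000.0 / frame_time_ms


# Common display refresh rates, chosen here only for illustration.
for fps in (30, 60, 144):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")

# A GPU that needs 20 ms per frame tops out at 50 fps.
print(f"20 ms per frame -> {achieved_fps(20.0):.0f} fps")
```

Higher refresh rates shrink the budget quickly, which is why a card that is comfortable at 1080p and 60 fps can fall short on a 144Hz display.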

Machine Learning and Deep Learning

- The NVIDIA Titan RTX is a top-tier gaming GPU that is also well suited to demanding deep learning workloads. It has 4,608 CUDA cores and 576 Tensor Cores.

- It has 24GB of GDDR6 memory and a memory bandwidth of 673 GB/s.

- NVIDIA’s Tesla GPUs are pioneering Tensor Core GPUs designed to accelerate critical workloads, including artificial intelligence, high-performance computing (HPC), deep learning, and machine learning.

- Built on the NVIDIA Volta architecture, the Tesla V100 delivers 125 TFLOPS of deep learning performance for both training and inference.

- It has 5,120 CUDA cores and 640 Tensor Cores.

- It offers 900 GB/s of memory bandwidth and 16GB of GPU memory, with a clock speed of 1246 MHz.

- Sold by PNY, the NVIDIA Quadro RTX 8000 was marketed as the world’s most powerful graphics card and is well suited to the large matrix multiplications used in deep learning.
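The Tesla V100’s 125 TFLOPS figure can be reconstructed from its core count with a peak-throughput formula: Tensor Cores × fused multiply-add (FMA) operations per core per clock × 2 FLOPs per FMA × clock rate. The sketch below assumes a rate of 64 FMA operations per Tensor Core per clock and a 1530 MHz boost clock, both taken from NVIDIA’s Volta materials rather than from this text, and also evaluates the 1246 MHz clock listed above.

```python
def peak_tensor_tflops(tensor_cores: int, fma_per_clock: int, clock_mhz: float) -> float:
    """Peak Tensor Core throughput in TFLOPS, counting each FMA as two operations."""
    flops_per_second = tensor_cores * fma_per_clock * 2 * clock_mhz * 1e6
    return flops_per_second / 1e12


V100_TENSOR_CORES = 640   # from the text
FMA_PER_CLOCK = 64        # assumption: per-Tensor-Core rate from NVIDIA's Volta materials
BOOST_CLOCK_MHZ = 1530    # assumption: V100 (SXM2) boost clock, not stated in the text
LISTED_CLOCK_MHZ = 1246   # clock speed quoted in the text

for label, clock in (("boost clock", BOOST_CLOCK_MHZ), ("listed clock", LISTED_CLOCK_MHZ)):
    tflops = peak_tensor_tflops(V100_TENSOR_CORES, FMA_PER_CLOCK, clock)
    print(f"{label} ({clock} MHz): {tflops:.1f} TFLOPS")
```

Under these assumptions the boost clock reproduces about 125 TFLOPS, while the listed 1246 MHz gives about 102 TFLOPS, which suggests the quoted peak figure is based on a higher clock than the one listed.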

Cryptocurrency Mining

- In 2021, graphics card sales surged, and the surge was driven not by demand for better gaming graphics but by the ability of Graphics Processing Units (GPUs) to mine cryptocurrency.

- At the top of the NVIDIA RTX 30 series, the Ampere-based RTX 3090 stands out for its high daily mining profitability.

- The AMD Radeon RX 5700 XT can mine cryptocurrencies such as ETH, GRIN, RVN, ZEL, XHV, ETC, and BEAM, and its power consumption is 225 watts.

- Built on NVIDIA’s Ampere architecture, the RTX A5000 has 8,192 CUDA cores and 24GB of GDDR6 memory on a 384-bit memory interface.

- It also reaches a boost clock of 1.75 GHz with a peak power consumption of 230W.
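Daily mining profitability, which the RTX 3090 entry refers to, is commonly estimated as mining revenue minus electricity cost, and the electricity cost follows directly from a card’s power draw. The sketch below uses the power figures quoted above; the daily revenue and electricity price are hypothetical placeholders, not data from the text.

```python
HOURS_PER_DAY = 24


def daily_energy_kwh(power_watts: float, hours: float = HOURS_PER_DAY) -> float:
    """Energy drawn by a card running at `power_watts` for `hours`, in kWh."""
    return power_watts * hours / 1000


def daily_profit(revenue_usd: float, power_watts: float, price_per_kwh: float) -> float:
    """Daily mining revenue minus the cost of running the card around the clock."""
    return revenue_usd - daily_energy_kwh(power_watts) * price_per_kwh


# Power figures from the text; revenue and electricity price are hypothetical.
cards = {"Radeon RX 5700 XT": 225, "RTX A5000": 230}
HYPOTHETICAL_REVENUE_USD = 1.50
HYPOTHETICAL_PRICE_PER_KWH = 0.12

for name, watts in cards.items():
    kwh = daily_energy_kwh(watts)
    profit = daily_profit(HYPOTHETICAL_REVENUE_USD, watts, HYPOTHETICAL_PRICE_PER_KWH)
    print(f"{name}: {kwh:.2f} kWh/day, profit ${profit:.2f}/day")
```

Only the energy figures (5.4 and 5.52 kWh per day) follow from the text; the profit line changes with whatever revenue and electricity price are plugged in.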
