Introduction

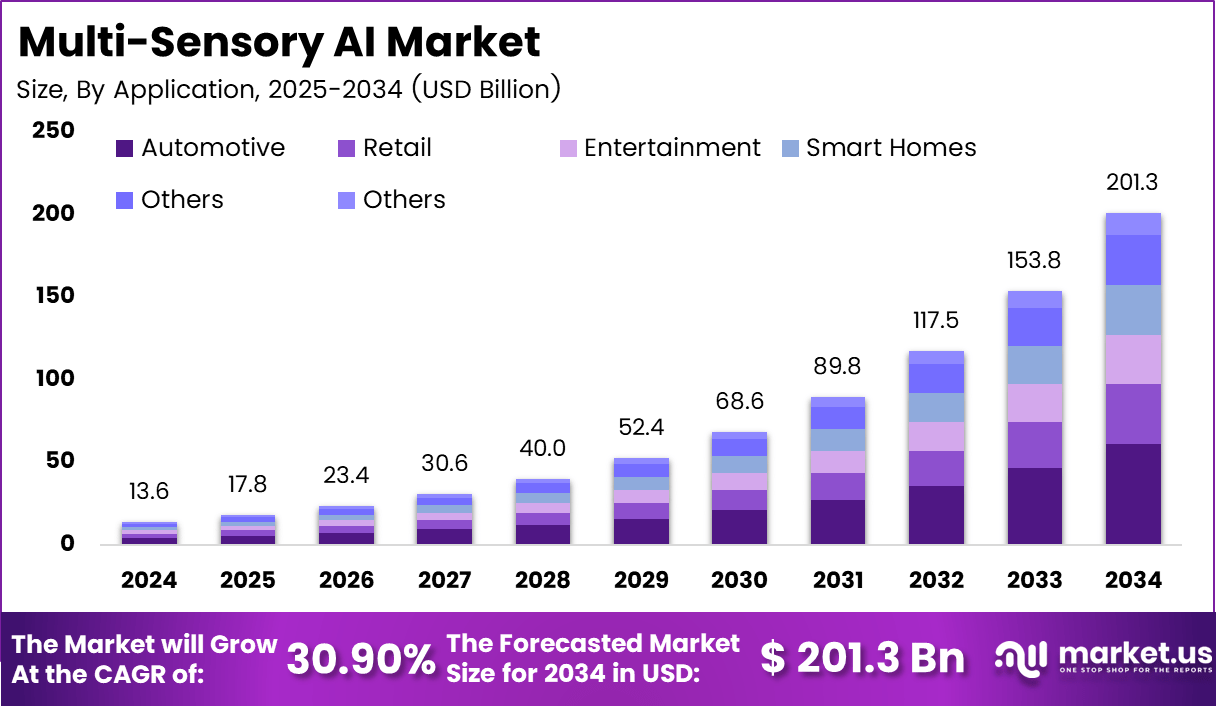

The global multi-sensory AI market generated USD 13.6 billion in 2024 and is projected to reach approximately USD 201.3 billion by 2034, a CAGR of 30.9%. In 2024, North America held a dominant share of more than 40.4%, with revenues of around USD 5.50 billion.
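
The headline figures can be cross-checked with a few lines of arithmetic. The sketch below is only a sanity check, not the report's methodology: it assumes ten annual compounding periods between 2024 and 2034, and the helper names `cagr` and `project` are illustrative.

```python
# Figures quoted in the text above (USD billions).
MARKET_2024 = 13.6
MARKET_2034 = 201.3
NORTH_AMERICA_2024 = 5.50
YEARS = 2034 - 2024  # assumption: ten annual compounding periods


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


implied_cagr = cagr(MARKET_2024, MARKET_2034, YEARS)
projected_2034 = project(MARKET_2024, 0.309, YEARS)
north_america_share = NORTH_AMERICA_2024 / MARKET_2024

print(f"Implied CAGR 2024-2034: {implied_cagr:.1%}")
print(f"2034 value at 30.9% CAGR: USD {projected_2034:.1f} billion")
print(f"North America share in 2024: {north_america_share:.1%}")
```

Under these assumptions the implied growth rate and regional share should land close to the quoted 30.9% and 40.4%, with small differences attributable to rounding in the published figures.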

This surge reflects rising demand for systems that integrate vision, hearing, touch and sensor-fusion capabilities across robotics, autonomous vehicles, healthcare and consumer electronics. Advances in machine learning and deep learning, together with interoperable sensor platforms, are enabling more human-comparable perception and decision-making in machines.

How Growth is Impacting the Economy

The rapid expansion of the multi-sensory AI market is expected to stimulate economic value by improving productivity across industries and fuelling high-skilled employment in AI engineering, sensor manufacturing and system integration. These systems enable faster automation of complex tasks in manufacturing, logistics and healthcare, reducing time-to-market and lowering operational waste. Public investments in smart infrastructure and autonomous systems are projected to catalyse productivity gains and regional competitiveness.

As firms adopt multi-sensory AI, there is a shift toward digital-twin-based production, remote service delivery and predictive maintenance, all of which reduce downtime and boost asset utilisation. The ripple effects include growth in IoT device ecosystems, increased demand for edge-computing capacity and a resurgence in hardware-component manufacturing. Ultimately, the market's growth is anticipated to underpin the next wave of global digital infrastructure, creating a multiplier effect across supply chains and services.

Impact on Global Businesses

Rising Costs & Supply Chain Shifts

Businesses adopting multi-sensory AI face increased costs related to advanced sensor arrays, high-capacity processing and real-time data fusion infrastructure. Supply chains are shifting to source sophisticated sensors, LiDAR, radar and high-performance computing modules. Firms are re-evaluating their vendor bases, locating production closer to demand centres to reduce latency and logistics risk.

Sector-Specific Impacts

In automotive and autonomous vehicles, the technology enables sophisticated driver-assistance systems, transforming vehicle safety and user experience. In healthcare, multi-sensory AI drives advanced diagnostics and wearable monitoring, shifting business models toward predictive and remote care. Consumer electronics and retail sectors use these systems for immersive user interfaces and personalised experiences, altering hardware design and user engagement strategies. Each sector is redefining operations around machine perception and multisensory interaction, compelling businesses to pivot toward integrated sensor-AI ecosystems.

Strategies for Businesses

Organisations should prioritise building scalable, modular multi-sensory AI architectures that support sensor fusion and real-time analytics. Collaborating with specialised hardware and sensor manufacturers will mitigate supply-chain disruption. Investing in edge-computing and hybrid cloud environments enables latency-sensitive deployment, while implementing strong data governance addresses privacy and ethical concerns.

Upskilling staff in multimodal AI frameworks, sensor integration and system calibration is critical. Businesses should also explore cross-sector partnerships (for example between automotive and healthcare) to leverage multi-sensory platforms across use cases. Finally, embedding analytics-driven feedback loops and performance monitoring allows continuous improvement and faster time-to-value.

Key Takeaways

- Market projected to grow to ~USD 201.3 billion by 2034 at 30.9% CAGR

- North America held over 40.4% share in 2024 with ~USD 5.50 billion revenue

- Multi-sensory AI enables machines to integrate vision, audio and tactile data in real time

- Rising hardware and sensor costs as well as supply-chain localisation are business challenges

- Key sectors impacted: automotive, healthcare, consumer electronics and retail

- Strategic focus on modular architecture, sensor fusion and edge deployment is essential

Analyst Viewpoint

Currently, the multi-sensory AI market is entering a phase of accelerated adoption driven by advanced sensor technologies and AI-fusion algorithms. The outlook remains highly positive as industries move beyond unimodal systems to richer multimodal perception frameworks. Over the next decade, the market is expected to mature as ecosystems of sensors, AI models and computing infrastructure become commoditised and standardised. Analysts anticipate broad diffusion into mid-tier enterprises and emerging markets, underpinning new services and business models around immersive experiences, autonomous systems and digital-physical convergence.

Use Case and Growth Factors

| Use Case | Growth Factors |
|---|---|
| Autonomous vehicle perception (sensor fusion from cameras, radar, LiDAR) | Focus on vehicle safety and automation; rising sensor adoption; government support for AVs |
| Healthcare monitoring and diagnostics (wearables, vision + audio + sensor fusion) | Ageing population; need for remote-care; advanced sensor affordability |
| Consumer electronics immersive interfaces (voice + vision + touch) | Rising demand for natural human-computer interaction; proliferating IoT devices |
| Industrial automation and digital twins (robotics with multi-sensory feedback) | Manufacturing digitisation; rise of smart factories and edge-AI |
| Smart home and retail experiences (gestures, voice, visual recognition) | Consumer preference for personalised, immersive experiences; sensor cost decline |

Regional Analysis

North America held a dominant share of more than 40.4% in 2024, owing to advanced research hubs, high AI adoption, and strong sensor-hardware ecosystems. Europe is expanding steadily with a strong emphasis on automation, robotics, and regulatory frameworks for AI deployment. Asia Pacific is expected to witness the fastest growth over the forecast period, driven by large-scale manufacturing of sensors, IoT penetration, strong automotive and electronics sectors, and government initiatives in China, Japan, and Korea. Emerging regions such as Latin America and the Middle East and Africa are gradually adopting multi-sensory AI in smart-city, healthcare, and retail initiatives, offering growth potential as infrastructure improves.

Business Opportunities

The multi-sensory AI market offers compelling business opportunities for sensor manufacturers, hardware integrators, edge-AI providers and software-platform developers. There is scope for service providers delivering turnkey solutions in healthcare monitoring, industrial automation and automotive perception systems.

Emerging players can carve out niches in the metaverse and AR/VR experiences by embedding multi-sensory feedback. Partnerships between hardware and software firms enable ecosystem plays. Subscription-based models for real-time sensor-fusion analytics or industrial maintenance platforms open recurring-revenue streams. The localisation of sensor production and edge-deployment services in emerging markets presents additional growth avenues.

Key Segmentation

The market is segmented by technology (natural-language processing, computer vision, speech recognition, machine learning & deep learning, sensor fusion), by deployment mode (cloud-based, edge computing, on-premises), by application (automotive, healthcare, retail, consumer electronics, industrial automation) and by region (North America, Europe, Asia Pacific, Latin America, Middle East & Africa). Among technology segments, machine learning & deep learning held the largest share in 2024, as these techniques underlie the multi-sensory fusion process. Edge-computing deployment is projected to grow strongly, driven by latency-sensitive applications.

Key Player Analysis

Market participants are focused on strengthening sensor-AI integration, end-to-end multimodal solutions and cross-industry partnerships. They are investing in developing proprietary sensor arrays (vision, LiDAR, radar, audio), deep-learning fusion models, and scalable edge/cloud platforms. Collaboration with automotive, healthcare, robotics and consumer-electronics firms enables ecosystem scale-up. R&D investments target real-time processing, low-latency sensor fusion and energy-efficient modules. Competitive strategy emphasises global footprint expansion, strategic alliances and acquisitions to build integrated hardware-software stacks that facilitate faster deployment of multi-sensory AI across multiple use-cases.

- Aryballe Technologies

- Databricks

- IBM Corporation

- Immersion Corporation

- Meta

- Microsoft Corporation

- Mobileye

- Nuance Communications

- NVIDIA

- Palantir Technologies

- TechSee

- Advanced Micro Devices Inc.

- Amazon Web Services Inc.

- Others

Recent Developments

- In 2025 Q1, a major tech group unveiled a new multimodal sensor-fusion platform for autonomous vehicles.

- In 2024 Q4, a healthcare provider announced the deployment of wearables with vision, audio, and tactile sensing for remote patient monitoring.

- In 2024 Q3, a consumer electronics OEM launched an immersive smart-home assistant with vision, voice, and gesture control capabilities.

- In 2024 Q2, an industrial automation firm integrated multi-sensory AI in its digital-twin platform to enable predictive maintenance in manufacturing.

- In 2024 Q1, a government initiative in North America awarded grants for research in multi-sensory AI systems for smart-city infrastructure.

Conclusion

The global multi-sensory AI market is poised for extraordinary growth, underpinned by sensor-fusion innovations and expanding applications across sectors. Businesses that invest in modular architectures, scalable deployment, and cross-industry partnerships will lead in the upcoming era of human-comparable machine perception and automation.
