Report Overview

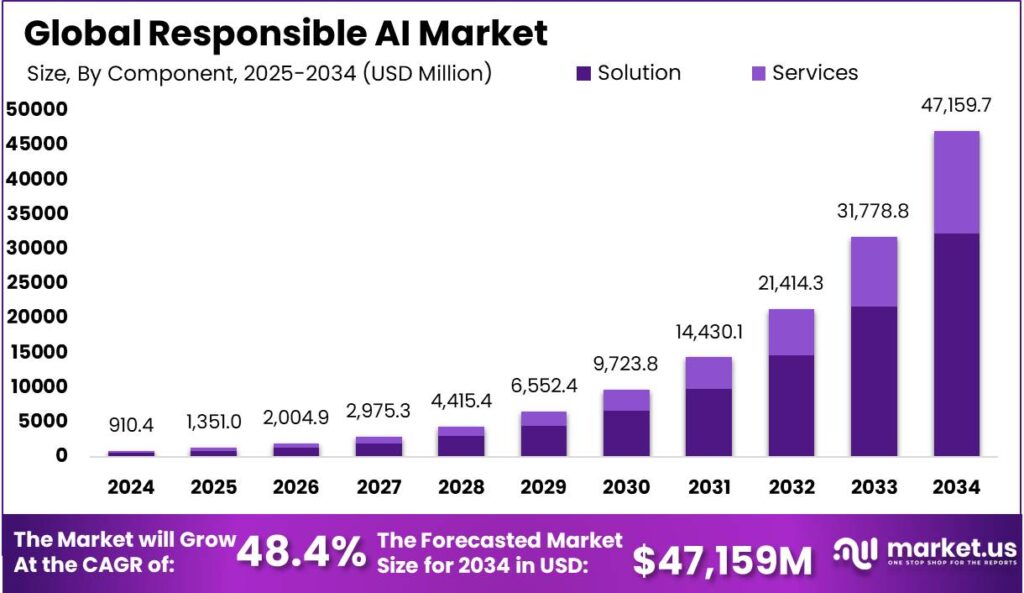

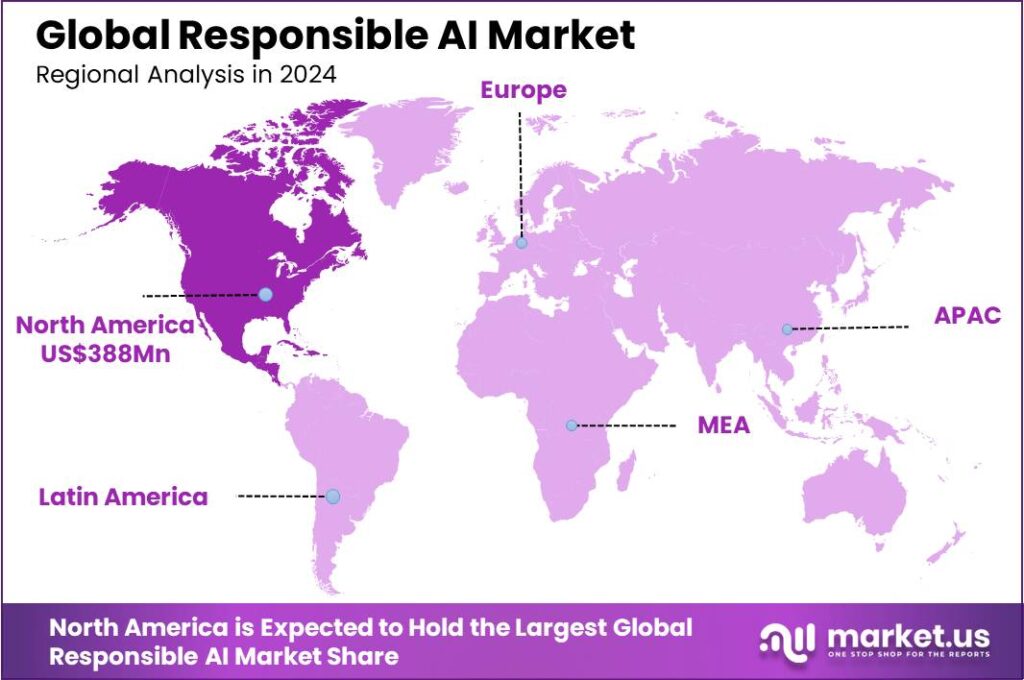

The Global Responsible AI market is set for significant expansion, with forecasts indicating it will reach USD 47,159 million by 2034, up from USD 910.4 million in 2024. This marks a robust compound annual growth rate (CAGR) of 48.40% from 2025 to 2034. In 2024, North America is expected to dominate the market, accounting for over 42.7% of the global share, which translates to revenues of approximately USD 388 million.
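The headline figures can be checked with a few lines of arithmetic. The sketch below recomputes the implied growth rate and the North American revenue from the numbers quoted above. It assumes ten compounding periods, with 2024 as the base year and 2034 as the end year; the function and variable names are illustrative only.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.484 means 48.4%)."""
    return (end_value / start_value) ** (1 / periods) - 1


# Figures quoted in the report, in USD million.
market_2024 = 910.4
market_2034 = 47_159.0

# Assumption: ten compounding periods (2024 base year through 2034).
growth = cagr(market_2024, market_2034, periods=10)
print(f"Implied CAGR: {growth:.1%}")

# North America's quoted 42.7% share applied to the 2024 base.
north_america_2024 = market_2024 * 0.427
print(f"North America 2024: USD {north_america_2024:.1f} million")
```

Under that assumption, the implied rate comes out very close to the reported 48.40%, and the regional figure is consistent with the roughly USD 388 million cited.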

Responsible AI refers to the development and deployment of AI systems that prioritize fairness, transparency, accountability, and ethical considerations. It’s about ensuring that AI is used in a way that benefits all of humanity while minimizing harm. As AI technologies continue to evolve, there is an increasing need for responsible AI practices.

The growth of responsible AI practices is driven by increased public and regulatory scrutiny of AI’s ethical implications, such as privacy concerns and biases. Governments are pushing for stronger regulations to ensure AI respects human rights. Additionally, there is rising demand for transparency in AI decision-making. As businesses use AI for tasks like hiring and loan approvals, stakeholders expect clear, explainable processes to avoid mistrust and negative perceptions, which could hinder AI adoption.

Key trends in responsible AI include the shift to “human-in-the-loop” systems, where AI collaborates with humans to prevent errors and biases while allowing for continuous oversight and improvements. Another trend is the rise of explainable AI (XAI), which aims to create models with clear, understandable justifications for their decisions, ensuring accountability in critical areas like healthcare and criminal justice.

As the demand for AI technologies grows across sectors, the market for responsible AI is expanding rapidly. Companies are increasingly investing in AI that aligns with ethical values, especially in industries such as healthcare, finance, and government, where the stakes are higher. This expansion is also being driven by the realization that responsible AI practices can lead to greater long-term success.

Key Takeaways

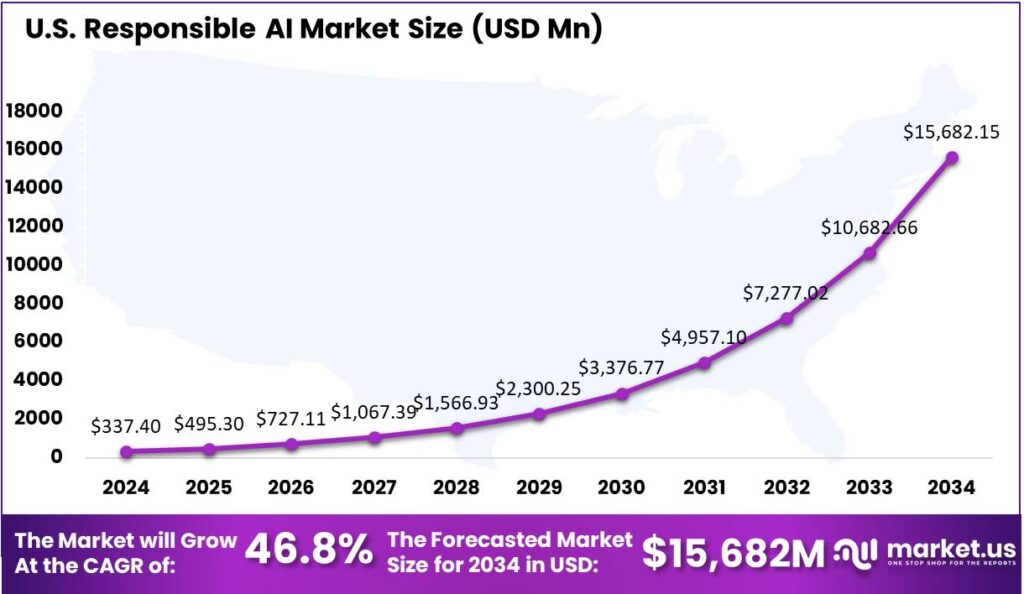

- The U.S. Responsible AI market is set to reach $337.4 million in 2024, with a projected CAGR of 46.8%.

- North America is projected to hold over 42.7% of the global Responsible AI market share in 2024, with revenues estimated at $388 million.

- The Solution segment is expected to lead the market, capturing more than 68.6% of the share.

- The On-Premises segment will dominate with over 62.5% of the market share.

- Large Enterprises are set to take the lead, comprising over 72.7% of the market share in 2024.

- The BFSI (Banking, Financial Services, and Insurance) segment is projected to dominate, holding more than 34.7% of the market share.

Analyst’s Viewpoint

The responsible AI market presents significant opportunities as organizations increasingly focus on ethical, transparent, and fair AI practices. Demand is rising for AI solutions that prioritize privacy, mitigate bias, and ensure accountability, driven by regulatory pressures and consumer awareness. The growing focus on AI governance, explainable AI, and ethics consulting presents opportunities as companies seek solutions that align with ethical standards. This fosters trust and compliance in sectors like healthcare, finance, and government.

Despite its growing importance, the widespread implementation of responsible AI faces several challenges. One of the biggest hurdles is the lack of standardized guidelines for responsible AI across industries. With various stakeholders, including governments, regulators, and businesses, all working on different frameworks, it’s difficult to ensure consistency and alignment. Another significant challenge is the technical difficulty in creating unbiased and transparent AI systems.

Technological innovations like explainable AI (XAI) are helping address responsible AI challenges by providing clear justifications for decisions, especially in sectors like healthcare. Federated Learning protects user data while enabling AI applications, and AI fairness toolkits help developers reduce bias in datasets. Additionally, AI-driven governance platforms assist organizations in complying with regulations and monitoring AI impacts in real-time.

Impact Of AI

- Promoting Fairness and Inclusivity: AI has the potential to remove biases in decision-making processes, ensuring that systems are fairer. By using diverse data, AI can help create more inclusive solutions that represent different cultures, backgrounds, and genders. This can prevent discrimination, ensuring that everyone gets a fair shot.

- Transparency in Decision-Making: As AI becomes integrated into everyday life, people want to understand how decisions are made. AI systems designed with responsibility in mind can provide explanations for their actions. When AI is transparent, users can trust that decisions are based on logical reasoning and not hidden agendas.

- Accountability and Trust: Responsible AI means accountability. When AI systems make errors, having clear lines of accountability is vital. It’s important for companies to have protocols in place to ensure that human oversight is always present, and AI systems are continuously monitored to prevent unintended consequences.

- Data Privacy and Security: AI impacts the way sensitive data is collected, stored, and used. Responsible AI ensures that personal data is protected and handled with the utmost care. By using encryption and other security measures, AI can work to minimize risks to data privacy, which is crucial as technology advances.

- Ethical AI Development: Ethical AI refers to developing and deploying AI systems that adhere to ethical standards. This means that AI should not harm people, and its development should prioritize social good. By adopting ethical guidelines, companies can ensure that AI is used responsibly in various industries, from healthcare to finance.

U.S. Responsible AI Market Size

The U.S. market for Responsible AI is expected to see significant growth in 2024, reaching a projected value of $337.4 million. This burgeoning market is entering a robust growth phase, with analysts predicting an impressive compound annual growth rate (CAGR) of 46.8%. This indicates that Responsible AI, a field focused on ensuring ethical, transparent, and accountable AI systems, is gaining traction rapidly across various industries.

As AI technologies become more integrated into critical sectors, such as healthcare, finance, and autonomous systems, the need for responsible and trustworthy AI systems is becoming paramount. Organizations are under increasing scrutiny to ensure that their AI models are not only effective but also aligned with ethical standards. This growing emphasis on Responsible AI is pushing businesses to invest in tools and strategies that mitigate risks like bias, discrimination, and lack of transparency.

In 2024, North America is expected to maintain a dominant position in the Responsible AI market, commanding over 42.7% of the total market share. The region is anticipated to generate revenues of approximately USD 388 million, solidifying its leadership in the adoption and implementation of ethical AI practices.

North America’s advanced technological infrastructure, robust AI research ecosystem, and strong regulatory framework contribute significantly to this commanding market share. As businesses across industries seek to leverage AI for strategic advantage, the demand for responsible and ethical AI solutions is soaring, particularly in the United States and Canada, where regulatory bodies are increasingly focused on AI governance and accountability.

The dominance of North America in the Responsible AI market can be attributed to several factors, including a growing emphasis on AI ethics and transparency, heightened consumer awareness about data privacy, and the region’s leadership in AI innovation. With the U.S. leading the charge, both public and private sectors are investing heavily in AI ethics research, developing tools and frameworks to ensure that AI systems operate in a fair and unbiased manner.

Emerging Trends

- Ethical Guidelines for AI: As AI continues to grow, there’s a stronger push for ethical guidelines to ensure it’s used in ways that are good for society. This involves balancing progress with the potential risks of automation, surveillance, and privacy concerns.

- Privacy Preservation in AI: Data privacy is becoming more important. New methods, like federated learning, are emerging to train AI models without needing to share sensitive data. This helps protect individual privacy while still enabling AI growth.

- AI Governance and Accountability: There’s a growing movement to establish clear rules for how AI should be governed. Governments and organizations are setting up frameworks to ensure AI is developed and deployed responsibly, keeping humans in control of decision-making.

- AI and Human Collaboration: Rather than replacing humans, AI is being designed to complement human abilities. It’s about collaboration—using AI to assist in tasks while leaving critical decision-making to people.

- AI Accountability and Auditing: As AI systems are used more widely, there is a growing need to hold them accountable for their actions. This includes creating methods to audit AI decisions, ensuring they align with ethical standards, and verifying they work as intended.
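To make the federated learning idea in the list above concrete, here is a minimal sketch of federated averaging on a toy one-parameter model. It is an illustration under simplifying assumptions, not a production recipe: the clients, their data, the learning rate, and all function names are hypothetical. Each client trains on its own samples, and only the resulting weight is sent to the server.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately by one client


def local_update(w: float, data: List[Sample], lr: float = 0.05, steps: int = 5) -> float:
    """Run a few gradient-descent steps for y = w * x on one client's own data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w: float, clients: List[List[Sample]]) -> float:
    """One round: clients train locally, the server averages the returned weights."""
    total = sum(len(data) for data in clients)
    updates = [(local_update(w, data), len(data)) for data in clients]
    # Weight each client's result by how many samples it trained on.
    return sum(w_i * n for w_i, n in updates) / total


# Hypothetical clients whose data follow y = 3x; raw samples never leave a client.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

w = 0.0
for _ in range(20):
    w = federated_average(w, clients)
print(f"Learned weight after 20 rounds: {w:.3f}")
```

The server only ever sees model weights and sample counts, which is the property that makes the approach attractive for privacy-sensitive data. Real deployments typically add safeguards, such as secure aggregation, that this sketch leaves out.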

Top Use Cases

- Healthcare Diagnostics: AI is being used to assist doctors in diagnosing diseases more accurately. It can analyze medical images or patient data to spot early signs of conditions like cancer, helping doctors make better decisions. By using AI, healthcare professionals can catch problems early and improve patient outcomes.

- Bias Detection in Hiring: AI can help companies ensure their hiring processes are fair by spotting potential biases in job applications. It looks at factors like gender or ethnicity that might affect the decision-making process and helps remove unfair advantages or disadvantages. This makes the hiring process more inclusive and diverse.

- Sustainable Agriculture: Farmers use AI to monitor soil health and predict weather patterns, ensuring that crops are grown sustainably. This helps in reducing the overuse of resources like water and fertilizers, improving both yield and environmental impact. It makes farming smarter and more eco-friendly.

- Smart City Traffic Management: AI helps in managing city traffic by predicting traffic patterns and adjusting signals accordingly, reducing congestion and pollution. This makes commuting faster and more efficient, benefiting both the environment and citizens’ quality of life. It’s like having a traffic controller for the entire city.

- Personalized Learning in Education: AI can adapt to a student’s learning pace, providing customized lessons and feedback. This helps each student learn better by focusing on their specific strengths and weaknesses. It makes education more accessible and engaging for everyone.
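As an illustration of the hiring use case above, one simple bias check compares how often applicants from each group advance to the next stage. The sketch below uses made-up screening outcomes and hypothetical function names. The 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in U.S. employment guidance; it is a screening signal, not a legal determination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of applicants advanced to the next stage, per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, advanced in records:
        totals[group] += 1
        selected[group] += int(advanced)
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical screening outcomes: (group label, advanced to interview?)
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)

rates = selection_rates(records)
ratio = impact_ratio(rates)
# Assumption: flag for human review when the ratio falls below 0.8.
flagged = ratio < 0.8
print(rates, round(ratio, 3), flagged)
```

A flagged result does not prove discrimination. It marks a process that deserves a closer human look, in line with the human-in-the-loop approach described earlier in the report.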

Major Challenges

- Bias in AI Models: AI systems can unintentionally inherit biases from the data they are trained on, which may result in unfair decision-making. This can lead to discrimination in areas like hiring, loan approvals, or law enforcement. Tackling this bias requires diverse datasets and continuous monitoring to ensure fairness.

- Lack of Transparency: Many AI algorithms work like a “black box,” meaning it’s often unclear how decisions are made. This can be problematic in sectors like healthcare or finance where accountability is crucial. To solve this, more AI models need to be interpretable and transparent to ensure trust and fairness.

- Privacy Concerns: AI systems require vast amounts of data, which can raise concerns about individual privacy. People are increasingly worried about how their personal data is being used, stored, and shared. It’s essential for regulations to evolve and protect privacy while still allowing AI to thrive.

- Accountability in Case of Failure: If an AI system makes a mistake, it can be hard to pinpoint who is responsible – whether it’s the developers, the company, or the machine itself. Clear guidelines are needed to hold someone accountable for AI failures, especially in critical areas like self-driving cars or medical diagnoses.

- Job Displacement and Economic Impact: AI can automate many tasks, potentially displacing workers in sectors like manufacturing, retail, or transportation. While it can create new jobs, the shift requires reskilling and policies to help workers transition into new roles. The economic impact of AI needs to be carefully managed to avoid increasing inequality.

Market Opportunities for Key Players

- AI Governance and Compliance Solutions: With increasing regulatory scrutiny around AI, there’s a growing demand for solutions that ensure AI systems are compliant with laws and regulations. Companies offering tools for AI governance, risk management, and compliance can capitalize on this trend. AI companies that develop platforms to monitor and ensure adherence to ethical guidelines and regulations will be well-positioned to serve businesses across sectors.

- Ethical AI in Healthcare: The healthcare sector is increasingly relying on AI for diagnostics, patient management, and drug discovery. Companies that focus on ethical AI in healthcare will play a pivotal role in shaping the future of medical AI technologies. There is significant demand for responsible AI tools that enhance patient outcomes while safeguarding privacy and reducing biases in medical decision-making.

- AI for Sustainable Development: Responsible AI companies that focus on creating technologies to address global environmental challenges have an opportunity to make a lasting impact. AI solutions that help monitor environmental changes, optimize energy usage, or advance sustainable agricultural practices are becoming increasingly important. Companies can tap into the growing demand for AI tools that aid in achieving the United Nations Sustainable Development Goals (SDGs).

- AI in Financial Services for Fair Lending: Financial institutions are under pressure to ensure that their AI-driven lending algorithms are free from biases that may discriminate against underrepresented groups. Responsible AI companies can offer tools that help financial organizations audit their algorithms and ensure fairness in credit scoring, loan approvals, and risk assessments. By developing solutions that promote fairness and transparency in AI-driven financial services, companies can build trust with both consumers and regulators.

- AI Ethics Training and Education: Responsible AI companies can offer training programs, workshops, and consulting services to help organizations understand how to use AI responsibly and mitigate risks associated with unethical practices. There’s a growing market for educational resources that help organizations integrate ethical AI practices into their operations.

Recent Developments

- August 2024: Businesses are partnering with AI companies and integrating AI into their operations. Ethical AI companies emphasize research transparency, safety, accountability, and the alignment of AI with human values.

- September 2024: Microsoft and G42 are partnering to advance Responsible AI by opening two centers in Abu Dhabi. One center, co-founded by G42 and Microsoft with the support of Abu Dhabi’s AIATC, will focus on promoting responsible AI practices in the Middle East and Global South. The second is the expansion of Microsoft’s AI for Good Research Lab in Abu Dhabi, aimed at supporting AI projects for societal impact.

- PwC’s 2024 US Responsible AI Survey indicates that the top five reported benefits of Responsible AI relate to risk management and cybersecurity program enhancements, improved transparency, customer experience, coordinated AI management, and innovation.

Conclusion

In summary, the responsible AI market is rapidly expanding as organizations and governments recognize the importance of ethical AI practices. Companies are increasingly investing in frameworks, tools, and technologies that promote fairness, transparency, and accountability in AI systems. With growing public awareness and regulatory pressure, responsible AI has become integral to building trust and ensuring that AI technologies are aligned with societal values.

As the market continues to mature, collaboration between businesses, regulators, and academia will be crucial in establishing standardized practices and policies. The continued development of ethical AI solutions will drive innovation while mitigating risks associated with bias, privacy, and security concerns. The future of responsible AI looks promising, with an increasing emphasis on creating systems that benefit both businesses and society at large.
