
Introduction

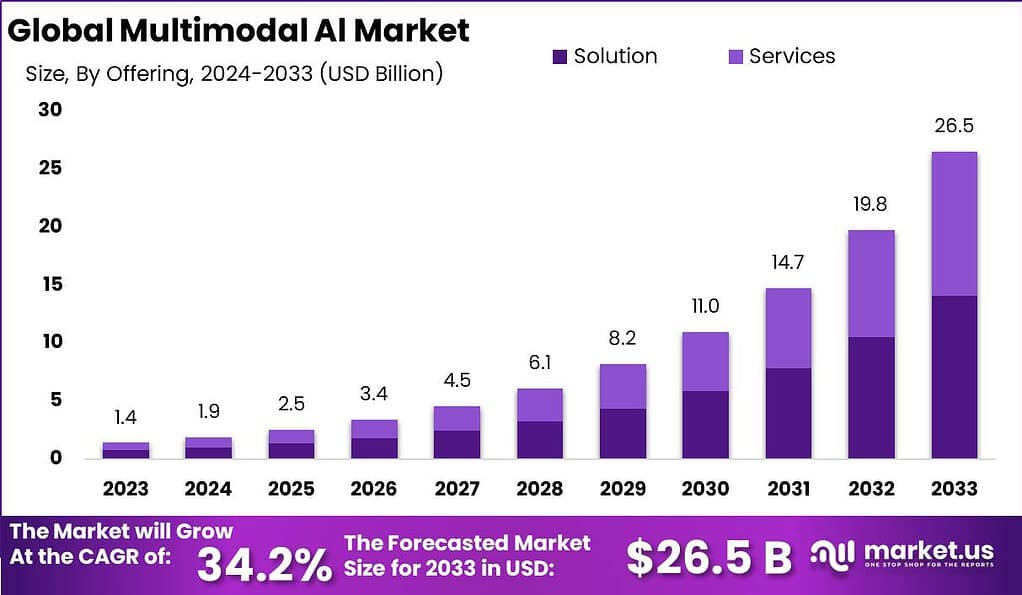

According to Market.us, the global multimodal AI market is expected to be worth around USD 26.5 billion by 2033, up from USD 1.4 billion in 2023, growing at a CAGR of 34.2% during the forecast period from 2024 to 2033. Multimodal artificial intelligence (AI) refers to AI systems that integrate different modes of information, such as text, images, speech, and gestures. This approach enables machines to understand and interact with humans in a more natural and intuitive manner.
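As a quick sanity check on these figures: the compound annual growth rate (CAGR) that turns a start value into an end value over n years is (end value / start value)^(1/n) - 1. The short Python sketch uses only the Market.us figures quoted above. It assumes that 2023 to 2033 counts as ten yearly compounding steps, which is an assumption about how the forecast period is counted. From those figures it recovers the implied rate and projects the 2033 value from the stated 34.2% rate.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant yearly growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the Market.us estimate (USD billion).
START_2023 = 1.4
END_2033 = 26.5
YEARS = 10  # assumption: growth compounds once per year, 2024 through 2033

implied_rate = cagr(START_2023, END_2033, YEARS)
projected_2033 = project(START_2023, 0.342, YEARS)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2033 value at a 34.2% CAGR: USD {projected_2033:.1f} billion")
```

Both directions agree to within rounding (roughly 34.2% and roughly USD 26.5 billion), so the headline numbers are consistent with each other. Keep in mind that a CAGR is a smoothed average and says nothing about the actual year-by-year path of the market.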

The multimodal AI market is expanding as industries recognize its potential to drive innovation and efficiency. Growth is fueled by demand for sophisticated AI systems that deliver more accurate insights and better user experiences in sectors such as healthcare, automotive, media, and retail. Businesses are investing in multimodal AI because it can process complex datasets that combine several data types, improving decision-making and operational efficiency. Key market players are developing robust multimodal AI solutions to gain a competitive edge, reflecting a broader shift toward more interactive and intuitive AI interfaces.

Demand for multimodal AI is driven by the growing need for natural and intuitive human-machine interaction. Industries such as healthcare, e-commerce, entertainment, and automotive are adopting multimodal AI to create personalized and engaging customer experiences. Advances in deep learning, computer vision, and natural language processing have also contributed significantly to its progress.

Multimodal AI Statistics

- The global multimodal AI market is expected to be worth around USD 26.5 billion by 2033, up from USD 1.4 billion in 2023, growing at a CAGR of 34.2% from 2024 to 2033.

- The Solution segment led the market, with a 53.2% share.

- The Cloud segment also held a strong position, capturing more than 61% of the market.

- The Machine Learning segment took a significant share, holding 32.6% of the market.

- The BFSI (Banking, Financial Services, and Insurance) segment dominated with a 28.5% share.

Emerging Trends

- Increasing Demand for GPUs: As adoption of multimodal AI accelerates, demand for GPUs is rising. This puts pressure on hardware production and spurs the development of more cost-effective solutions.

- Cloud-Based Multimodal AI Deployment: Multimodal AI is increasingly deployed on cloud platforms, as organizations turn to cloud infrastructure for its scalability and cost efficiency.

- Rise of Smaller, Efficient AI Models: AI models are being optimized to be smaller and more efficient, serving businesses that need less resource-intensive solutions.

- Customization and Local Deployment: Enterprises are focusing on customizing AI models and deploying them locally to leverage proprietary data while maintaining data privacy and reducing reliance on large, centralized AI providers.

- Expansion in Developing Regions: The Asia Pacific region is seeing rapid growth in multimodal AI adoption, driven by digital transformation in industries such as e-commerce and healthcare.

Top Use Cases of Multimodal AI

- Enhanced Virtual Assistants: Multimodal AI is enabling more sophisticated virtual assistants that can understand and process multiple forms of data simultaneously, improving user interaction across platforms.

- Advanced Healthcare Applications: In healthcare, multimodal AI is used to analyze medical images alongside other patient data and to support personalized treatment plans, improving patient care.

- Secure Financial Services: In the BFSI sector, the technology plays an important role in secure customer authentication and fraud detection, using features such as facial recognition and voice recognition.

- Interactive Media and Entertainment: Multimodal AI plays a significant role in media by analyzing user engagement across different modalities to optimize content delivery and advertising strategies.

- Efficient Retail and E-Commerce: By analyzing customer interactions and behaviors across multiple channels, multimodal AI enhances personalization and service delivery in the retail sector.

Major Challenges

- Data Privacy Concerns: The integration of various data types raises significant privacy issues, necessitating stringent data protection measures.

- Complexity in Integration: The diverse nature of data sources requires sophisticated systems to integrate and process data effectively, posing a technical challenge.

- High Costs of Implementation: Although cloud deployment reduces upfront costs, the overall implementation of multimodal AI can be expensive, especially for small enterprises.

- Regulatory and Ethical Issues: As AI systems become more autonomous, there is a growing need for robust regulatory frameworks to manage ethical concerns and ensure safe use.

- Hardware Dependencies: The reliance on advanced hardware like GPUs and the need for robust infrastructure can limit the adoption of multimodal AI in resource-constrained environments.

Market Opportunities

- Expansion into Emerging Markets: Ongoing digital transformation in regions such as Asia Pacific presents significant opportunities for deploying multimodal AI across various sectors.

- Innovations in AI Hardware: The growing need for efficient and cost-effective hardware offers opportunities for innovation in AI model deployment and management.

- Tailored AI Solutions for SMEs: There is a growing market for customized AI solutions that cater specifically to the needs of small and medium enterprises.

- Enhanced Data Analytics Services: With the ability to process and analyze multiple data types, multimodal AI can offer more comprehensive analytics services, driving demand in sectors such as finance and healthcare.

- Adoption in Non-Traditional Sectors: There is potential for multimodal AI to be adopted in sectors like agriculture and manufacturing, where it can improve efficiency and productivity through better data utilization.

Recent Developments

- In March 2023, OpenAI released GPT-4, the new model behind its ChatGPT tool. Unlike its predecessor, GPT-4 can accept both text and images as input. For example, it can look at pictures of your closet and help you make a list of what to pack for a trip.

- In June 2023, Microsoft introduced Kosmos-2, a multimodal large language model that links phrases in a text description to the corresponding regions of an image. This capability is known as grounding. Tying these two types of information together makes Kosmos-2 a capable tool for tasks that involve more than one type of data.

- In December 2023, Meta (the company behind Facebook) announced plans to add multimodal AI features to its smart glasses, which are made in partnership with Ray-Ban. With these features, you could speak to your glasses and they would use their built-in cameras and microphones to tell you about what you’re seeing around you.

- In the same month, Alphabet Inc., Google’s parent company, introduced an AI model called Gemini. According to Google, its largest version, Gemini Ultra, was the first model to outperform human experts on MMLU (Massive Multitask Language Understanding). MMLU is a benchmark that tests knowledge and problem-solving across 57 subjects, including math, history, law, and medicine.

Conclusion

The multimodal AI market is experiencing significant growth and holds great potential for the future. Integrating multiple modes of information, such as text, images, speech, and gestures, is transforming a wide range of industries and applications. Demand is driven by the need for more natural and intuitive human-machine interaction. Industries such as healthcare, e-commerce, entertainment, and automotive are actively adopting multimodal AI to enhance user experiences and deliver personalized services.

Companies are investing heavily in research and development to create advanced multimodal AI technologies. Advances in deep learning, computer vision, and natural language processing are fueling this progress, enabling machines to understand and interpret multimodal data more effectively.
