Table of Contents

- LLM Observability Platform Market Introduction

- How Growth is Impacting the Economy

- Impact on Global Businesses

- Strategies for Businesses

- Key Takeaways

- Analyst Viewpoint

- Use Case and Growth Factors

- Regional Analysis

- Business Opportunities

- Key Segmentation Overview

- Key Player Analysis

- Recent Developments

- Conclusion

LLM Observability Platform Market Introduction

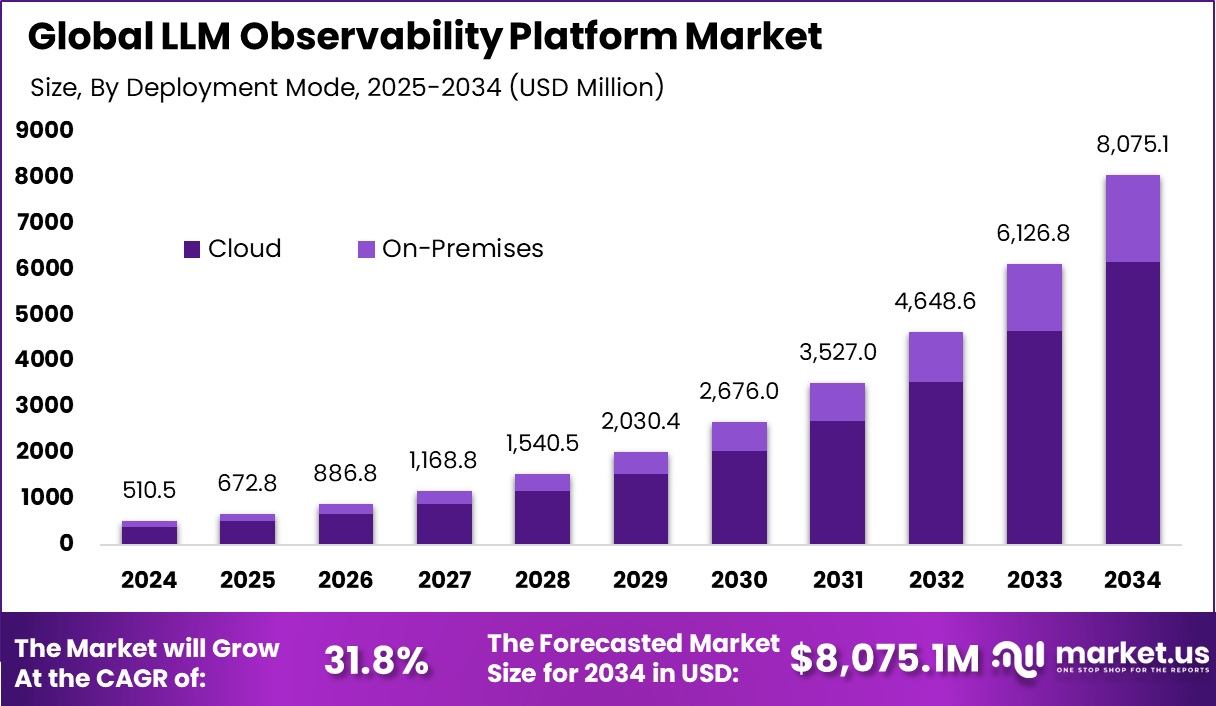

The global LLM observability platform market is expanding rapidly as enterprises scale large language model deployments across business functions. The market generated USD 510.5 million in 2024 and is projected to grow from USD 672.8 million in 2025 to about USD 8,075.1 million by 2034, a CAGR of 31.8% over the 2025 to 2034 forecast period.
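As a quick arithmetic check on the figures above, the compound annual growth rate can be recomputed from the 2025 and 2034 values. This is a minimal sketch, not the report's methodology: the function name `cagr` is illustrative, and it assumes nine compounding years between 2025 and 2034.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD millions.
value_2025 = 672.8
value_2034 = 8075.1

# Assumption: 2025 -> 2034 spans nine compounding years.
rate = cagr(value_2025, value_2034, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 31.8%"
```

The same rate also links the 2024 and 2025 figures: USD 510.5 million grown by 31.8% gives roughly USD 672.8 million.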

LLM observability platforms provide monitoring, evaluation, tracing, and governance for AI models in production environments. North America dominated in 2024 with over 38.0% share and USD 193.9 million in revenue, supported by early AI adoption, cloud maturity, and strong enterprise demand for responsible AI operations.

How Growth is Impacting the Economy

Growth in LLM observability platforms is strengthening the AI economy by enabling safer, more reliable deployment of generative AI at scale. As enterprises adopt LLMs for customer service, software development, and decision support, observability tools reduce model failures, bias risks, and compliance issues. This improves productivity while lowering the economic cost of AI errors.

Governments benefit from better oversight of AI usage in regulated sectors such as finance, healthcare, and public services. The market’s expansion is also creating demand for AI engineers, MLOps specialists, and data governance professionals. Over time, robust observability frameworks accelerate AI commercialization, support responsible innovation, and reinforce trust in AI-driven economic systems.

Impact on Global Businesses

Rising Costs and Supply Chain Shifts

Businesses face increased costs related to monitoring infrastructure, evaluation pipelines, and compliance tooling for LLM deployments. However, AI supply chains are shifting toward standardized observability layers that reduce long-term risk, downtime, and model retraining expenses.

Sector-Specific Impacts

Technology companies rely on observability to manage large-scale AI products. Financial services use it to ensure compliance and auditability. Healthcare organizations depend on monitoring to maintain accuracy and safety. Retail and e-commerce leverage observability to optimize AI-driven personalization and customer engagement.

Strategies for Businesses

Organizations are embedding observability at the design stage of LLM applications rather than treating it as an afterthought. Adoption of unified dashboards for performance, cost, and safety metrics is increasing. Businesses are integrating observability with MLOps and DevOps workflows to enable continuous improvement. Strong governance policies, prompt management, and human-in-the-loop validation are also becoming core strategies to manage AI risk and scalability.

Key Takeaways

- LLM observability is critical for safe and scalable generative AI deployment

- The market is projected to grow at a 31.8% CAGR from 2025 to 2034

- North America leads due to enterprise AI maturity

- Observability reduces operational, compliance, and reputational risks

- Long-term demand is driven by widespread enterprise AI adoption

Analyst Viewpoint

LLM observability platforms are evolving from experimental tools into enterprise-grade infrastructure. Adoption is currently strongest among technology, finance, and AI-first organizations deploying LLMs in production. The outlook remains highly positive as regulatory scrutiny increases and AI systems become more autonomous. Future platforms are expected to incorporate advanced evaluation, explainability, and cost optimization features. Over the forecast period, LLM observability is anticipated to become a foundational layer of enterprise AI stacks worldwide.

Use Case and Growth Factors

| Use Case | Description | Key Growth Factors |
|---|---|---|
| LLM performance monitoring | Tracking accuracy, latency, and output quality | Expansion of production-grade LLM deployments |
| Model safety and bias detection | Identifying harmful or biased outputs | Rising focus on responsible and ethical AI |
| Cost and token usage optimization | Monitoring inference and API costs | Increasing AI infrastructure spending |
| Prompt and workflow evaluation | Testing prompt effectiveness and changes | Rapid iteration of generative AI applications |
| Regulatory compliance auditing | Ensuring traceability and governance | Growing AI regulations and compliance needs |
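To make the performance monitoring and cost tracking use cases concrete, the sketch below wraps a model call and records latency, token counts, and an estimated cost. It is illustrative only and does not represent any vendor's API: `LLMMonitor`, `CallRecord`, the flat `usd_per_1k_tokens` price, and the whitespace-based token count are all assumptions made for the example.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CallRecord:
    latency_s: float
    prompt_tokens: int
    completion_tokens: int
    cost_usd: float


@dataclass
class LLMMonitor:
    # Hypothetical flat price; real providers price input and output differently.
    usd_per_1k_tokens: float
    records: List[CallRecord] = field(default_factory=list)

    def track(self, call: Callable[[str], str], prompt: str) -> str:
        """Run `call(prompt)` and record latency, token counts, and cost."""
        start = time.perf_counter()
        output = call(prompt)
        latency = time.perf_counter() - start

        # Crude proxy: whitespace-separated words stand in for tokens.
        prompt_tokens = len(prompt.split())
        completion_tokens = len(output.split())
        cost = (prompt_tokens + completion_tokens) / 1000 * self.usd_per_1k_tokens

        self.records.append(
            CallRecord(latency, prompt_tokens, completion_tokens, cost)
        )
        return output

    def summary(self) -> Dict[str, float]:
        """Aggregate the recorded calls into simple dashboard-style metrics."""
        n = len(self.records)
        total_latency = sum(r.latency_s for r in self.records)
        return {
            "calls": n,
            "avg_latency_s": total_latency / n if n else 0.0,
            "total_tokens": sum(
                r.prompt_tokens + r.completion_tokens for r in self.records
            ),
            "total_cost_usd": sum(r.cost_usd for r in self.records),
        }


# Usage with a stand-in model function (no real LLM is called).
monitor = LLMMonitor(usd_per_1k_tokens=2.0)
reply = monitor.track(lambda p: "ok fine", "hello there world")
stats = monitor.summary()
print(stats["calls"], stats["total_tokens"])
```

In a production setting the records would typically be exported to a tracing or metrics backend rather than kept in memory, and token counts would come from the provider's response metadata.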

Regional Analysis

North America dominates the LLM observability platform market with more than 38.0% share, driven by advanced cloud infrastructure, early generative AI adoption, and strong enterprise investment. Europe follows with growing emphasis on AI governance and regulatory compliance. Asia Pacific is emerging as a high-growth region due to rapid digital transformation and expanding AI adoption in enterprises. Other regions are gradually adopting observability solutions as AI deployment scales.

Business Opportunities

Significant opportunities exist in integrated observability platforms that combine monitoring, evaluation, and governance. Demand is rising for industry-specific solutions tailored to regulated sectors. AI cost optimization and performance analytics offer strong value creation potential. Emerging markets present long-term opportunities as enterprises adopt generative AI at scale. Providers offering scalable, secure, and regulation-ready platforms are well-positioned for sustained market growth.

Key Segmentation Overview

The market is segmented by component into software platforms and services, with software accounting for the dominant share. By deployment mode, cloud-based observability platforms lead adoption, while hybrid models are gaining traction. By application, segments include performance monitoring, safety evaluation, cost management, and compliance. End users span technology companies, financial services, healthcare, retail, and government organizations, with technology and finance leading adoption.

Key Player Analysis

Market participants focus on improving model transparency, real-time monitoring, and governance capabilities. Competitive differentiation is driven by evaluation depth, scalability, and ease of integration with AI stacks. Continuous innovation in safety metrics, explainability, and automation strengthens positioning. Long-term competitiveness depends on adaptability to evolving AI regulations and support for multiple LLM architectures and providers.

- Arize AI, Inc.

- Weights & Biases, Inc.

- Datadog, Inc.

- Dynatrace LLC

- New Relic, Inc.

- Splunk Inc.

- IBM Corporation

- Microsoft Corporation

- Google LLC

- NVIDIA Corporation

- Honeycomb.io, Inc.

- Lightstep, Inc.

- Tecton, Inc.

- Monte Carlo Data, Inc.

- Superwise, Inc.

- Others

Recent Developments

- Increased enterprise adoption of LLM monitoring and evaluation tools

- Integration of observability platforms with MLOps pipelines

- Rising focus on AI safety, bias detection, and governance features

- Expansion of cost optimization and token usage analytics

- Growing regulatory-driven demand for AI traceability solutions

Conclusion

LLM observability platforms are becoming essential to enterprise AI success. Rapid growth, led by North America, highlights rising demand for safe, transparent, and scalable AI operations, positioning observability as a cornerstone of future generative AI ecosystems.
