Introduction

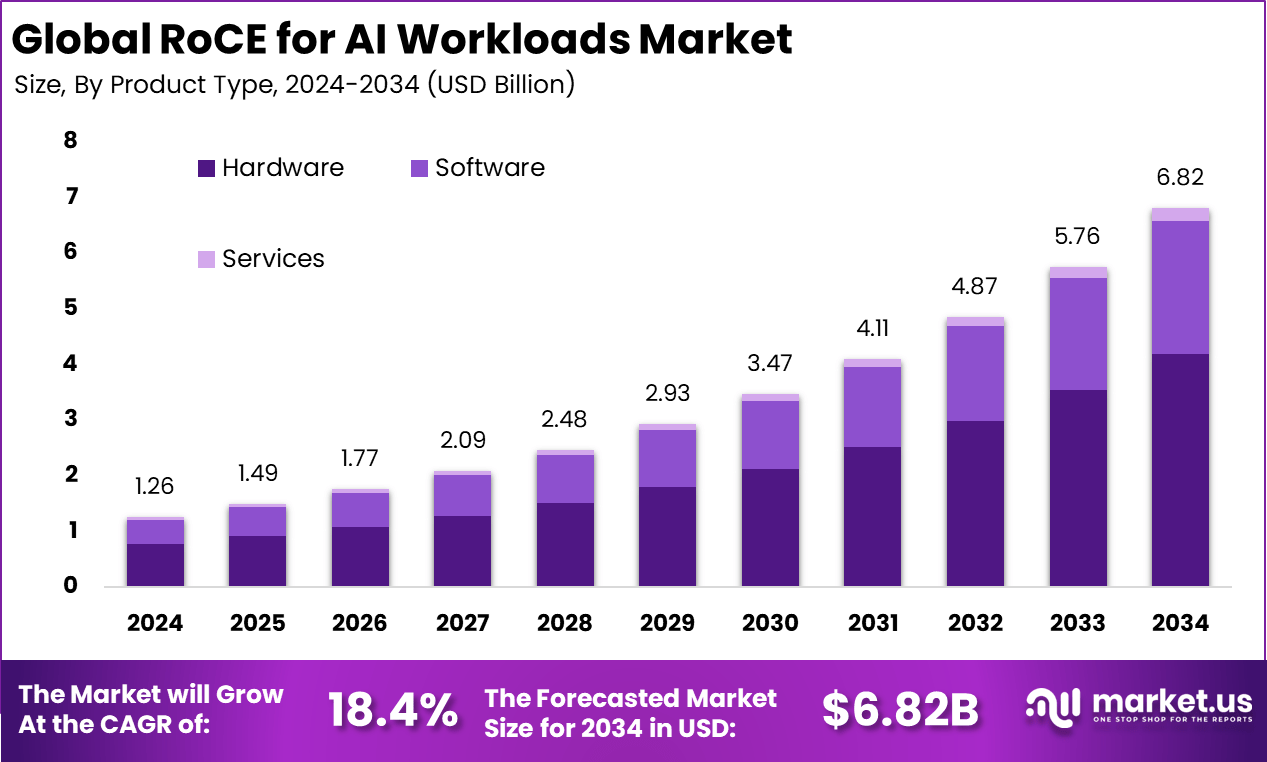

The Global RoCE (RDMA over Converged Ethernet) for AI Workloads Market reached USD 1.26 billion in 2024 and is projected to grow to USD 6.82 billion by 2034, a CAGR of 18.4%. Adoption is increasing as data-intensive industries require low-latency, high-throughput networking to accelerate AI training and inference. North America dominated the market with a 52.6% share and USD 0.66 billion in revenue in 2024. Growing deployment of AI clusters, cloud data centers, and HPC environments continues to fuel rapid RoCE adoption for efficient, scalable AI workload processing.
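As a quick sanity check on the headline figures, the sketch below recomputes the growth rate and the North American revenue from the numbers quoted above. It assumes a 10-year compounding period (2024 to 2034) and the standard CAGR formula; the function and variable names are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted in the introduction (USD billion).
market_2024 = 1.26
market_2034 = 6.82
north_america_share = 0.526

# Assumption: growth compounds annually over the 10 years from 2024 to 2034.
rate = cagr(market_2024, market_2034, years=10)
north_america_revenue = market_2024 * north_america_share

print(f"Implied CAGR: {rate:.1%}")
print(f"North America revenue 2024: USD {north_america_revenue:.2f} billion")
```

Under these assumptions the implied rate and regional revenue round to the 18.4% and USD 0.66 billion stated in the text, so the quoted figures are internally consistent.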

How Growth is Impacting the Economy

The expansion of RoCE-enabled AI infrastructure significantly strengthens global productivity as enterprises accelerate AI model execution, reduce compute delays, and improve resource utilization. This shift boosts economic efficiency by enabling faster insights for industries such as finance, automotive, manufacturing, and healthcare. Governments and enterprises benefit from faster R&D cycles, enhanced automation, and reduced operational waste.

RoCE adoption also supports large-scale digital transformation, creating demand for advanced data centers, high-speed interconnects, and specialized chipsets. The market’s growth stimulates job creation across cloud engineering, networking architecture, and AI optimization roles. Rapid adoption of RoCE-based systems enhances national AI competitiveness, encouraging long-term investments and supporting the global transition toward high-performance, latency-optimized digital economies.

Impact on Global Businesses

Global businesses face rising infrastructure investments as AI workloads become more compute-intensive, requiring high-performance networking, accelerators, and optimized clusters. Supply chains shift to source RDMA-enabled hardware and low-latency fabrics to support real-time AI analytics. Sectors such as autonomous vehicles, financial trading, genomics, retail personalization, cybersecurity, and robotics witness strong performance gains as RoCE reduces bottlenecks and speeds up inference. Industries dependent on massive data streams accelerate migration to RoCE-based cloud platforms to sustain competitive performance levels.

Strategies for Businesses

- Modernize AI clusters with RDMA-enabled interconnects.

- Adopt hybrid or multi-cloud deployment for scalable AI operations.

- Prioritize network optimization to reduce latency and training time.

- Invest in GPU-dense architectures and RoCE-compliant NICs.

- Strengthen AI workload monitoring and performance analytics.

- Collaborate with hardware vendors to future-proof data-center expansion.

Key Takeaways

- Strong demand driven by high-speed AI networking requirements.

- North America leads with 52.6% market share.

- Industries shift toward RDMA-enabled cloud and HPC environments.

- RoCE reduces latency bottlenecks, improving AI training performance.

- Future growth supported by advanced chipsets and next-gen AI workloads.

Analyst Viewpoint

The market shows robust momentum as enterprises transition from conventional TCP/IP-based Ethernet to RoCE-optimized networking that supports advanced AI models. Demand remains strong in data-intensive sectors where low latency is essential. Looking ahead, increased adoption of generative AI, hyperscale cloud expansion, and the rise of edge-AI workloads will accelerate global RoCE usage. As computing becomes more distributed and real-time driven, RoCE will remain critical for achieving high throughput and operational efficiency across global AI ecosystems.

Use Case & Growth Factors

| Category | Details |
|---|---|
| Key Use Cases | AI model training, inference acceleration, HPC workloads, autonomous systems, real-time analytics, cloud AI optimization |
| Growth Factors | Rising AI compute demand, hyperscale data-center expansion, RDMA adoption, GPU/accelerator proliferation, need for low-latency networking |

Regional Analysis

North America dominates with a 52.6% share, driven by extensive AI R&D, hyperscaler investments, and strong demand for advanced networking. Europe is expanding steadily as AI adoption grows across automotive, industrial automation, and enterprise settings. Asia Pacific is emerging as the fastest-growing region due to strong investments in national AI programs, semiconductor manufacturing, and cloud infrastructure. Latin America, the Middle East, and Africa are gradually adopting RoCE solutions as digitalization accelerates and demand for AI workloads increases across banking, telecom, and government segments.

Business Opportunities

Significant opportunities emerge in RDMA-optimized cloud platforms, next-gen NICs, programmable switches, AI accelerator integration, and high-bandwidth data-center fabrics. Vendors can expand in emerging markets adopting AI at scale and offer managed high-speed networking services for enterprises transitioning to RoCE clusters. Opportunities also include performance-tuning software tools, AI workload orchestration platforms, and custom RoCE deployments for robotics, automotive, and industrial automation.

Key Segmentation

The market comprises components such as adapters, switches, accelerators, and RoCE-enabled software stacks. Applications include AI training, inference, HPC modeling, real-time analytics, and distributed deep-learning operations. Deployment models span cloud, hybrid cloud, and on-premises HPC clusters. Key end-users include cloud providers, enterprises, research institutions, autonomous technology developers, financial analytics firms, and industrial automation providers.

Key Player Analysis

Leading participants focus on high-performance networking innovation, integrating advanced RDMA capabilities and enhancing support for large-scale AI clusters. Strategies prioritize optimizing throughput, reducing latency, and enabling efficient inter-GPU communication. Vendors invest in chip-level acceleration, scalable fabric architectures, and software-defined network orchestration. Strategic partnerships with cloud providers and AI system integrators strengthen their market position. Continuous optimization for generative AI and edge-AI workloads remains central to gaining a competitive advantage.

- NVIDIA

- AMD

- Intel

- Cisco

- Arista Networks

- Broadcom

- Marvell

- Dell Technologies

- HPE

- Juniper Networks

- International Business Machines Corporation

- Fujitsu

- Lenovo Group Ltd

- Supermicro

- Inspur

- Others

Recent Developments

- Launch of high-bandwidth RoCE-enabled NICs for AI clusters.

- Expansion of RDMA-optimized switches supporting next-gen accelerators.

- Integration of RoCE with cloud AI platforms for faster training cycles.

- Introduction of performance-tuning tools for distributed AI workloads.

- Partnerships to develop scalable RoCE architectures for hyperscale data centers.

Conclusion

The RoCE for AI Workloads Market is rising rapidly as enterprises demand high-speed, low-latency infrastructure for next-generation AI applications. With strong global adoption and expanding use cases, RoCE remains essential for powering scalable, high-performance AI ecosystems.
